Image by Author

Recently we’ve all been having a super hard time catching up on the latest releases in the LLM space. In the last few weeks, several open-source ChatGPT alternatives have become popular.

And in this article we’ll learn about the ChatGLM series and ChatGLM-6B, an open-source and lightweight ChatGPT alternative.

Let's get going!

What is ChatGLM?

Researchers at the Tsinghua University in China have worked on developing the ChatGLM series of models that have comparable performance to other models such as GPT-3 and BLOOM.

ChatGLM is a bilingual large language model trained on both Chinese and English. Currently, the following models are available:

- ChatGLM-130B: an open-source LLM

- ChatGLM-100B: not open-sourced, but available through invite-only access

- ChatGLM-6B: a lightweight open-source alternative

Though these models may seem similar to the Generative Pretrained Transformer (GPT) group of large language models, the General Language Model (GLM) pretraining framework is what makes them different. We’ll learn more about this in the next section.

How Does ChatGLM Work?

In machine learning, you'd know GLMs as generalized linear models, but the GLM in ChatGLM stands for General Language Model.

GLM Pretraining Framework

LLM pre training has been extensively studied and is still an area of active research. Let’s try to understand the key differences between GLM pretraining and GPT-style models.

The GPT-3 family of models use decoder-only auto regressive language modeling. In GLM, on the other hand, optimization of the objective is formulated as an auto regressive blank infilling problem.

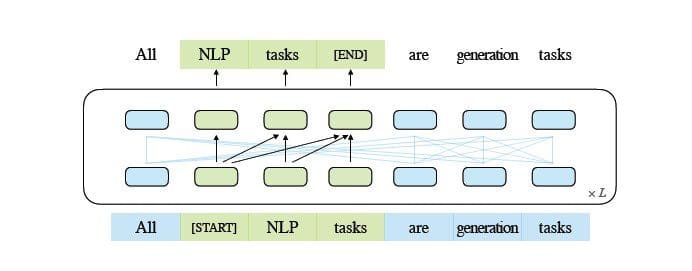

GLM | Image Source

In simple terms, auto regressive blank infilling involves blanking out a continuous span of text, and then sequentially reconstructing the text this blanking. In addition to shorter masks, there is a longer mask that randomly removes long blanks of text from the end of sentences. This is done so that the model performs reasonably well in natural language understanding as well as generation tasks.

Another difference is in the type of attention used. The GPT group of large language models use unidirectional attention, whereas the GLM group of LLMs use bidirectional attention. Using bidirectional attention over unmasked contexts can capture dependencies better and can improve performance on natural language understanding tasks.

GELU Activation

In GLM, GELU (Gaussian Error Linear Units) activation is used instead of the ReLU activation [1].

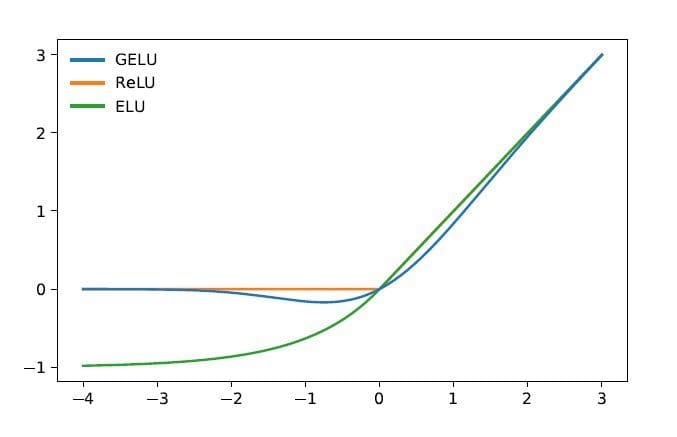

GELU, ReLU, and ELU Activations | Image Source

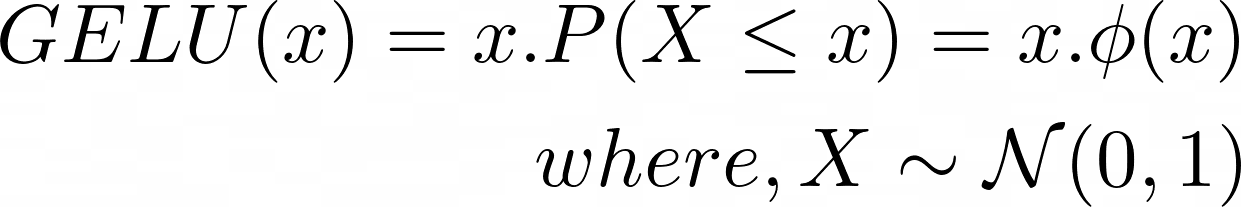

The GELU activation and has non-zero values for all inputs and has the following form [3]:

The GELU activation is found to improve performance in as compared to ReLU activations, though computationally more intensive than ReLU.

In the GLM series of LLMs, ChatGLM-130B which is open-source and performs as well as GPT-3’s Da-Vinci model. As mentioned, as of writing this article, there's a ChatGLM-100B version, which is restricted to invite-only access.

ChatGLM-6B

The following details about ChatGLM-6B to make it more accessible to end users:

- Has about 6.2 billion parameters.

- The model is pre-trained on 1 trillion tokens—equally from English and Chinese.

- Subsequently, techniques such as supervised fine-tuning and reinforcement learning with human feedback are used.

Advantages and Limitations of ChatGLM

Let’s wrap up our discussion by going over ChatGLM’s advantages and limitations:

Advantages

From being a bilingual model to an open-source model that you can run locally, ChatGLM-6B has the following advantages:

- Most mainstream large language models are trained on large corpora of English text, and large language models for other languages are not as common. The ChatGLM series of LLMs are bilingual and a great choice for Chinese. The model has good performance in both English and Chinese.

- ChatGLM-6B is optimized for user devices. End users often have limited computing resources on their devices, so it becomes almost impossible to run LLMs locally—without access to high-performance GPUs. With INT4 quantization, ChatGLM-6B can run with a modest memory requirement of as low as 6GB.

- Performs well on a variety of tasks including summarization and single and multi-query chats.

- Despite the substantially smaller number of parameters as compared to other mainstream LLMs, ChatGLM-6B supports context length of up to 2048.

Limitations

Next, let’s list a few limitations of ChatGLM-6B:

- Though ChatGLM is a bilingual model, its performance in English is likely suboptimal. This can be attributed to the instructions used in training mostly being in Chinese.

- Because ChatGLM-6B has substantially fewer parameters as compared to other LLMs such as BLOOM, GPT-3, and ChatGLM-130B, the performance may be worse when the context is too long. As a result, ChatGLM-6B may give inaccurate information more often than models with a larger number of parameters.

- Small language models have limited memory capacity. Therefore, in multi-turn chats, the performance of the model may degrade slightly.

- Bias, misinformation, and toxicity are limitations of all LLMs, and ChatGLM is susceptible to these, too.

Conclusion

As a next step, run ChatGLM-6B locally or try out the demo on HuggingFace spaces. If you’d like to delve deeper into the working of LLMs, here's a list of free courses on large language models.

References

[1] Z Du, Y Qian et al., GLM: General Language Model Pretraining with Autoregressive Blank Infilling, ACL 2022

[2] A Zheng, X Liu et al., GLM-130B – An Open Bilingual Pretrained Model, ICML 2023

[3] D Hendryks, K Gimpel, Gaussian Error Linear Units (GELUs), arXiv, 2016

[4] ChatGLM-6B: Demo on HuggingFace Spaces

[5] GitHub Repo

Bala Priya C is a technical writer who enjoys creating long-form content. Her areas of interest include math, programming, and data science. She shares her learning with the developer community by authoring tutorials, how-to guides, and more.

- OpenChatKit: Open-Source ChatGPT Alternative

- 8 Open-Source Alternative to ChatGPT and Bard

- Dolly 2.0: ChatGPT Open Source Alternative for Commercial Use

- MiniGPT-4: A Lightweight Alternative to GPT-4 for Enhanced Vision-language…

- The 7 Best Open Source AI Libraries You May Not Have Heard Of

- Top Open Source Large Language Models