Hugging Face, Nvidia, and Johns Hopkins University, along with Answer.AI and LightOn, have announced ModernBERT, a successor to the encoder-only transformer model BERT.

The new model improves on BERT in both speed and accuracy. It also increases the context length to 8k tokens, compared to just 512 tokens for most encoder models, a sixteenfold increase. It was trained on 2 trillion tokens.

ModernBERT is also the first encoder-only model that includes a large amount of code in its training data.

“These features open up new application areas that were previously inaccessible through open models, such as large-scale code search, new IDE features, and new types of retrieval pipelines based on full document retrieval rather than small chunks,” read the announcement on Hugging Face.
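To make the "full document rather than small chunks" point concrete, here is a rough sketch of how many windows a retrieval pipeline would need to cover one document at each context length. The function name `chunks_needed`, the 6,000-token document, and the 64-token overlap are hypothetical values chosen for illustration, and "8k" is assumed to mean 8,192 tokens; none of this is taken from the announcement.

```python
import math

# Context lengths in tokens. 512 is the figure quoted for most encoder models;
# 8192 is an assumed exact value for the "8k" quoted in the article.
BERT_CONTEXT = 512
MODERNBERT_CONTEXT = 8192


def chunks_needed(doc_tokens: int, context_len: int, overlap: int = 0) -> int:
    """Return how many windows of `context_len` tokens cover `doc_tokens` tokens.

    Consecutive windows share `overlap` tokens. This is a hypothetical helper
    for illustration, not part of any library.
    """
    if not 0 <= overlap < context_len:
        raise ValueError("overlap must be non-negative and smaller than context_len")
    if doc_tokens <= context_len:
        return 1  # the whole document fits in a single window
    stride = context_len - overlap
    # One full window, plus enough strides to cover the remaining tokens.
    return 1 + math.ceil((doc_tokens - context_len) / stride)


doc_tokens = 6000  # hypothetical document length

print(MODERNBERT_CONTEXT // BERT_CONTEXT)                      # 16
print(chunks_needed(doc_tokens, BERT_CONTEXT))                 # 12
print(chunks_needed(doc_tokens, BERT_CONTEXT, overlap=64))     # 14
print(chunks_needed(doc_tokens, MODERNBERT_CONTEXT))           # 1
```

Under these assumptions, a document that would have to be split into a dozen or more pieces for a 512-token encoder can be embedded in a single pass at the longer context length.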

A detailed technical report outlining ModernBERT was also published on arXiv. According to the published benchmark results, ModernBERT outperformed several encoder-only models across various tasks.

ModernBERT also ran efficiently on the Nvidia RTX 4090, scoring better than many other encoder-only models. “We’re analysing the efficiency of an affordable consumer GPU, rather than the latest unobtainable hyped hardware,” read the announcement.

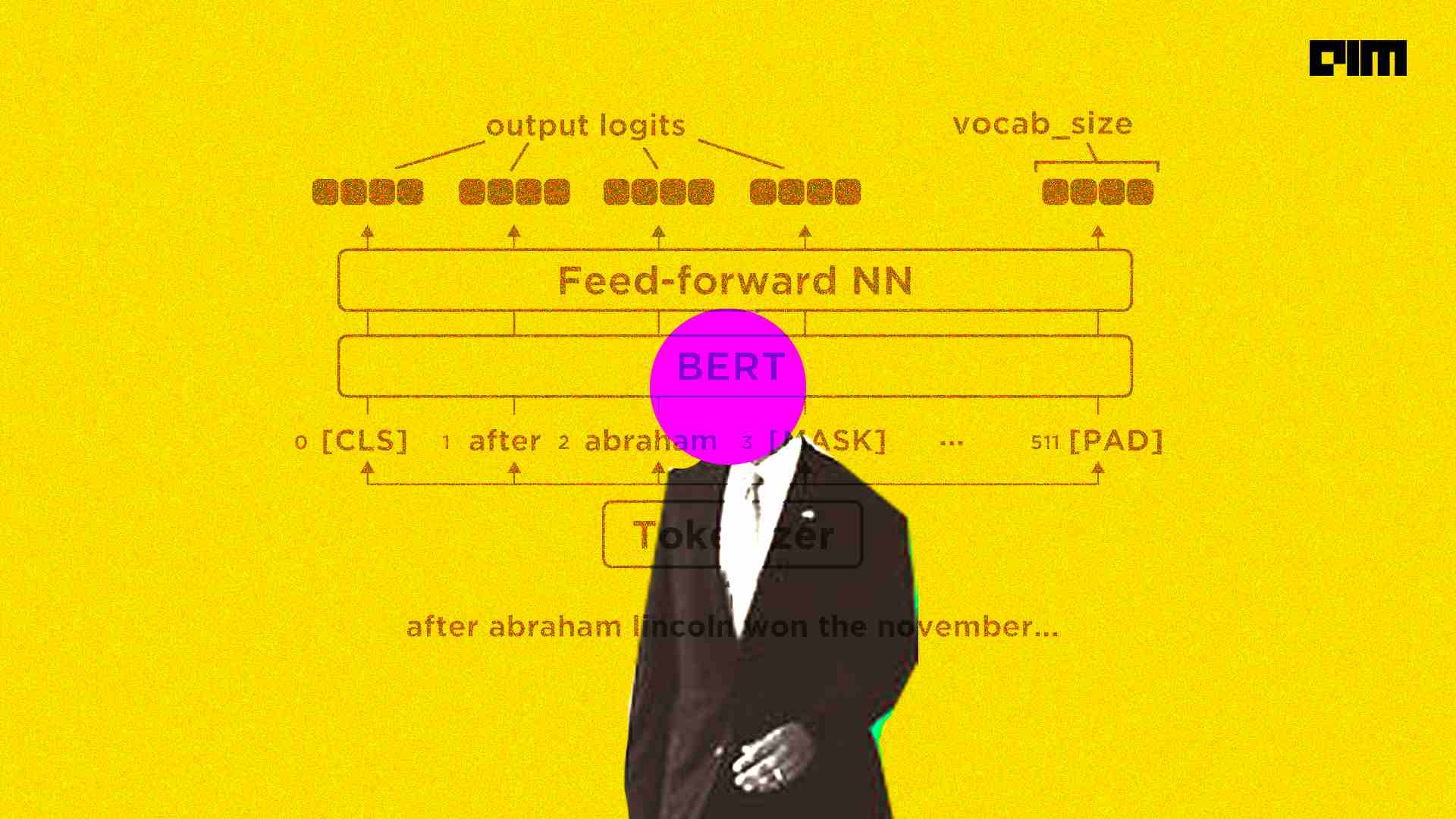

BERT, or Bidirectional Encoder Representations from Transformers, is a language model created by Google in 2018. Unlike popular models such as GPT, Llama, and Claude, which are decoder-only, BERT is an encoder-only model.

In the announcement, Hugging Face likens decoder-only models to Ferraris, a remarkable engineering feat designed to win, while comparing the BERT model to a Honda Civic, an economical and efficient car.

Encoder models efficiently process documents at scale for retrieval without being resource-intensive during inference. Consequently, they continue to be used for tasks such as classification and Named Entity Recognition, as noted in the technical report.

“While LLMs have taken the spotlight in recent years, they have also motivated a renewed interest in encoder-only models for IR [information retrieval],” read the report.

The post BERT Has Finally Found It’s Successor appeared first on Analytics India Magazine.