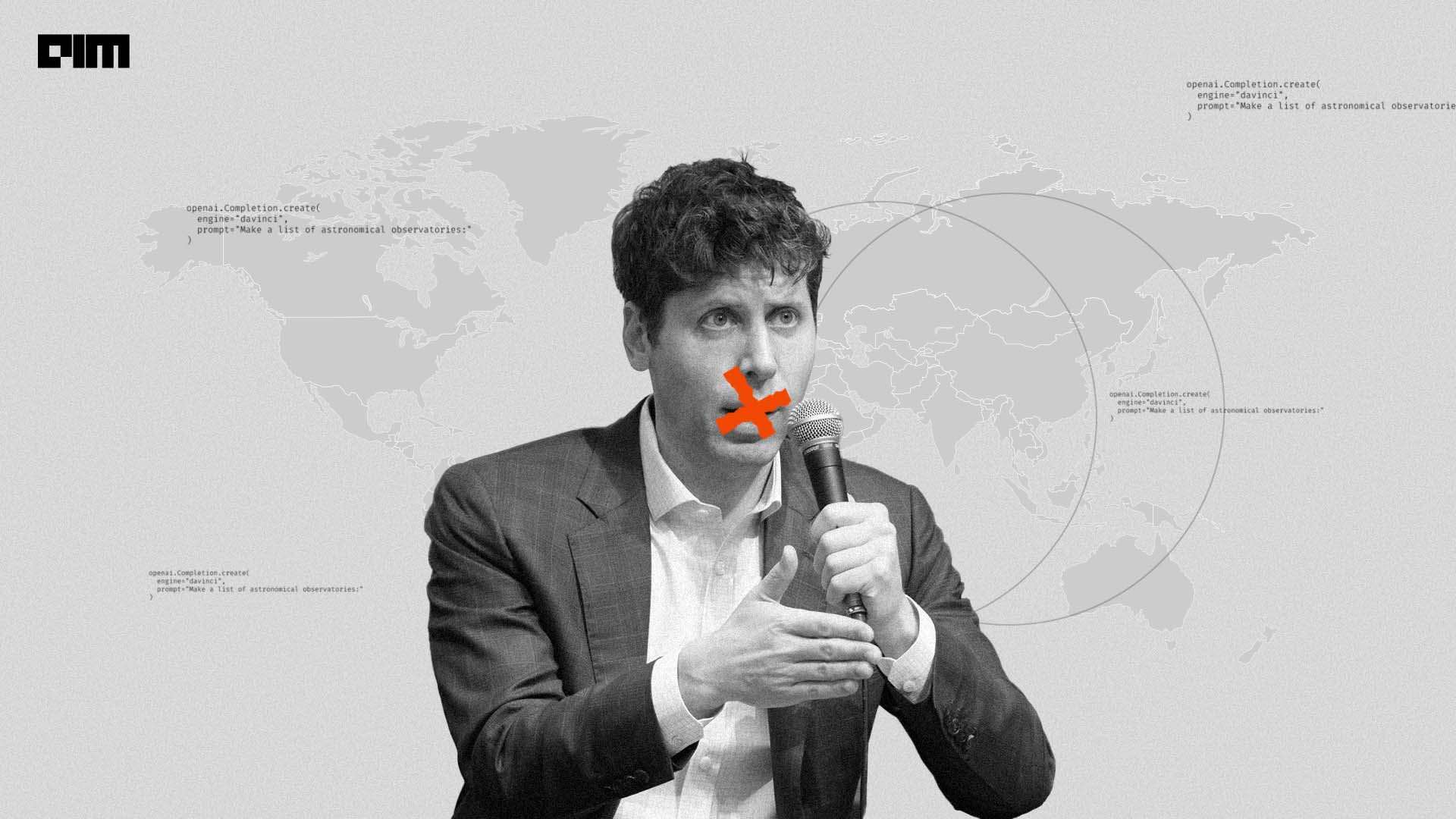

Sam Altman and his team’s discussions around the world had grown repetitive by the time he reached Australia. It was Israel that truly challenged them with difficult questions. Asked by tech leaders, researchers and students, the questions were direct and to the point, which ironically left Altman and Ilya Sutskever speechless.

“Could the open source element potentially match GPT-4’s abilities without additional technical advances, or is there a secret sauce in GPT-4 unknown to the world that sets it apart from the other models?

Or am I wasting my time installing Stable Vicuna 13 billion plus Wizard? Am I wasting my time, tell me?” demanded Ishay Green, a researcher of open source AI and its applications.

This was the very first question put to Altman and Sutskever, and it drew applause before they could answer.

Sutskever responded that there will always be a gap between open source and private models, and that this gap may even widen with time. “The amount of effort, engineering and research it takes to produce one such neural net keeps increasing and so even if there are open source models they will be less and less produced by small groups of dedicated researchers and more from the province of large firms,” he added.

There is some truth to Sutskever’s response: OpenAI has a significant upper hand, mostly because it was among the first to work on such models. It is also true, however, that the open source community is quickly catching up.

Another question that gained traction was, “How was the base model of GPT-4 before you lobotomised it?” It was an interesting choice of words for a question many had on their minds, yet it went without a satisfactory answer. The crowd was a good mix of YouTubers, startup founders and students.

Israel Talks

The discussion was moderated by Prof. Nadav Cohen at Tel Aviv University, where they spoke on open source, the role of academics, climate change, the future of jobs and more. Sutskever took on most of the questions while Altman spoke about policy.

What was impressive about this discussion was not just the questions but the apt follow-ups asked by Cohen. While talking about the contribution of the scientific community and its role in building AI, he asked, “Are you considering models where you maybe publicise things to selected crowds, maybe not open source to the entire world but to scientists or is that something you’re considering?”

To this, Altman explained that a red team of 50 scientists was indeed recruited to adversarially test ChatGPT. Most of the vile content has been reined in since, but some of the scientists say it is impossible to clean up all the harmful content that ChatGPT can spew out, and they advise proceeding with ‘extreme caution’.

Solving Climate Crisis

In response to the question on the role of AI in solving climate change, Sutskever said rather flippantly, “Here’s how you solve climate change, you need a very large amount of efficient carbon capture, you need the energy for the carbon capture, you need the technology to build it and you need to build a lot of it. You not only ask how to do it but also to build it.” Surely this is fantastical thinking, given that the very concept of carbon capture is doubted by many experts and scientists.

AI Regulation

The question of whether OpenAI would sidestep regulation as Mark Zuckerberg did was gracefully handled by Altman. He explained that OpenAI was formed as a company with the ‘superpowers’ of incentives in mind. It has an unusual capped-profit structure, and if incentives are designed right, the behaviour of the company can be steered.

“We don’t have the incentive structure that a company like Facebook had and I think they were very well-meaning people at Facebook. They were just in an incentive structure that had some challenges. Ilya always says we tried to feel the AGI when we were setting up our company originally and then we would set up our profit structure so how do we balance the need for the money for compute with what we care about is this mission,” he said.

Though he speaks of wanting to “warmly embrace regulation” and seems earnest in his interviews, there is a large difference between what he says and what he does.

After this conversation, Altman met Israel’s President Isaac Herzog and said, “I am sure Israel will play a huge role” in reducing risks from artificial intelligence as the country debates how to regulate the technology behind ChatGPT. He then made his way to India after Jordan, Qatar and the United Arab Emirates. There, instead of anything weighty, the more light-hearted conversation revolved around love and the glamour of AI.

The post When Sam Altman was Stumped appeared first on Analytics India Magazine.