Image by Author

We're witnessing an upsurge in open-source language model ecosystems that offer comprehensive resources for individuals to create language applications for both research and commercial purposes.

Previously, we highlighted Open Assistant and OpenChatKit. Today, we'll delve into GPT4ALL, which goes beyond specific use cases by offering comprehensive building blocks that enable anyone to develop a ChatGPT-like chatbot.

What is the GPT4ALL Project?

GPT4ALL is a project that provides everything you need to work with state-of-the-art open-source language models. You can access open-source models and datasets, train and run them with the provided code, interact with them through a web interface or a desktop app, connect them to LangChain as a backend, and integrate them into your own code with the Python API.

The developers recently launched GPT4All-J, an Apache-2 licensed chatbot trained on a large, curated corpus of assistant interactions, including word problems, multi-turn dialogues, code, poems, songs, and stories. To make it more accessible, they have also released Python bindings and a chat UI that let virtually anyone run the model on a CPU.

You can try it yourself by installing the native chat client on your desktop:

- Mac/OSX

- Windows

- Ubuntu

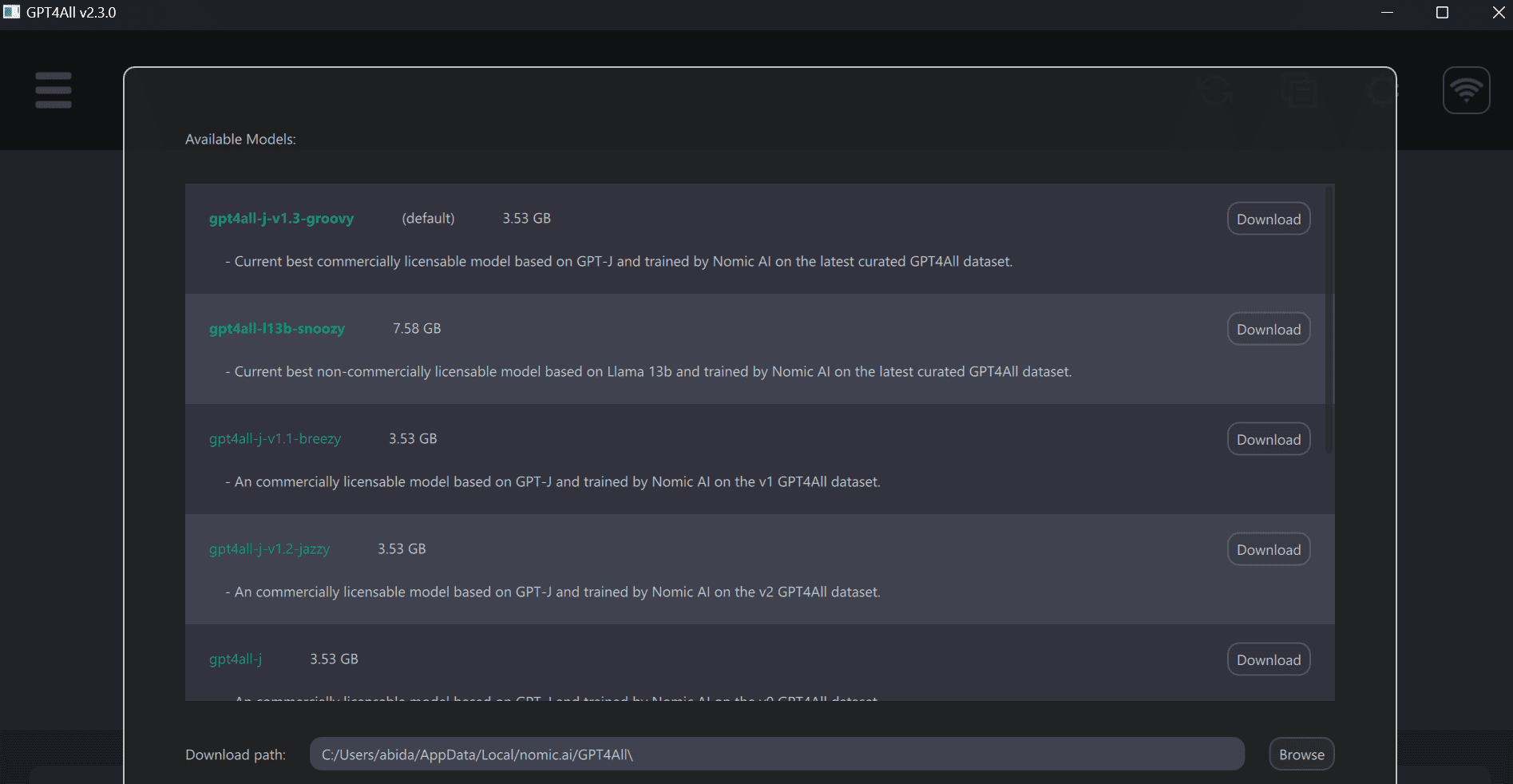

After that, run the GPT4ALL program and download the model of your choice. You can also download models manually here and install them in the location indicated by the model download dialog in the GUI.

Image by Author
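If you prefer to fetch a model file yourself instead of using the download dialog, a short script can do it. This is a minimal sketch, not part of GPT4ALL: `destination_path` and `download_model` are hypothetical helper names, the URL is the snoozy model link from the Python client section, and `models_dir` is an assumption you should replace with the folder shown in the app's download dialog.

```python
import os
import urllib.request
from urllib.parse import urlparse

# Example model URL; other model links can be downloaded the same way.
MODEL_URL = "http://gpt4all.io/models/ggml-gpt4all-l13b-snoozy.bin"


def destination_path(url: str, models_dir: str) -> str:
    """Build the local file path from the last segment of the URL path."""
    filename = os.path.basename(urlparse(url).path)
    return os.path.join(models_dir, filename)


def download_model(url: str, models_dir: str) -> str:
    """Download the model into models_dir unless a copy is already there."""
    os.makedirs(models_dir, exist_ok=True)
    path = destination_path(url, models_dir)
    if os.path.exists(path):
        # Model files are typically several gigabytes, so never re-download.
        return path
    # Write to a temporary name first so an interrupted download
    # is not mistaken for a complete model file.
    tmp_path = path + ".part"
    urllib.request.urlretrieve(url, tmp_path)
    os.replace(tmp_path, path)
    return path
```

Calling `download_model(MODEL_URL, "./models")` fetches the file once and simply returns its path on later calls. The `.part` rename is a design choice to keep half-finished downloads out of the models folder.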

In my experience, it ran smoothly on my laptop and gave fast, accurate responses. It is also user-friendly, making it accessible even to non-technical users.

Gif by Author

GPT4ALL Python Client

GPT4ALL comes with Python and TypeScript bindings, a web chat interface, and a LangChain backend.

In this section, we will use the Python API from nomic-ai/pygpt4all to access the models.

- Install the Python GPT4ALL library using PIP.

pip install pygpt4all

- Download a GPT4All model from http://gpt4all.io/models/ggml-gpt4all-l13b-snoozy.bin. You can also browse other models here.

- Create a text callback function, load the model, and pass a prompt to the model.generate() function to generate text. Check out the library documentation to learn more.

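from pygpt4all.models.gpt4all import GPT4All

# Print each chunk of generated text as soon as it arrives
def new_text_callback(text):
    print(text, end="")

# Load the downloaded model checkpoint from the local models folder
model = GPT4All("./models/ggml-gpt4all-l13b-snoozy.bin")

# Generate up to 55 tokens, streaming them through the callback
model.generate("Once upon a time, ", n_predict=55, new_text_callback=new_text_callback)

Moreover, you can download the model and run inference with Hugging Face Transformers. Just provide the model name and the revision. Here, we load v1.3-groovy, the latest and improved version of the model.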

from transformers import AutoModelForCausalLM

model = AutoModelForCausalLM.from_pretrained("nomic-ai/gpt4all-j", revision="v1.3-groovy")

Getting Started

The nomic-ai/gpt4all repository includes source code for training and inference, model weights, the dataset, and documentation. You can start by trying a few models on your own and then integrate them into your application using the Python client or LangChain.
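When you integrate the Python client into an application, you usually want the full response as a string rather than text printed to the terminal. The sketch below assumes the callback style used with pygpt4all's `model.generate()`, where `new_text_callback` receives each chunk of text. `TextCollector` is a hypothetical helper, and `fake_generate` is a stand-in that mimics the streaming so the example runs without downloading a model.

```python
from typing import Callable, List


class TextCollector:
    """Collects the text chunks passed to a streaming callback into one string."""

    def __init__(self, echo: bool = False) -> None:
        self.chunks: List[str] = []
        self.echo = echo

    def __call__(self, text: str) -> None:
        self.chunks.append(text)
        if self.echo:
            # Also print each chunk as it arrives, like the callback shown earlier.
            print(text, end="", flush=True)

    @property
    def text(self) -> str:
        return "".join(self.chunks)


def fake_generate(prompt: str, new_text_callback: Callable[[str], None]) -> None:
    """Hypothetical stand-in for model.generate(): streams a canned reply chunk by chunk."""
    for chunk in ["there ", "was ", "a ", "llama."]:
        new_text_callback(chunk)


collector = TextCollector()
fake_generate("Once upon a time, ", new_text_callback=collector)
print(collector.text)  # there was a llama.

# With a loaded pygpt4all model, the collector is passed the same way (not run here):
# model.generate("Once upon a time, ", n_predict=55, new_text_callback=collector)
```

Because the collector is just a callable object, it can replace the plain `new_text_callback` function without changing the `generate()` call; set `echo=True` to keep the live terminal output as well.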

GPT4ALL also provides a CPU-quantized GPT4All model checkpoint. To run it:

- Download the gpt4all-lora-quantized.bin file from Direct Link or [Torrent-Magnet].

- Clone the nomic-ai/gpt4all repository and move the downloaded .bin file to the chat folder.

- Run the appropriate command for your platform:

- M1 Mac/OSX:

cd chat; ./gpt4all-lora-quantized-OSX-m1

- Linux:

cd chat; ./gpt4all-lora-quantized-linux-x86

- Windows (PowerShell):

cd chat; ./gpt4all-lora-quantized-win64.exe

- Intel Mac/OSX:

cd chat; ./gpt4all-lora-quantized-OSX-intel

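If you want a single launcher script instead of remembering which command matches your machine, the platform check can be automated. This is a minimal sketch: the executable names come from the commands listed for the chat folder, while `BINARIES`, `chat_binary`, and `run_chat` are hypothetical names, and the mapping assumes the values Python's `platform.system()` and `platform.machine()` typically report on each OS.

```python
import os
import platform
import subprocess
from typing import Optional

# Executable names from the chat folder instructions, keyed by
# (platform.system(), platform.machine()).
BINARIES = {
    ("Darwin", "arm64"): "gpt4all-lora-quantized-OSX-m1",
    ("Darwin", "x86_64"): "gpt4all-lora-quantized-OSX-intel",
    ("Linux", "x86_64"): "gpt4all-lora-quantized-linux-x86",
    ("Windows", "AMD64"): "gpt4all-lora-quantized-win64.exe",
}


def chat_binary(system: Optional[str] = None, machine: Optional[str] = None) -> str:
    """Return the chat executable name for the given OS/CPU, defaulting to this machine."""
    system = system or platform.system()
    machine = machine or platform.machine()
    try:
        return BINARIES[(system, machine)]
    except KeyError:
        raise RuntimeError(f"No prebuilt chat binary for {system}/{machine}") from None


def run_chat(chat_dir: str = "chat") -> None:
    """Rough equivalent of `cd chat; ./<binary>`: run it with chat_dir as working directory."""
    # Use an absolute path so the executable is found on every OS.
    executable = os.path.join(os.path.abspath(chat_dir), chat_binary())
    subprocess.run([executable], cwd=chat_dir, check=True)
```

Calling `run_chat()` from the repository root blocks until you exit the interactive chat. The table only covers the four builds listed, so other platforms, such as Linux on ARM, raise an error instead of guessing a binary.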

You can also head to Hugging Face Spaces and try out the Gpt4all demo. It is not official, but it is a good place to start.

Image from Gpt4all

Resources:

- Technical Report: GPT4All-J: An Apache-2 Licensed Assistant-Style Chatbot

- GitHub: nomic-ai/gpt4all

- Python API: nomic-ai/pygpt4all

- Model: nomic-ai/gpt4all-j

- Dataset: nomic-ai/gpt4all-j-prompt-generations

- Hugging Face Demo: Gpt4all

- ChatUI: nomic-ai/gpt4all-chat: gpt4all-j chat

- GPT4ALL Backend: GPT4All, LangChain 0.0.154

Abid Ali Awan (@1abidaliawan) is a certified data scientist professional who loves building machine learning models. Currently, he is focusing on content creation and writing technical blogs on machine learning and data science technologies. Abid holds a Master's degree in Technology Management and a bachelor's degree in Telecommunication Engineering. His vision is to build an AI product using a graph neural network for students struggling with mental illness.
