Self-Regulation Is the Standard in AI, for Now

April 19, 2023 by Alex Woodie

Are you worried that AI is moving too fast and may have negative consequences? Do you wish there was a national law to regulate it? Well, that’s a club with a fast-growing list of members. Unfortunately, if you reside in the United States, there are no new laws designed to restrict the use of AI, leaving self-regulation as the next-best thing for companies adopting AI–at least for now.

While it’s been many years since “AI” replaced “big data” as the biggest buzzword in tech, the launch of ChatGPT in late November 2022 kicked off an AI gold rush that has taken many AI observers by surprise. In just a few months, a bonanza of powerful generative AI models has captured the world’s attention, thanks to their remarkable capability to mimic human speech and understanding.

Fueled by ChatGPT’s emergence, the extraordinary rise of generative models in mainstream culture has led to many questions about where this is all going. The astonishment that AI can generate compelling poetry and whimsical art is giving way to concern about the negative consequences of AI, ranging from consumer harm and lost jobs all the way to false imprisonment and even annihilation of the human race.

This has some folks very worried. Last month, a consortium of AI researchers called for a six-month pause on the development of new generative models bigger than GPT-4, the massive language model that OpenAI unveiled that same month.

“Advanced AI could represent a profound change in the history of life on Earth, and should be planned for and managed with commensurate care and resources,” states an open letter signed by Turing Award winner Yoshua Bengio and OpenAI co-founder Elon Musk, among others. “Unfortunately, this level of planning and management is not happening.”

Elon Musk says AI could lead to "civilization destruction" (DIA TV/Shutterstock)

Not surprisingly, calls for AI regulation are on the rise. Polls indicate that Americans view AI as untrustworthy and want it regulated, particularly for impactful things such as self-driving cars and receiving government benefits. Musk said that AI could lead to “civilization destruction.”

However, while there are several new local laws targeting AI–such as the one in New York City that focuses on use of AI in hiring, enforcement of which was delayed until this month–there are no new federal regulations specifically targeting AI nearing the finish line in Congress (although AI falls under the rubric of laws already on the books for highly regulated industries like financial services and healthcare).

With all the excitement around AI, what’s a company to do? It’s not surprising that companies want to partake in the benefits of AI. After all, becoming “data-driven” is viewed as a necessity for survival in the digital age. However, companies also want to avoid the negative consequences–whether real or perceived–that can result from using AI poorly in our litigious and cancel-loving culture.

“AI is the Wild West,” Andrew Burt, founder of AI law firm BNH.ai, told Datanami earlier this year. “Nobody knows how to manage risk. Everybody does it differently.”

With that said, there are several frameworks available that companies can use to help manage the risk of AI. Burt recommends the AI Risk Management Framework (RMF), which comes from the National Institute of Standards and Technology (NIST) and which was finalized earlier this year.

"When it is OK to give somebody more than somebody else"? asks AI expert Cathy O'Neil

The RMF helps companies think through how their AI works and what the potential negative consequences might be. It uses a “Map, Measure, Manage, and Govern” approach to understanding and ultimately mitigating risks of using AI in a variety of products.

While companies are worried about the legal risk of using AI, those worries are currently outweighed by the upside of using the tech, Burt says. “Companies are more excited than they are worried,” he says. “But as we’ve been saying for years, there’s a direct relationship between the value of an AI system and the risk it poses.”

Another AI risk management framework comes from Cathy O'Neil, CEO of O'Neil Risk Consulting & Algorithmic Auditing (ORCAA) and a 2018 Datanami Person to Watch. ORCAA has proposed a framework called Explainable Fairness.

The Explainable Fairness framework provides a way for organizations to not only test their algorithms for bias, but also to work through what happens when differences in outcomes are detected. For example, if a bank is determining eligibility for a student loan, what factors can be legitimately used to approve or reject the loan or charge a higher or lower interest rate?

Obviously, the bank must use data to answer those questions. But which pieces of data–that is, what factors reflecting the loan applicant–can they use? Which factors should they be legally allowed to use, and which factors should not be used? Answering those questions is not easy or straightforward, O’Neil says.

“That’s the whole point of this framework, is that those legitimate factors have to be legitimized,” O’Neil said during a discussion at Nvidia’s GPU Technology Conference (GTC) held last month. “What counts as legitimate is extremely contextual…When it is OK to give somebody more than somebody else?”
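
To make the idea of "differences in outcomes" concrete, here is a minimal Python sketch of the kind of check an organization might run. It is not ORCAA's method or tooling; the groups, the `credit_band` factor, the `approval_rates` helper, and the data are all hypothetical and made up for illustration. It compares loan approval rates between two groups, then repeats the comparison within each level of a candidate factor.

```python
from collections import defaultdict

# Made-up loan decisions, for illustration only (not real data).
# Each record is (group, credit_band, approved).
DECISIONS = [
    ("A", "high", True), ("A", "high", True), ("A", "high", True), ("A", "low", False),
    ("B", "high", True), ("B", "low", False), ("B", "low", False), ("B", "low", False),
]


def approval_rates(records, key):
    """Return the share of approved records for each value of key(record)."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for record in records:
        bucket = key(record)
        totals[bucket] += 1
        approved[bucket] += int(record[2])
    return {bucket: approved[bucket] / totals[bucket] for bucket in totals}


# Step 1: raw difference in outcomes between the two groups.
overall = approval_rates(DECISIONS, key=lambda r: r[0])
raw_gap = overall["A"] - overall["B"]

# Step 2: the same comparison within each credit band (the candidate factor).
by_band = approval_rates(DECISIONS, key=lambda r: (r[0], r[1]))
band_gaps = {
    band: by_band[("A", band)] - by_band[("B", band)]
    for band in ("high", "low")
}

print("raw gap:", raw_gap)
print("gap within each credit band:", band_gaps)
```

In this toy data, the gap between the groups disappears once applicants are compared within the same credit band. Whether credit band is a legitimate factor to rely on in the first place is the contextual judgment O’Neil describes, and no script can settle that.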

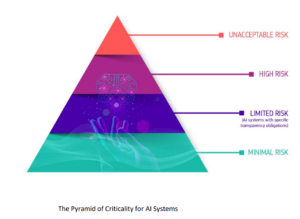

The European Union categorizes potential AI harms on the "Pyramid of Criticality"

Even without new AI laws in place, companies should start asking themselves how they can implement AI fairly and ethically to abide by existing laws, says Triveni Gandhi, the responsible AI lead at data analytics and AI software vendor Dataiku.

“People have to start thinking about, OK how do we take the law as it stands and apply it to AI use cases that are out there right now?” she says. “There are some regulations, but there’s also a lot of people who are thinking about what are the ethical and value oriented ways we want to build AI. And those are actually questions that companies are starting to ask themselves, even without overarching regulations.”

Gandhi encourages the use of frameworks to help companies get started on their ethical AI journeys. In addition to the NIST RMF framework, there is also the

“There are any number of frameworks and ways of thinking that are out there,” she says. “So you just need to pick one that’s most applicable to you and start working with it.”

Gandhi encourages companies to start exploring the frameworks and becoming familiar with the different questions, because that will set them on their own ethical AI journey. The worst thing they can do is delay getting started in search of the “perfect framework.”

“The roadblock comes because people expect perfection right away,” she says. “You’re never going to start with a perfect product or pipeline or process. But if you start, at least that’s better than not having anything.”

AI regulation in the United States is likely to be a long and winding road with an uncertain destination. But the European Union is already moving forward with its own regulation, termed the AI Act, which could go into effect later this year.

The AI Act would create a common regulatory and legal framework for the use of AI that impacts EU residents, including how it’s developed, what companies can use it for, and the legal consequences of failing to adhere to the requirements. The law will likely require companies to receive approval before adopting AI for some use cases, and outlaw certain other AI uses deemed too risky.

A global AI regulation would be desirable, says Fractal's Sray Agarwal (Zia-Liu/Shutterstock)

If US states follow Europe’s lead on AI, as California did when it modeled the California Consumer Privacy Act (CCPA) on the EU’s General Data Protection Regulation (GDPR), then it’s likely that the AI Act could be a model for American AI regulation.

That could be a good thing, according to Sray Agarwal, a data scientist and principal consultant at Fractal, who says we need global consensus on AI ethics.

“You never want a privacy law or any kind of ethical law in the US to be opposite of any other country which it trades with,” says Agarwal, who has worked as a pro bono expert for the United Nations on topics of ethical AI. “It has to have a global consensus. So forums like OECD, World Economic Forum, United Nations and many other such international body needs to sit together and come out with consensus or let’s say global guidelines which needs to be followed by everyone.”

But Agarwal isn’t holding his breath that we’re going to have that consensus anytime soon. “We are not there yet. We are not anywhere [near] responsible AI,” he says. “We have not even implemented it holistically and comprehensively in different industries in relatively simple machine learning models. So talking about implementing it in ChatGPT is a challenging question.”

However, the lack of regulation should not prevent companies from moving forward with their own ethical practices, Agarwal says. In lieu of government or industry regulation, self-regulation remains the next-best option.

Th