Nvidia’s AI Safety Tool Protects Against Bot Hallucinations

April 25, 2023 by Agam Shah

Early large-language models have proven to be triple-threat AIs – Bing and ChatGPT are entertaining, and can generate artificial love, hate, and even dance.

But in the process of testing large-language models, one thing became obvious very quickly: AI models can make up stuff, and conversations can veer off track easily.

The risks posed by LLMs prompted tech leaders, including Elon Musk and Steve Wozniak, to sign an open letter calling for a pause on giant AI experiments.

The propensity for chatbots to hallucinate is a problem that OpenAI, Microsoft, and Google are trying to solve. The fundamental effort is around building a mechanism so artificial intelligence systems can be trusted, and to try and drive down the rate at which an AI goes off on a tangent and creates illusions.

But Nvidia on Tuesday took a practical approach to the problem by releasing a programmable tool that can act as an intermediary so large-language models stay on track and provide relevant answers to queries.

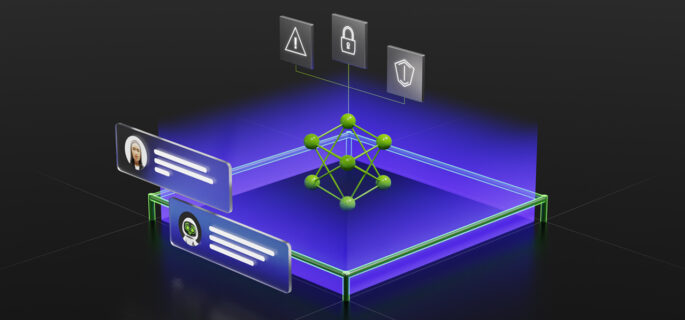

The open-source tool, called NeMo Guardrails, monitors the conversation between a user and the LLM, and that helps keep the conversation on track.

"It tracks the state of the conversation – who said what, what are we talking about now, what were we talking about before – and it provides a programmable way for developers to implement guardrails," said Jonathan Cohen, vice president of applied research at Nvidia, in a press briefing.

The guardrails can act as a form of enforcement so conversations remain within the context of the topic. The tool quickly implements AI safety rules for organizations looking to save time and extract more productivity from AI systems, Cohen said.

There are no AI safety plug-in tools available in the market today, and Cohen indicated that something is better than nothing.

For example, NeMo Guardrails can be plugged into a customer service chatbot to only answer questions about a company's products, and decline conversations about competitive products. The guardrail can also steer a conversation back to the company's products.

A guardrail can be programmed to include safety response systems for fact-checking routines, and to detect and mitigate hallucinations.

The tool can also be used to detect jailbreaks when automating coding, which helps safely execute code. For example, it can check if an API conforms to a company's security model. "We can place it on an allow list and allow an LLM to only interact with APIs on this list," Cohen said.
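The allow-list idea Cohen describes can be illustrated with a small, generic sketch. This is not NeMo Guardrails code: the host names, `ALLOWED_API_HOSTS`, `is_api_allowed`, and `call_api_for_llm` are all hypothetical, and the actual network call is left out.

```python
from urllib.parse import urlparse

# Hypothetical allow list; these hosts are placeholders, not real endpoints.
ALLOWED_API_HOSTS = {"api.internal.example.com", "billing.example.com"}


def is_api_allowed(url: str, allowed_hosts: set[str] = ALLOWED_API_HOSTS) -> bool:
    """Return True only for HTTPS URLs whose host is on the allow list."""
    parsed = urlparse(url)
    return parsed.scheme == "https" and parsed.hostname in allowed_hosts


def call_api_for_llm(url: str) -> str:
    """Gate an API call requested by an LLM; the real request is omitted."""
    if not is_api_allowed(url):
        return f"blocked: {url} is not on the allow list"
    return f"allowed: {url}"
```

In this sketch the check sits outside the model, so a jailbroken prompt can change what the LLM asks for but not what the application is willing to call.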

Enforcing guardrails is especially important in specialized domains such as finance and health care, which are highly regulated. A guardrail can include code to recognize contextual queries – for example, it can tell users something like “I am a healthcare chatbot, but this is not a healthcare question. Please reframe your question.”

The tool is being released on GitHub and will be available with Nvidia's software offerings.

"We think that the problem of AI safety and guardrails is one that the community needs to work on together and so we've decided to make our tool kit open source. It is designed to interoperate with all tool kits and all major language models today," Cohen said.

NeMo Guardrails sits in between humans and large-language models. Once the user submits a query at the prompt, it goes through the guardrail, which checks it for context. The query is then passed on to open-source tools such as LangChain, which is used to develop applications that harness the capability of language models.

After the LLM generates a response, it goes back to LangChain, and is evaluated by NeMo Guardrails before presenting the answer to a user. If the response is not good, the guardrail can send it back to the original LLM or a different large-language model to regenerate an answer. The response needs to pass the guardrail’s checks before it can be presented to a user.
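The request and response flow described above can be sketched in plain Python. This is a simplified illustration under stated assumptions, not the toolkit's implementation: the LangChain layer is omitted, the models are stand-in functions, and `guarded_generate`, `REFUSAL`, and the two keyword checks are hypothetical names.

```python
from typing import Callable, Sequence

Check = Callable[[str], bool]   # returns True when the text passes the guardrail
Model = Callable[[str], str]    # stand-in for a call to a language model

REFUSAL = "Sorry, I can't help with that request."


def guarded_generate(
    query: str,
    input_check: Check,
    output_check: Check,
    models: Sequence[Model],
    max_attempts: int = 2,
) -> str:
    """Check the query, then retry each model until a response passes."""
    if not input_check(query):
        return REFUSAL
    for model in models:  # original model first, then any fallback models
        for _ in range(max_attempts):
            response = model(query)
            if output_check(response):
                return response
    return REFUSAL  # nothing passed the output check


# Toy checks and models, for illustration only.
def on_topic(query: str) -> bool:
    return "product" in query.lower()


def no_competitor(response: str) -> bool:
    return "competitor" not in response.lower()


def primary(query: str) -> str:
    return "Our competitor does this better."


def fallback(query: str) -> str:
    return "Our product supports that feature."


print(guarded_generate("Tell me about your product", on_topic, no_competitor, [primary, fallback]))
# prints "Our product supports that feature."
```

Here the first model's answer fails the output check on both attempts, so the query is regenerated with the fallback model, and only a response that passes the check reaches the user.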

"The reason we made it a programmable system is precisely so that you have total control over what this logic is," Cohen told EnterpriseAI.

The toolkit is a complete system, available on GitHub, that includes the runtime and the APIs to run it. Nvidia has developed a language called Colang that controls the behavior of this runtime and can be accessed through Python.

“The NeMo Guardrails system is a client Python library, you can take the library and do what you want with it,” Cohen said, later adding that “it includes inside it an interpreter for the Colang language.”
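A rough sketch of what driving Colang from Python might look like follows. It assumes the `RailsConfig.from_content` and `LLMRails` entry points described in the project's documentation; exact signatures and Colang syntax may differ between versions. The flow names, sample utterances, and model settings are illustrative placeholders, and running `build_rails` requires the `nemoguardrails` package plus credentials for the configured LLM provider.

```python
# Colang-style definitions: sample user utterances, a canned bot reply,
# and a flow linking them. All names and messages are illustrative.
COLANG_CONTENT = """
define user ask about competitors
  "What do you think of your competitor's product?"
  "Is the other company's product better?"

define bot decline competitor question
  "I can only answer questions about our own products."

define flow competitors
  user ask about competitors
  bot decline competitor question
"""

# Placeholder model settings (an assumption); substitute a supported model.
YAML_CONTENT = """
models:
  - type: main
    engine: openai
    model: gpt-3.5-turbo-instruct
"""


def build_rails():
    """Build the rails object; needs nemoguardrails installed and LLM credentials."""
    from nemoguardrails import LLMRails, RailsConfig  # imported lazily

    config = RailsConfig.from_content(
        colang_content=COLANG_CONTENT, yaml_content=YAML_CONTENT
    )
    return LLMRails(config)


def ask(rails, text: str) -> str:
    """Send one user message through the rails and return the bot's reply text."""
    reply = rails.generate(messages=[{"role": "user", "content": text}])
    return reply["content"]
```

A caller would then run something like `ask(build_rails(), "Is the other company's product better?")`, with the flow above steering the bot toward the canned refusal instead of an open-ended answer.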

The Colang runtime executes on CPUs, so the NeMo Guardrails toolkit is not tied to CUDA, Nvidia’s proprietary parallel-programming framework. Most of Nvidia’s CUDA codebase can execute only on the company’s GPUs.

"The hardware requirements are going to depend on the service you're calling, or if it's a language model that you're running locally. It is whatever LangChain supports, we work with automatically," Cohen said.

Nvidia's toolkit is being published as debates around AI regulation and safety heat up in Washington DC and the tech industry. There is no known standard or implementation around AI safety, and Nvidia is open-sourcing the toolkit to get the conversation going.

"There's an emerging open-source community like LangChain. It is emerging as a very popular API for interacting with all these things. That is one of the reasons we built our system on top of LangChain," Cohen said.

The fluency of tools like ChatGPT is quite stunning, but it is also a wakeup call on building resilient systems and trustworthy AI, said Kathleen Fisher, who is a director at DARPA, during Nvidia's GPU Technology Conference last month.

"You could build kind of belts and suspenders or other things on top that might catch the problems and drive the occurrence rate down… I think we are going to see really fast movement in this space and it will be interesting and terrifying at the same time," Fisher said.

Other companies are approaching AI safety differently. Microsoft previously tried to curb hallucinatory answers by limiting the number of questions users could ask its Bing chatbot, which is based on OpenAI's GPT-4.

OpenAI earlier this month said GPT-4 is 40% more likely to produce factual responses than GPT-3.5. OpenAI is advocating better training, more data, responsible governance, and industry cooperation to help improve AI safety.