In a blockbuster announcement at its annual GTC conference, NVIDIA CEO Jensen Huang unveiled the company’s next-generation Blackwell GPU architecture, promising massive performance gains to fuel the AI revolution.

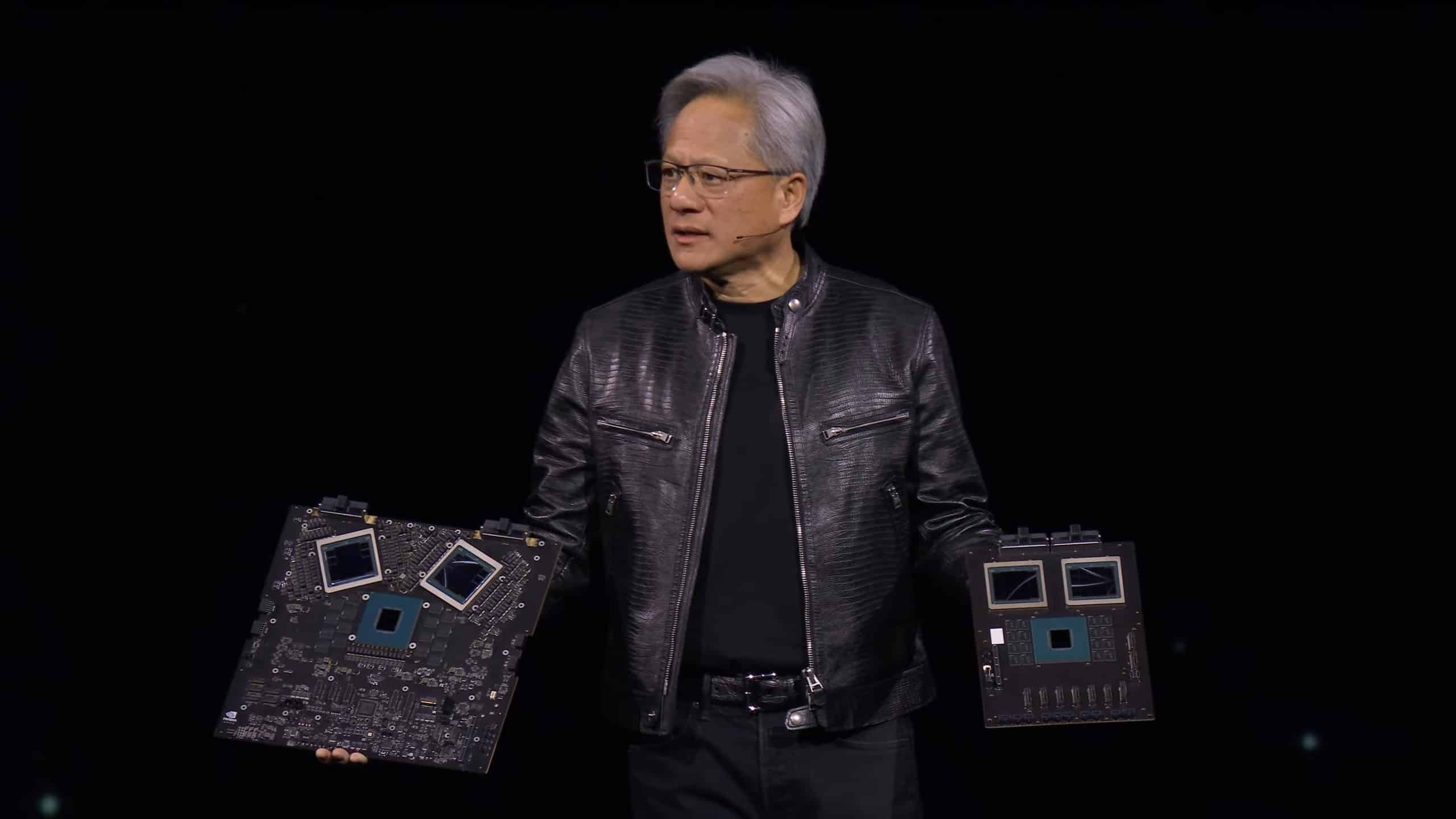

The highlight is the flagship B200 GPU, a behemoth packing 208 billion transistors across two cutting-edge chiplets connected by a blazing 10 TB/s link. Huang proclaimed it “the world’s most advanced GPU in production.”

Blackwell introduces several groundbreaking technologies. It features a second-gen Transformer Engine to double AI model sizes with new 4-bit precision. The 5th-gen NVLink interconnect enables up to 576 GPUs to work seamlessly on trillion-parameter models. An AI reliability engine maximises supercomputer uptime for weeks-long training runs.

“When we were told Blackwell’s ambitions were beyond the limits of physics, the engineers said ‘so what?’” Huang said, showcasing a liquid-cooled B200 prototype. “This is what happened.”

Compared to its predecessor Hopper, the B200 promises 2.5x faster FP8 AI performance per GPU, double the FP16 throughput, support for the new FP6 format, and up to 30x faster performance for large language models. Major tech giants like Amazon, Google, Microsoft, and Tesla have already committed to adopting Blackwell.

Huang said training a GPT model with 1.8 trillion parameters [GPT-4] typically takes three to five months on 25,000 Ampere-generation GPUs. With the Hopper architecture, the same job would require around 8,000 GPUs, consuming 15 megawatts of power and taking about 90 days. In contrast, Blackwell would need just 2,000 GPUs and only four megawatts over the same 90 days.
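To put those figures side by side, here is a rough back-of-the-envelope sketch in Python. It assumes the quoted power draw is constant for the full 90 days, which the keynote numbers do not guarantee; the function and variable names are purely illustrative.

```python
# Back-of-the-envelope comparison using only the figures quoted above.
# Assumption: power draw is constant over the whole training run.
HOURS_PER_DAY = 24


def training_energy_mwh(power_mw: float, days: float) -> float:
    """Energy in megawatt-hours for a run at constant power."""
    return power_mw * days * HOURS_PER_DAY


# Figures as quoted from the keynote.
hopper = {"gpus": 8000, "power_mw": 15.0, "days": 90}
blackwell = {"gpus": 2000, "power_mw": 4.0, "days": 90}

hopper_energy = training_energy_mwh(hopper["power_mw"], hopper["days"])
blackwell_energy = training_energy_mwh(blackwell["power_mw"], blackwell["days"])

gpu_ratio = hopper["gpus"] / blackwell["gpus"]
energy_ratio = hopper_energy / blackwell_energy

print(f"Hopper:    {hopper_energy:,.0f} MWh")
print(f"Blackwell: {blackwell_energy:,.0f} MWh")
print(f"GPU count ratio: {gpu_ratio:.2f}x, energy ratio: {energy_ratio:.2f}x")
```

On these numbers, the Blackwell run would use a quarter of the GPUs and a little over a quarter of the energy for the same 90-day job.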

He said that NVIDIA aims to reduce computing costs and energy consumption, thereby facilitating the scaling up of computations necessary for training next-generation models.

To showcase Blackwell’s scale, NVIDIA unveiled the DGX SuperPOD, a next-gen AI supercomputer with up to 576 Blackwell GPUs and 11.5 exaflops of AI compute. Each DGX GB200 system packs 72 Blackwell GPUs coherently linked to 36 Arm-based Grace CPUs.
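As a quick sanity check on the SuperPOD headline figures, the implied per-GPU compute can be worked out as below. This is only a sketch: it assumes the 11.5 exaflops is spread evenly across all 576 GPUs, and the article does not state which numeric precision the figure refers to.

```python
# Implied per-GPU AI compute from the SuperPOD headline figures.
# Assumption: the total is divided evenly across all GPUs.
TOTAL_EXAFLOPS = 11.5
GPU_COUNT = 576
PETAFLOPS_PER_EXAFLOP = 1000

per_gpu_petaflops = TOTAL_EXAFLOPS * PETAFLOPS_PER_EXAFLOP / GPU_COUNT

print(f"Roughly {per_gpu_petaflops:.1f} petaflops per GPU")
```

That works out to about 20 petaflops per GPU.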

While focused on AI and data centres initially, Blackwell’s innovations are expected to benefit gaming GPUs too.

With Blackwell raising the bar, NVIDIA has clearly doubled down on its lead in the white-hot AI acceleration market. The race for the next AI breakthrough is on.

The post NVIDIA Introduces Very Big GPU, BLACKWELL appeared first on Analytics India Magazine.