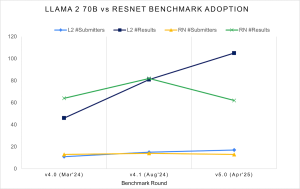

If there were any doubts that generative AI is reshaping the inference landscape, the latest MLPerf results should put them to rest. MLCommons announced new results today for its industry-standard MLPerf Inference v5.0 benchmark suite. For the first time, large language models overtook traditional image classification as the most widely submitted workloads, with Meta’s Llama 2 70B displacing the long-dominant ResNet-50 benchmark.

The shift marks a new era in benchmarking, where performance, latency, and scale must now account for the demands of agentic reasoning and massive models like Llama 3.1 405B, the largest ever benchmarked by MLPerf.

Industry leaders have responded to the generative AI boom with new hardware and software optimized for distributed inference, FP4 precision, and low-latency performance. That focus has driven dramatic gains, with the latest MLPerf results showing how quickly the community is advancing on both fronts.

A Benchmarking Framework That’s Evolving With the Field

The MLPerf Inference working group designs its benchmarks to evaluate machine learning performance in a way that is architecture-neutral, reproducible, and representative of real-world workloads, a task that is becoming more difficult as models rapidly evolve. To stay current, MLCommons has accelerated its pace of adding new benchmarks, covering both datacenter and edge deployments across multiple scenarios, such as server (latency-constrained, like chatbots), offline (throughput-focused), and streaming modes.

To ensure fair comparisons, each MLPerf benchmark includes a detailed specification that defines the exact model version, input data, pre-processing pipeline, and accuracy requirements. Submissions fall into either a “closed” division that is strictly controlled to allow apples-to-apples comparisons, or an “open” division, which allows algorithmic and architectural flexibility but is not directly comparable. The closed division allows companies to compete on systems and software optimizations alone, while the open division serves as a proving ground for novel architectures and new model designs.

In version 5.0, most activity remained in the closed datacenter category, with 23 organizations submitting nearly 18,000 results. Submitters for all categories include AMD, ASUSTeK, Broadcom, cTuning Foundation, Cisco, CoreWeave, Dell Technologies, FlexAI, Fujitsu, GATEOverflow, Giga Computing, Google, HPE, Intel, KRAI, Lambda, Lenovo, MangoBoost, Nvidia, Oracle, Quanta Cloud Technology, Supermicro, and Sustainable Metal Cloud.

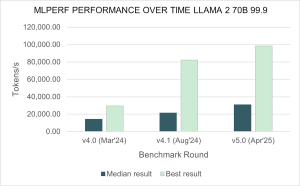

The performance results for Llama 2 70B have skyrocketed since a year ago: the median submitted score has doubled, and the best score is 3.3 times faster compared to Inference v4.0. (Source: MLCommons)

Over the last year, submissions to the Llama 2 70B benchmark test, which implements a large generative AI inference workload based on a widely referenced open-source model, have increased 2.5x. With the v5.0 release, Llama 2 70B has now supplanted ResNet-50 as the test with the highest submission rate. (Source: MLCommons)

One of the clearest signs of performance evolution in this round came from the shift to lower-precision computation formats like FP4, a four-bit floating-point format that dramatically reduces the memory and compute overhead of inference. MLCommons reported that median performance scores for Llama 2 70B doubled compared to last year, while best-case results improved by 3.3x. These gains were driven in part by growing support for FP4 across systems. MLPerf’s tight accuracy constraints serve as a real-world filter: any performance gains from lower precision must still meet established accuracy thresholds. The results suggest that FP4 is no longer just experimental, but is becoming a viable performance lever in production inference.
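To make the precision trade-off concrete, here is a minimal sketch of rounding a block of values to a four-bit float grid. The article does not say which FP4 layout submitters used. The E2M1 magnitude set below, the single shared scale per block, and the function name `quantize_fp4` are all assumptions made for illustration, not a description of any vendor's implementation.

```python
# Magnitudes representable by an E2M1 four-bit float (1 sign, 2 exponent, 1 mantissa bit).
# Assumed layout: the article only says "four-bit floating-point format".
FP4_E2M1_MAGNITUDES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)


def quantize_fp4(values):
    """Round values to the nearest scaled FP4 magnitude, using one scale for the block.

    Returns (dequantized_values, scale). The shared scale is an assumed scheme.
    """
    peak = max((abs(v) for v in values), default=0.0)
    if peak == 0.0:
        return [0.0] * len(values), 1.0
    # Map the largest magnitude in the block onto the largest FP4 magnitude (6.0).
    scale = peak / FP4_E2M1_MAGNITUDES[-1]
    dequantized = []
    for v in values:
        target = abs(v) / scale
        nearest = min(FP4_E2M1_MAGNITUDES, key=lambda m: abs(m - target))
        dequantized.append((nearest if v >= 0 else -nearest) * scale)
    return dequantized, scale


if __name__ == "__main__":
    approx, scale = quantize_fp4([6.0, -3.0, 0.4, 1.2])
    print(approx, scale)
```

With only eight magnitudes per sign, values such as 0.4 and 1.2 land on neighboring grid points, which is why an accuracy threshold acts as a check on how aggressively precision can be reduced.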

“This demonstrates the capability of this format,” said Miro Hodak, co-chair of the MLPerf Inference working group, in a press briefing. “Even when using much lower numerical formats, systems are still able to produce results that meet our accuracy and quality targets.”

(Supply: MLCommons)

New Workloads Reflect Expanding Scope of Inference

To keep pace with the rapid evolution of AI workloads, MLCommons introduced four new benchmarks in MLPerf Inference v5.0, the largest expansion in a single round to date. At the center is Llama 3.1 405B, the most ambitious benchmark ever included in MLPerf. With 405 billion parameters and support for up to 128,000 tokens of context (compared to 4,096 in Llama 2 70B), the benchmark challenges systems with a mix of general question-answering, mathematical reasoning, and code generation.

“This is our most ambitious inference benchmark to date,” Hodak stated in a release. “It reflects the industry trend toward larger models, which can increase accuracy and support a broader set of tasks. It’s a more difficult and time-consuming test, but organizations are trying to deploy real-world models of this order of magnitude. Trusted, relevant benchmark results are critical to help them make better decisions on the best way to provision them.”

(Supply: MLCommons)

The suite also adds a new variant of Llama 2 70B called Llama 2 70B Interactive, which is designed to model real-world applications where fast response is critical, like chatbots and agentic AI systems. In this scenario, systems are evaluated not just on throughput but also on their ability to deliver the first token quickly (time to first token) and sustain high token delivery speeds (time per output token), both critical to user experience.
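The two responsiveness metrics named above can be sketched from per-token timestamps. This is a minimal illustration using a common definition of each metric. The function name `latency_metrics` and its inputs are hypothetical, and the article does not give MLPerf's exact measurement rules or thresholds.

```python
def latency_metrics(request_sent, token_times):
    """Return (time_to_first_token, time_per_output_token) in seconds.

    request_sent: timestamp when the prompt was submitted.
    token_times: timestamps at which each output token arrived, in order.
    """
    if not token_times:
        raise ValueError("at least one output token is required")
    # Time to first token: delay between the request and the first token.
    ttft = token_times[0] - request_sent
    # Time per output token: average gap between tokens after the first one.
    if len(token_times) == 1:
        tpot = 0.0
    else:
        tpot = (token_times[-1] - token_times[0]) / (len(token_times) - 1)
    return ttft, tpot


if __name__ == "__main__":
    # Hypothetical timings: first token after 0.5 s, then one token every 0.25 s.
    print(latency_metrics(0.0, [0.5, 0.75, 1.0, 1.25]))
```

Separating the two numbers matters because a system can start answering quickly yet stream slowly, or the reverse, and a single throughput figure hides both cases.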

“A critical measure of the performance of a query system or a chatbot is whether it feels responsive to a person interacting with it. How quickly does it start to respond to a prompt, and at what pace does it deliver its entire response?” said Mitchelle Rasquinha, MLPerf Inference working group co-chair. “By enforcing tighter requirements for responsiveness, this interactive version of the Llama 2 70B test offers new insights into the performance of LLMs in real-world scenarios.”

Beyond large language models, MLPerf 5.0 also introduced two new benchmarks to capture emerging use cases. The RGAT benchmark, based on a large graph neural network (GNN) model trained on the Illinois Graph Benchmark Heterogeneous dataset, evaluates how well systems can handle graph-based reasoning tasks. These workloads are increasingly relevant in fraud detection, recommendation systems, and drug discovery.

The Automotive PointPainting benchmark rounds out the new additions, targeting edge systems used in autonomous vehicles. It simulates a complex sensor fusion scenario that combines camera and LiDAR inputs for 3D object detection. While MLCommons continues to work on a broader automotive suite, this benchmark serves as a proxy for safety-critical vision tasks in the field.

Nvidia Showcases Blackwell, Agentic-Ready Infrastructure

Nvidia, which has consistently led MLPerf submissions, showcased early results from its new Blackwell architecture. The GB200 NVL72 system, which connects 72 NVIDIA Blackwell GPUs to act as a single, massive GPU, delivered up to 30x higher throughput on the Llama 3.1 405B benchmark over the NVIDIA H200 NVL8 submission this round. In an unverified submission, Nvidia said its full-rack GB200 NVL72 system achieved nearly 900,000 tokens per second running Llama 2 70B, a number no other system has come close to.

Hopper, now three years old but still widely available, showed gains of 60% over the past year on some benchmarks, a reminder that software optimization continues to be a major driver of performance. On the Llama 2 70B benchmark, first introduced a year ago in MLPerf Inference v4.0, H100 GPU throughput has increased by 1.5x. The H200 GPU, based on the same Hopper GPU architecture with larger and faster GPU memory, extends that increase to 1.6x. Hopper also ran every benchmark, including the newly added Llama 3.1 405B, Llama 2 70B Interactive, and graph neural network tests, the company said in a blog post.

Nvidia used its MLPerf results as a launchpad to talk about what it sees as the next frontier: reasoning AI. In this emerging category, generative systems don’t just generate, but also plan, decide, and execute multi-step tasks using chains of prompts, large context windows, and sometimes multiple models working in coordination, called mixture-of-experts (MoE). Large models like Llama 3.1 (with support for input sequence lengths up to 120,000 tokens) signal the arrival of what Nvidia calls “long thinking time.” The models must process, reason through, and synthesize far more information than previous generations.

These capabilities come with steep infrastructure requirements. As models grow in size and complexity, inference is becoming a full-stack, multi-node challenge that spans GPUs, CPUs, networking, and orchestration layers: “Increasingly, inference is not a single GPU problem. In some cases, it's not even a single server problem. It’s a full stack multi-node challenge,” said Dave Salvator, director of accelerated computing products in the Accelerated Computing Group at Nvidia, in a press briefing.

A key optimization Nvidia highlighted is disaggregated serving, or the separation of the computationally intense “prefill” stage from the memory-bound “decode” stage of inference. The company also pointed to its FP4 work as a cornerstone of its strategy going forward. In its first FP4 implementation of the DeepSeek-V3 reasoning model, Nvidia claimed more than 5x speedups with under 1% accuracy loss.
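The prefill/decode split can be pictured with a toy scheduler. The sketch below is purely structural and is not Nvidia's implementation: the `Request` class, the two queues, and the placeholder "model" that emits the cache length as the next token are all hypothetical, chosen only to show one pool handling whole prompts while a second pool generates one token at a time from the handed-off cache.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Request:
    prompt_tokens: list
    max_new_tokens: int
    kv_cache: list = field(default_factory=list)
    output: list = field(default_factory=list)


def prefill(req):
    # Compute-heavy stage: the whole prompt is processed in one pass to build the cache.
    req.kv_cache = list(req.prompt_tokens)


def decode_step(req):
    # Memory-bound stage: one token per step, each step reading the full cache.
    next_token = len(req.kv_cache)  # placeholder for a real model's prediction
    req.kv_cache.append(next_token)
    req.output.append(next_token)


def serve(requests):
    prefill_queue = deque(requests)  # stands in for a prefill worker pool
    decode_queue = deque()           # stands in for a separate decode worker pool
    while prefill_queue:
        req = prefill_queue.popleft()
        prefill(req)
        if req.max_new_tokens > 0:
            decode_queue.append(req)  # hand-off: the cache moves to the decode pool
    while decode_queue:
        req = decode_queue.popleft()
        decode_step(req)
        if len(req.output) < req.max_new_tokens:
            decode_queue.append(req)  # round-robin across in-flight requests
    return requests


if __name__ == "__main__":
    for done in serve([Request([1, 2, 3], 2), Request([9], 1)]):
        print(done.output)
```

In a real deployment the two pools would run on different hardware and the cache hand-off would cross a network, which is part of why the article describes inference as a multi-node problem.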

Momentum Builds Across the Ecosystem

While Nvidia captured much of the spotlight with its Blackwell benchmarks, it wasn’t the only company breaking new ground in LLM inference and system optimization. MLPerf Inference v5.0 also saw competitive submissions from AMD, Intel, Google, and Broadcom, as well as smaller firms and startups looking to carve out a role in the generative AI stack.

AMD submitted results using its new Instinct MI325X accelerators, demonstrating competitive performance in multiple LLM benchmarks. The MI325X is the first in AMD’s updated MI300X GPU series to be benchmarked in MLPerf and offers support for FP4. Several partners including Supermicro, ASUSTeK, Giga Computing, and MangoBoost submitted results using AMD hardware. Partner submissions using MI325X matched AMD’s own results on Llama 2 70B, reinforcing the maturity and reliability of its hardware and software stack. AMD also benchmarked Stable Diffusion XL on the MI325X, using GPU partitioning techniques to improve generative inference performance.

Intel continued to position its sixth-generation Xeon CPUs as viable AI inference engines, particularly for graph neural networks (GNNs) and recommendation workloads. Across six benchmarks, Xeon 6 CPUs delivered a 1.9x improvement over the previous generation, building on a trend that has seen up to 15x gains since Intel first entered MLPerf in 2021. The company showed performance gains in workloads ranging from classical machine learning to mid-sized deep learning models and graph-based reasoning. Intel was once again the only vendor to submit standalone server CPU results, while also serving as the host processor in many accelerated systems. Submissions were supported in partnership with Cisco, Dell Technologies, Quanta, and Supermicro.

Google Cloud submitted 15 results in Inference v5.0, highlighting advances across both its GPU and TPU-based infrastructure. For the first time, Google submitted results from its A3 Ultra VMs, powered by NVIDIA H200 GPUs, and A4 VMs, featuring NVIDIA B200 GPUs in preview. A3 Ultra delivered strong performance across large language models, mixture-of-experts, and vision workloads, benefiting from its high memory bandwidth and GPU interconnect speeds. A4 results also placed well among comparable submissions. Google also returned with a second round of results for its sixth-generation TPU, Trillium, which delivered a 3.5x throughput improvement on Stable Diffusion XL compared to TPU v5e.

Broadcom stood out for its leadership on the newly introduced RGAT benchmark, a graph neural network task designed to reflect real-world scientific applications like protein folding and materials discovery. Broadcom said its accelerator architecture was particularly well suited to sparse and structured data operations, and it used the opportunity to position its AI strategy more squarely within the “AI for science” space.

This round also saw a surge of participation from smaller vendors and startups, including MangoBoost, Lambda, FlexAI, and GATEOverflow, several of which were new to the MLPerf benchmark process. Their submissions helped validate that generative AI inference is not the exclusive domain of the largest hyperscalers. MangoBoost and Lambda, in particular, submitted results showing how tightly integrated hardware-software stacks can deliver low-latency performance on open-source models, even outside of proprietary cloud environments.

“We would like to welcome the five first-time submitters to the Inference benchmark: CoreWeave, FlexAI, GATEOverflow, Lambda, and MangoBoost,” said David Kanter, head of MLPerf at MLCommons. “The continuing growth in the community of submitters is a testament to the importance of accurate and trustworthy performance metrics to the AI community. I would also like to highlight Fujitsu’s broad set of datacenter power benchmark submissions and GATEOverflow’s edge power submissions in this round, which reminds us that energy efficiency in AI systems is an increasingly critical issue in need of accurate data to guide decision-making.”

The latest MLPerf Inference v5.0 results reflect how the industry is redefining AI workloads. Across these results, one of the most striking takeaways was the role of software. Many vendors posted improved scores compared to MLPerf 4.0 even on the same hardware, highlighting how much optimization, in the form of compilers, runtime libraries, and quantization techniques, continues to move the bar forward.

Inference today means managing complexity across hardware and software stacks, measuring not only throughput and latency but adaptability for reasoning models that plan, reason, and generate in real time. With new formats like FP4 gaining traction and agentic AI demanding larger context windows and more orchestration, the benchmark is becoming as much about infrastructure design as it is about model execution. As MLCommons prepares for its next round, the pressure will be on vendors not just to perform, but to keep pace with a generative era that is reshaping every layer of the inference stack.

To view the full MLPerf Inference v5.0 results, visit the Datacenter and Edge benchmark results pages.

Below are vendor statements from the MLPerf submitters (lightly edited).

AMD

AMD is pleased to announce strong MLPerf Inference v5.0 results with the first-ever AMD Instinct MI325X submission and the first multi-node MI300X submission by a partner. This round highlights investment from AMD in AI scalability, performance, software advancements and open-source strategy, while demonstrating strong industry adoption through multiple partner submissions.

For the first time, multiple partners submitted MLPerf results using AMD Instinct solutions: Supermicro (SMC), ASUSTeK, and Giga Computing with MI325X, and MangoBoost with MI300X. MI325X partner submissions for Llama 2 70B achieved performance comparable to AMD’s own results, reinforcing the consistency and reliability of our GPUs across different environments.

AMD also expanded its MLPerf benchmarks by submitting Stable Diffusion XL (SDXL) with MI325X, demonstrating competitive performance in generative AI workloads. Innovative GPU partitioning techniques played a key role in optimizing SDXL inference performance.

MangoBoost achieved the first-ever multi-node MLPerf submission using AMD Instinct GPUs, leveraging four nodes of MI300X GPUs. This milestone demonstrates the scalability and efficiency of AMD Instinct for large-scale AI workloads. Additionally, it demonstrated significant scaling from an 8-GPU MI300X submission last round to a four-node, 32-GPU MI300X submission this round, further reinforcing the robustness of AMD solutions in multi-node deployments.

The transition from MI300X to MI325X delivered significant performance improvement in Llama 2 70B inference, enabled by rapid hardware and software innovations.

Broadcom

Broadcom is pioneering VMware Private AI as an architectural approach that balances the business gains from AI and ML with the privacy and compliance needs of organizations. Built on top of the VMware Cloud Foundation (VCF) private cloud platform, this approach ensures privacy and control of data, choice of open-source and commercial AI solutions, optimal cost, performance, compliance, and best-in-class automation and load balancing.

Broadcom brings the power of virtualized NVIDIA GPUs to the VCF private cloud platform to simplify management of AI accelerated data centers and enable efficient application development and execution for demanding AI and ML workloads. VMware software supports various hardware vendors, facilitating scalable deployments.

Broadcom partnered with NVIDIA, Supermicro, and Dell Technologies to showcase virtualization's benefits, achieving impressive MLPerf Inference v5.0 results. We demonstrated near bare-metal performance across various AI domains: computer vision, medical imaging, and natural language processing using a six billion parameter GPT-J language model. We also achieved outstanding results with the Mixtral-8x7B 56 billion parameter large language model (LLM). The graph below compares normalized virtual performance with bare-metal performance, showing minimal overhead from vSphere 8.0.3 with NVIDIA virtualized GPUs. Refer to the official MLCommons Inference 5.0 results for the raw comparison of queries per second or tokens per second.

We ran MLPerf Inference v5.0 on eight virtualized NVIDIA SXM H100 80GB GPUs on Supermicro GPU SuperServer SYS-821GE-TNRT and Dell PowerEdge XE9680. These VMs used only a fraction of the available resources: for example, just 25% of CPU cores, leaving 75% for other workloads. This efficient utilization maximizes hardware investment, allowing concurrent execution of other applications. This translates to significant cost savings on AI/ML infrastructure while leveraging vSphere's robust data center management. Enterprises now gain both high-performance GPUs and vSphere's operational efficiencies.

cTuning

The cTuning Foundation is a non-profit organization dedicated to advancing open-source, collaborative, and reproducible research in computer systems and machine learning. It develops tools and methodologies to automate performance optimization, facilitate experiment sharing, and improve reproducibility in research workflows.

cTuning leads the development of the open Collective Knowledge platform, powered by the MLCommons CM workflow automation framework with MLPerf automations. This educational initiative helps users learn how to run AI, ML, and other emerging workloads in the most efficient and cost-effective way across diverse models, datasets, software, and hardware, based on the MLPerf methodology and tools.

In this submission round, we are testing the Collective Knowledge platform with a new prototype of the Collective Mind framework (CMX) and MLPerf automations, developed in collaboration with ACM, MLCommons, and our volunteers and contributors in open optimization challenges.

To learn more, please visit:

- https://github.com/mlcommons/ck

- https://access.cKnowledge.org

- https://cTuning.org/ae

Cisco Systems Inc.

With generative AI poised to significantly boost global economic output, Cisco is helping to simplify the challenges of preparing organizations’ infrastructure for AI implementation. The exponential growth of AI is transforming data center requirements, driving demand for scalable, accelerated computing infrastructure.

To this end, Cisco recently introduced the Cisco UCS C885A M8, a high-density GPU server designed for demanding AI workloads, offering powerful performance for model training, deep learning, and inference. Built on the NVIDIA HGX platform, it can scale out to deliver clusters of computing power that can bring your most ambitious AI projects to life. Each server includes NVIDIA NICs or SuperNICs to accelerate AI networking performance, as well as NVIDIA BlueField-3 DPUs to accelerate GPU access to data and enable robust, zero-trust security. The new Cisco UCS C885A M8 is Cisco’s first entry into its dedicated AI server portfolio and its first eight-way accelerated computing system built on the NVIDIA HGX platform.

Cisco successfully submitted MLPerf v5.0 Inference results in partnership with Intel and NVIDIA to enhance performance and efficiency, optimizing various inference workloads such as large language model (Language), natural language processing (Language), image generation (Image), generative image (Text to Image), image classification (Vision), object detection (Vision), medical image segmentation (Vision), and recommendation (Commerce).

Exceptional AI performance across Cisco UCS platforms:

- Cisco UCS C885A M8 platform with 8x NVIDIA H200 SXM GPUs

- Cisco UCS C885A M8 platform with 8x NVIDIA H100 SXM GPUs

- Cisco UCS C245 M8, X215 M8 + X440p PCIe node with 2x NVIDIA PCIe H100-NVL GPUs & 2x NVIDIA PCIe L40S GPUs

- Cisco UCS C240 M8 with Intel Granite Rapids 6787P processors

CoreWeave

CoreWeave today announced its MLPerf Inference v5.0 results, setting outstanding performance benchmarks in AI inference. CoreWeave is the first cloud provider to submit results using NVIDIA GB200 GPUs.

Using a CoreWeave GB200 instance featuring two Grace CPUs and four Blackwell GPUs, CoreWeave delivered 800 tokens per second (TPS) on the Llama 3.1 405B model, one of the largest open-source models. CoreWeave also submitted results for NVIDIA H200 GPU instances, achieving over 33,000 TPS on the Llama 2 70B model benchmark.

“CoreWeave is committed to delivering cutting-edge infrastructure optimized for large-model inference through our purpose-built cloud platform,” said Peter Salanki, Chief Technology Officer at CoreWeave. “These benchmark MLPerf results reinforce CoreWeave’s position as a preferred cloud provider for some of the leading AI labs and enterprises.”

CoreWeave delivers performance gains through its fully integrated, purpose-built cloud platform. Our bare metal instances feature NVIDIA GB200 and H200 GPUs, high-performance CPUs, NVIDIA InfiniBand with Quantum switches, and Mission Control, which helps to keep every node running at peak efficiency.

This year, CoreWeave became the first to offer general availability of NVIDIA GB200 NVL72 instances. Last year, the company was among the first to offer NVIDIA H100 and H200 GPUs and one of the first to demo GB200s.

Dell Technologies

Dell, in collaboration with NVIDIA, Intel, and Broadcom, has delivered groundbreaking MLPerf Inference v5.0 results, showcasing industry-leading AI inference performance across a diverse range of accelerators and architectures.

Unmatched AI Performance Across Flagship Platforms:

- PowerEdge XE9680 and XE9680L (liquid-cooled): These flagship AI inference servers support high-performance accelerators, including NVIDIA H100 and H200 SXM GPUs, ensuring unparalleled flexibility and compute power for demanding ML workloads.

- PowerEdge XE7745: This PCIe-based powerhouse, equipped with 8x NVIDIA H200-NVL or 8x L40S GPUs, demonstrated exceptional performance and industry-leading performance-per-watt efficiency, making it a strong contender for AI scaling at lower energy costs.

Breakthrough Results in Real-World LLM Inference:

- Superior Performance Across Model Sizes: Dell’s MLPerf submissions include results spanning small (GPT-J 6B), midsize (Llama 2 70B), and large-scale (Llama 3 405B) LLMs, highlighting consistent efficiency and acceleration across different AI workloads.

- Optimized for Latency-Sensitive AI: MLCommons added the Llama 2 70B-Interactive model to show how it works in real time. We shared data to show how well it performs.

- First-Ever Llama 3 Benchmarks: With Llama 3 introduced in this round, Dell’s results provide critical insights into next-generation inference workloads, further substantiating its leadership in AI infrastructure.

Data-Driven Performance Insights for Smarter AI Infrastructure Decisions:

- PCIe vs. SXM Performance Analysis: Dell’s MLPerf results deliver valuable comparative data between PCIe-based GPUs and SXM-based accelerators, equipping customers with the knowledge to optimize AI hardware selection for their specific workloads.

By delivering energy-efficient, high-performance, and latency-optimized AI inference solutions, Dell sets the benchmark for next-gen ML deployment, helping organizations confidently accelerate AI adoption. Generate higher quality, faster predictions and outputs while accelerating decision-making with powerful Dell Technologies solutions. Test drive them at our worldwide Customer Solution Centers or collaborate in our innovation labs to tap into one of our Centers of Excellence.

FlexAI

FlexAI is a Paris-based company founded by industry veterans from Apple, Intel, NVIDIA, and Tesla. It specializes in optimizing and simplifying AI workloads through its Workload as a Service (WaaS) platform. This platform dynamically scales, adapts, and self-recovers, enabling developers to train, fine-tune, and deploy AI models faster, at lower costs, and with reduced complexity.

For this submission round, FlexAI shared with the community a prototype of a simple, open-source tool based on MLPerf LoadGen to benchmark the out-of-the-box performance and accuracy of non-MLPerf models and datasets from the Hugging Face Hub, using vLLM and other inference engines across commodity software and hardware stacks.

We validated this prototype in our open submission with the DeepSeek R1 and Llama 3.3 models. An advanced version of this tool, with full automation and optimization workflows, is available in the FlexAI cloud. To learn more, please visit: https://flex.ai.

Fujitsu

Fujitsu offers a fantastic blend of systems, solutions, and expertise to guarantee maximum productivity, efficiency, and flexibility, delivering confidence and reliability. Since 2020, we have been actively participating in and submitting to inference and training rounds for both data center and edge divisions.

In this round, we focused on PRIMERGY CDI, equipped with external boxes compatible with PCIe Gen 5, which has 8x H100 NVL GPUs, submitting to two divisions: the data center closed division and its power division.

PRIMERGY CDI stands apart from traditional server products, comprising computing servers, PCIe fabric switches, and PCIe boxes. Device resources such as GPUs, SSDs, and NICs are stored externally in PCIe boxes rather than inside the computing server chassis. The most remarkable feature of PRIMERGY CDI is the ability to freely allocate devices within the PCIe boxes to multiple computing servers. For instance, you can reduce the number of GPUs for inference tasks during the day and increase them for training tasks at night. This flexibility in GPU allocation allows for reduced server standby power without occupying GPUs for specific workloads.

In this round, the PRIMERGY CDI system equipped with 8x H100 NVL GPUs achieved outstanding results across seven benchmark programs, including mixtral-8x7b, which could not be submitted in the previous round, as well as the newly added llama 2 70b-interactive and RGAT.

Our purpose is to make the world more sustainable by building trust in society through innovation. With a rich heritage of driving innovation and expertise, we are dedicated to contributing to the growth of society and our valued customers. Therefore, we will continue to meet the demands of our customers and strive to provide attractive server systems through the activities of MLCommons.

GATEOverflow

GATEOverflow, an education initiative based in India, is pleased to announce its first MLPerf Inference v5.0 submission, reflecting our ongoing efforts in machine learning benchmarking. This submission was driven by the active involvement of our students, supported by GO Classes, fostering hands-on experience in real-world ML performance evaluation.

Our results, more than 15,000 performance benchmarks, were generated using MLCFlow, the automation framework from MLCommons, and deployed across a diverse range of hardware, including laptops, workstations, and cloud platforms such as AWS, GCP, and Azure.

Notably, GATEOverflow contributed over 80% of all closed Edge submissions. We are also the only power submitter in the Edge category and the sole submitter for the newly introduced PointPainting model.

This submission underscores GATEOverflow’s commitment to open, transparent, and reproducible benchmarking. We extend our gratitude to all participants and contributors who played a role in developing MLCFlow automation and ensuring the success of this submission. With this achievement, we look forward to further innovations in AI benchmarking and expanding our collaborations within the MLPerf community.

Giga Computing

Giga Computing, a GIGABYTE subsidiary, specializes in server hardware and advanced cooling solutions. Operating independently, it delivers high-performance computing for data centers, edge environments, HPC, AI, data analytics, 5G, and cloud. With strong industry partnerships, it drives innovation in performance, security, scalability, and sustainability. Giga Computing leverages the well-known GIGABYTE brand at expos, participating under the GIGABYTE banner.

As a founding member of MLCommons, Giga Computing continues to assist the group’s efforts in benchmarking server options for AI coaching and inference workloads. Within the newest MLPerf Inference v5.0 benchmarks, Giga Computing submitted take a look at outcomes primarily based on the GIGABYTE G893 air-cooled sequence outfitted with essentially the most superior accelerators, together with the AMD Intuition TM MI325X and the NVIDIA HGX TM H200. These techniques showcase industry-leading efficiency and supply complete take a look at outcomes throughout all mainstream AI platforms.

These techniques excel in excessive information bandwidth, massive reminiscence capability, optimized GPU useful resource allocation, and distinctive information switch options reminiscent of InfiniBand, Infinity Cloth, and all-Ethernet designs. With a completely optimized thermal design and verified techniques, our outcomes communicate for themselves—delivering excellent effectivity whereas sustaining top-tier efficiency throughout all benchmarked duties.

At Giga Computing, we’re dedicated to continuous enchancment, providing distant testing and public benchmarks for system evaluations. We additionally lead in superior cooling applied sciences, together with immersion and direct liquid cooling (DLC), to deal with the rising energy calls for of contemporary computing. Keep tuned as we push the boundaries of computing excellence with Giga Computing.

Google Cloud

For MLPerf Inference v5.0, Google Cloud submitted 15 results, including its first submission with A3 Ultra (NVIDIA H200) and A4 (NVIDIA HGX B200) VMs, and a second-time submission for Trillium, the sixth-generation TPU. The strong results demonstrate the performance of its AI Hypercomputer, bringing together AI-optimized hardware, software, and consumption models to improve productivity and efficiency.

The A3 Ultra VM is powered by eight NVIDIA H200 Tensor Core GPUs and offers 3.2 Tbps of GPU-to-GPU non-blocking network bandwidth and twice the high bandwidth memory (HBM) compared to A3 Mega with NVIDIA H100 GPUs. Google Cloud's A3 Ultra demonstrated highly competitive performance across LLMs, MoE, image, and recommendation models. In addition, A4 VMs in preview, powered by NVIDIA HGX B200 GPUs, achieved standout results among comparable GPU submissions. A3 Ultra and A4 VMs deliver powerful inference performance, a testament to Google Cloud’s continued close partnership with NVIDIA to provide infrastructure for the most demanding AI workloads.

Google Cloud's Trillium, the sixth-generation TPU, delivers our highest inference performance yet. Trillium continues to achieve standout performance on compute-heavy workloads like image generation, further improving Stable Diffusion XL (SDXL) throughput by 12% since the MLPerf v4.1 submission. Trillium now delivers a 3.5x throughput improvement for queries/second on SDXL compared to the performance demonstrated in the last MLPerf round by its predecessor, TPU v5e. This is driven by Trillium’s purpose-built architecture and advancements in the open software stack, specifically in inference frameworks, to leverage the increased compute power.

Hewlett Packard Enterprise

This is the eighth round Hewlett Packard Enterprise (HPE) has joined since v1.0, and our growing portfolio of high-performance servers, storage, and networking products has consistently demonstrated strong AI inference results. In this round, HPE submitted several new configurations from our Compute and High Performance Computing (HPC) server families with our partner, NVIDIA.

Our HPE ProLiant Compute Gen12 portfolio offers servers optimized for performance and efficiency to support a wide variety of AI models and inference budgets. Highlights include:

- HPE ProLiant Compute DL380a Gen12 – supporting eight NVIDIA H200 NVL, H100 NVL, or L40S GPUs per server – with NVIDIA TensorRT LLM more than doubled inference throughput since the last round, thanks to upgrades and performance optimizations.

- HPE ProLiant Compute DL384 Gen12 with dual-socket (2P) NVIDIA GH200 144GB demonstrated twice the performance of our single-socket (1P) NVIDIA GH200 144GB submission – a significant first in HPE’s MLPerf results.

- HPE’s first-ever submission of an HPE Compute Scale-up Server 3200 featured a 1-to-1 mapping between four Intel CPUs and four NVIDIA H100 GPUs, offering high-performance reliability and scalable inference.

The HPE Cray XD portfolio delivers high performance for a variety of training and inference use cases. Highlights of the inference results include:

- HPE Cray XD670 air-cooled servers with 8-GPU NVIDIA HGX H200 and H100 baseboards delivered our highest MLPerf inference performance to date.

- All HPE Cray results used HPE GreenLake for File Storage or HPE Cray ClusterStor E1000 Storage Systems to host datasets and model checkpoints, proving that high-throughput inference can be achieved without moving datasets and checkpoints to local disks.

HPE would like to thank the MLCommons community and our partners for their continued innovation and for making MLPerf the industry standard to measure AI performance.

Intel

The latest MLPerf Inference v5.0 results reaffirm the strength of Intel Xeon 6 with P-cores in AI inference and general-purpose AI workloads.

Across six MLPerf benchmarks, Xeon 6 CPUs delivered a 1.9x AI performance boost over the previous generation, 5th Gen Intel Xeon processors. The results highlight Xeon’s capabilities in AI workloads, including classical machine learning, small- to mid-size models, and relational graph node classification.

Since first submitting Xeon to MLPerf in 2021 (with 3rd Gen Intel Xeon Scalable Processors), Intel has achieved up to a 15x performance increase, driven by hardware and software advancements, with recent optimizations improving results by 22% over v4.1.

Notably, Intel is the only vendor submitting server CPU results to MLPerf. Xeon also continues to be the host CPU of choice for accelerated systems.

Intel also supported several customers with their submissions and collaborated with OEM partners – Cisco, Dell Technologies, Quanta, and Supermicro – to deliver MLPerf submissions powered by Intel Xeon 6 with P-cores. Thank you, MLCommons, for creating a trusted standard for measurement and accountability. For more details, please see MLCommons.org.

KRAI

Founded in 2020 in Cambridge, UK ("The Silicon Fen"), KRAI is a purveyor of premium benchmarking and optimization solutions for AI Systems. Our experienced team has participated in 11 out of 11 MLPerf Inference rounds, having contributed to some of the fastest and most energy-efficient results in MLPerf history in collaboration with leading vendors.

As we know firsthand how much effort (months to years) goes into preparing highly competitive and fully compliant MLPerf submissions, we set out to demonstrate what can be achieved with more realistic effort (days to weeks). As the only submitter of the state-of-the-art DeepSeek-v3-671B Open Division workload, we compared it against Llama 3.1 405B on 8x MI300X-192GB GPUs. Surprisingly, (sparse) DeepSeek-v3 was slower than (dense) Llama 3.1 405B in terms of tokens per second (TPS: 243.45 vs 278.65), as well as less accurate (e.g. rougeL: 18.95 vs 21.63).

Furthermore, we compared the performance of Llama 3.1 70B using several publicly released Docker images on 8x H200-141GB GPUs, achieving Offline scores of up to 30,530 TPS with NIM v1.5.0, 27,950 TPS with SGLang v0.4.3, and 21,372 TPS with vLLM v0.6.4. Interestingly, NIM was slower than SGLang in terms of queries per second (QPS: 94.26 vs 95.65). This is explained by NIM also being less accurate than SGLang (e.g. rouge1: 46.52 vs 47.57), while generating more tokens per sample on average. In the new interactive Server category with much stricter latency constraints, NIM achieved 15,960 TPS vs 28,421 TPS for non-interactive Server and 30,530 TPS for Offline.

We cordially thank Hewlett Packard Enterprise for providing access to Cray XD670 servers with 8x NVIDIA H200 GPUs, and Dell Technologies for providing access to PowerEdge XE9680 servers with 8x AMD MI300X GPUs.
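The relationship between the TPS and QPS figures above can be checked with a little arithmetic. The sketch below is only an illustration: it assumes that the quoted QPS values belong to the same Offline runs as the quoted TPS values, and the function name is hypothetical.

```python
# Illustrative arithmetic only. Assumption: the QPS and TPS figures quoted
# above come from the same Offline runs of each serving stack.

def tokens_per_sample(tps: float, qps: float) -> float:
    """Average generated tokens per query, given tokens/s and queries/s."""
    return tps / qps

# Figures quoted in the text above.
nim_tokens = tokens_per_sample(tps=30_530, qps=94.26)
sglang_tokens = tokens_per_sample(tps=27_950, qps=95.65)

print(f"NIM:    {nim_tokens:.1f} tokens per sample")
print(f"SGLang: {sglang_tokens:.1f} tokens per sample")
```

Under that assumption, NIM generates roughly 324 tokens per sample against roughly 292 for SGLang, which is how a stack can lead on TPS while trailing on QPS.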

Lambda

About Lambda

Lambda was founded in 2012 by AI engineers with published research at the top machine learning conferences in the world. Our goal is to become the #1 AI compute platform serving developers across the entire AI development lifecycle. Lambda enables AI engineers to easily, securely and affordably build, test and deploy AI products at scale. Our product portfolio spans from on-prem GPU hardware to hosted GPUs in the cloud – in both Public and Private settings. Lambda’s mission is to create a world where access to computation is as effortless and ubiquitous as electricity.

About the Benchmarks

This is our team's first participation in the MLPerf inference round of submissions, in partnership with NVIDIA. Our benchmarks were run on two Lambda Cloud 1-Click Clusters:

- 1-Click Cluster with eight NVIDIA H200 141GB SXM GPUs, 112 CPU Cores, 2 TB RAM, and 28 TB SSD.

- 1-Click Cluster with eight NVIDIA B200 180GB SXM GPUs, 112 CPU Cores, 2.9 TB RAM, and 28 TB SSD.

Our inference benchmark runs for models like DLRM, GPT-J, Llama 2 and Mixtral on 8 GPUs demonstrate state-of-the-art time-to-solution on Lambda Cloud 1-Click Clusters. We noted significant throughput improvements on our clusters, with performance levels making them prime systems for our customers' most demanding inference workloads.

Lenovo

Empowering Innovation: Lenovo's AI Journey

At Lenovo, we're passionate about harnessing the power of AI to transform industries and lives. To achieve this vision, we invest in cutting-edge research, rigorous testing, and collaboration with industry leaders through MLPerf Inference v5.0.

Driving Excellence Through Partnership and Collaboration

Our partnership with MLCommons enables us to demonstrate our AI solutions' performance and capabilities quarterly, showcasing our commitment to innovation and customer satisfaction. By working together with industry pioneers like NVIDIA on critical applications such as image classification and natural language processing, we've achieved outstanding results.

Unlocking Innovation: The Power of Lenovo ThinkSystem

Our powerful ThinkSystems SR675 V3, SR680a V3, SR6780a V3, and SR650a V4, equipped with NVIDIA GPUs, enable us to develop and deploy AI-powered solutions that drive business outcomes and improve customer experiences. We're proud to have participated in these challenges using our ThinkSystem alongside NVIDIA GPUs.

Partnership for Growth: Enhancing Product Development

Our partnership with MLCommons provides valuable insights into how our AI solutions compare against the competition, sets customer expectations, and empowers us to continuously enhance our products. Through this collaboration, we can work closely with industry experts to drive growth and deliver better products for our customers.

By empowering innovation and driving excellence in AI development, we're committed to delivering unparalleled experiences and outcomes for our customers. Our partnerships with leading organizations like NVIDIA have been instrumental in achieving these goals. Together, we're shaping the future of AI innovation and making it accessible to everyone.

MangoBoost

MangoBoost is a DPU and system software company aiming to offload network and storage tasks to improve performance, scalability and efficiency of modern datacenters.

We’re excited to introduce Mango LLMBoost, a ready-to-deploy AI inference serving solution that delivers high performance, scalability, and flexibility. The LLMBoost software stack is designed to be seamless and flexible across a diverse range of GPUs. In this submission, MangoBoost partnered with AMD and showcased the performance of LLMBoost as a highly scalable multi-node inference serving solution.

Our results for Llama 2 70B on 32 MI300X GPUs showcase linear performance scaling in both server and offline scenarios, achieving 103k and 93k TPS respectively. This result highlights how LLMBoost enables easy LLM scaling, from a single-GPU proof of concept to large-scale, multi-GPU deployments. Best of all, anyone can replicate our results with ease by downloading our Docker image and running a single command.

Mango LLMBoost has been rigorously tested and proven to support inference serving on multiple GPU architectures from AMD and NVIDIA, and is compatible with popular open models such as Llama, Qwen, and DeepSeek, as well as multi-modal models such as Llava. LLMBoost’s one-line deployment and robust OpenAI API and REST API integration enable developers and data scientists to rapidly incorporate LLMBoost inference serving into their existing software ecosystems. LLMBoost is available and free to try in major cloud environments including Azure, AWS, and GCP, as well as on-premise.

MangoBoost's suite of DPU and hardware solutions provides complementary hardware acceleration for inference serving, significantly reducing system overhead in modern AI/ML workloads. Mango GPUBoost RDMA accelerates multi-node inference and training over RoCEv2 networks, while Mango NetworkBoost offloads web-serving and TCP stacks, dramatically reducing CPU utilization. Mango StorageBoost delivers high-performance storage initiator and target solutions, enabling AI storage systems (including traditional and JBoF systems) to scale efficiently.
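To make "linear scaling" concrete, the sketch below works out the per-GPU throughput implied by the rounded 103k and 93k TPS figures on 32 GPUs, and defines scaling efficiency as the ratio of per-GPU throughput at scale to per-GPU throughput at a smaller baseline. The function names are hypothetical, and the baseline numbers in the efficiency example are made up purely for illustration; the text above does not report a baseline.

```python
# Illustrative arithmetic only; not taken from the submission itself.

def per_gpu_tps(total_tps: float, num_gpus: int) -> float:
    """Average tokens/s contributed by each GPU."""
    return total_tps / num_gpus

def scaling_efficiency(base_tps: float, base_gpus: int,
                       scaled_tps: float, scaled_gpus: int) -> float:
    """1.0 means perfectly linear scaling from the baseline configuration."""
    return per_gpu_tps(scaled_tps, scaled_gpus) / per_gpu_tps(base_tps, base_gpus)

# Rounded figures quoted in the text above (32 GPUs).
server_per_gpu = per_gpu_tps(103_000, 32)
offline_per_gpu = per_gpu_tps(93_000, 32)
print(server_per_gpu, offline_per_gpu)

# HYPOTHETICAL baseline, for illustration only: 8 GPUs at 25,000 TPS
# growing to 32 GPUs at 100,000 TPS would be exactly linear.
example_efficiency = scaling_efficiency(25_000, 8, 100_000, 32)
print(example_efficiency)
```

With the quoted figures, each GPU contributes about 3.2k and 2.9k TPS in the two scenarios; linear scaling means these per-GPU values stay roughly constant as nodes are added.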

NVIDIA

In MLPerf Inference v5.0, NVIDIA and its partners submitted many outstanding results across both the Hopper and Blackwell platforms. NVIDIA made its first MLPerf submission using the GB200 NVL72 rack-scale system, featuring 72 Blackwell GPUs and 36 Grace CPUs in a rack-scale, liquid-cooled design. It incorporates a 72-GPU NVLink domain that acts as a single, massive GPU. This system achieved Llama 3.1 405B token throughput of 13,886 tokens per second in the offline scenario and achieved 30X higher throughput in the server scenario than the NVIDIA submission using eight Hopper GPUs.

NVIDIA also submitted results using a system with eight B200 GPUs, connected over NVLink, including on the Llama 2 70B Interactive benchmark, a version of the Llama 2 70B benchmark with tighter time-to-first-token and token-to-token latency constraints. On this benchmark, eight Blackwell GPUs delivered triple the token throughput compared to the same number of Hopper GPUs.

Hopper performance also saw increases, bringing even more value to the Hopper installed base. Through full-stack optimizations, the Hopper architecture delivered a cumulative performance improvement of 1.6X in a single year on the Llama 2 70B benchmark among results in the “available” category. Hopper also continued to deliver great results on all benchmarks, including on the new Llama 2 70B Interactive, Llama 3.1 405B, and GNN benchmarks.

Fifteen NVIDIA partners submitted great results on the NVIDIA platform, including ASUSTek, Broadcom, CoreWeave, Cisco, Dell Technologies, Fujitsu, GigaComputing, Google, HPE, Lambda, Lenovo, Oracle, Quanta Cloud Technology, Supermicro, and Sustainable Metal Cloud. NVIDIA would also like to commend MLCommons for their ongoing commitment to develop and promote objective and useful measurements of AI platform performance.

Oracle

Oracle has delivered stellar MLPerf Inference v5.0 results, showcasing the strengths of OCI’s cloud offering in providing industry-leading AI inference performance. Oracle successfully submitted results across various inference workloads such as image classification, object detection, LLM – Q&A, summarization, text and code generation, node classification, and recommendation for commerce. The inference benchmark results for the high-end NVIDIA H200 bare metal instance demonstrate that OCI provides the high performance and throughput that are essential to delivering fast, cost-effective, and scalable LLM inference across diverse applications.

Oracle Cloud Infrastructure (OCI) offers AI Infrastructure, Generative AI, AI Services, ML Services, and AI in our Fusion Applications. OCI has various GPU options for workloads ranging from small-scale to mid-level and large-scale AI. For the smallest workloads, we offer both bare metal (BM) and VM instances with NVIDIA A10 GPUs. For mid-level scale-out workloads, we offer NVIDIA A100 VMs and BMs and NVIDIA L40S bare metal instances. For the largest inference and foundational model training workloads, we offer NVIDIA A100 80GB, H100, H200, GB200 and GB300 GPUs that can scale from one node to tens of thousands of GPUs.

Generative AI workloads drive a different set of engineering tradeoffs than traditional cloud workloads. So, we designed a purpose-built GenAI network tailored to the needs of best-of-breed Gen AI workloads. Oracle Cloud Infrastructure (OCI) offers many unique services, including cluster network, an ultra-high performance network with support for remote direct memory access (RDMA) to support the high-throughput and latency-sensitive nature of Gen AI workloads, as well as high performance storage solutions.

Quanta Cloud Technology

Quanta Cloud Technology (QCT), a global leader in data center solutions, continues to drive innovation in HPC and AI with best-in-class system designs tailored to meet the evolving demands of modern computational workloads. As part of its commitment to delivering cutting-edge performance, QCT participated in the latest MLPerf Inference v5.0 benchmark, submitting results in the data center closed division across various system configurations.

In this round of MLPerf Inference v5.0 submissions, QCT showcased systems based on different compute architectures, including CPU-centric and GPU-centric systems.

CPU-Centric Systems:

- QuantaGrid D55X-1U – A high-density 1U server powered by dual Intel Xeon 6 processors as the primary compute engines. With AMX instruction set support, it delivers optimized inference performance across general-purpose AI models such as ResNet-50, RetinaNet, 3D-UNet, and DLRMv2, as well as small language models like GPT-J-6B, offering a cost-efficient alternative to GPU-based solutions.

GPU-Centric Systems:

- QuantaGrid D54U-3U – A highly flexible 3U x86_64-based platform supporting up to four dual-width or eight single-width PCIe GPUs, allowing users to customize configurations based on their workload requirements.

- QuantaGrid D74H-7U – A powerful 7U x86_64-based system designed for large-scale AI workloads, supporting up to eight NVIDIA H100 SXM5 GPUs. Leveraging NVLink interconnect, it enables high-speed GPU-to-GPU communication and supports GPUDirect Storage, ensuring ultra-fast, low-latency data transfers.

- QuantaGrid S74G-2U – A next-generation 2U system based on the NVIDIA Grace Hopper Superchip. The integration of CPU and GPU via NVLink C2C establishes a unified memory architecture, allowing seamless data access and improved computational efficiency.

QCT’s comprehensive AI infrastructure solutions cater to a diverse user base, from academic researchers to enterprise clients, who require robust and scalable AI infrastructure. By actively participating in MLPerf benchmarking and openly sharing results, QCT underscores its commitment to transparency and reliability, empowering customers to make data-driven decisions based on validated performance metrics.

Supermicro

Supermicro offers a comprehensive AI portfolio with over 100 GPU-optimized systems, in both air-cooled and liquid-cooled options, with a choice of Intel, AMD, NVIDIA, and Ampere CPUs. These systems can be integrated into racks, together with storage and networking, for deployment in data centers. Supermicro is shipping NVIDIA HGX 8-GPU B200 systems, as well as NVL72 GPU systems, along with an extensive list of other systems.