It has been an eventful week for Hugging Face, with a steady stream of AI releases. The company has just released Transformers Agent, which lets users control more than 100,000 Hugging Face models by conversing with the Transformers and Diffusers interface. The new API of tools and agents can call on other Hugging Face models to address complex, multimodal challenges.

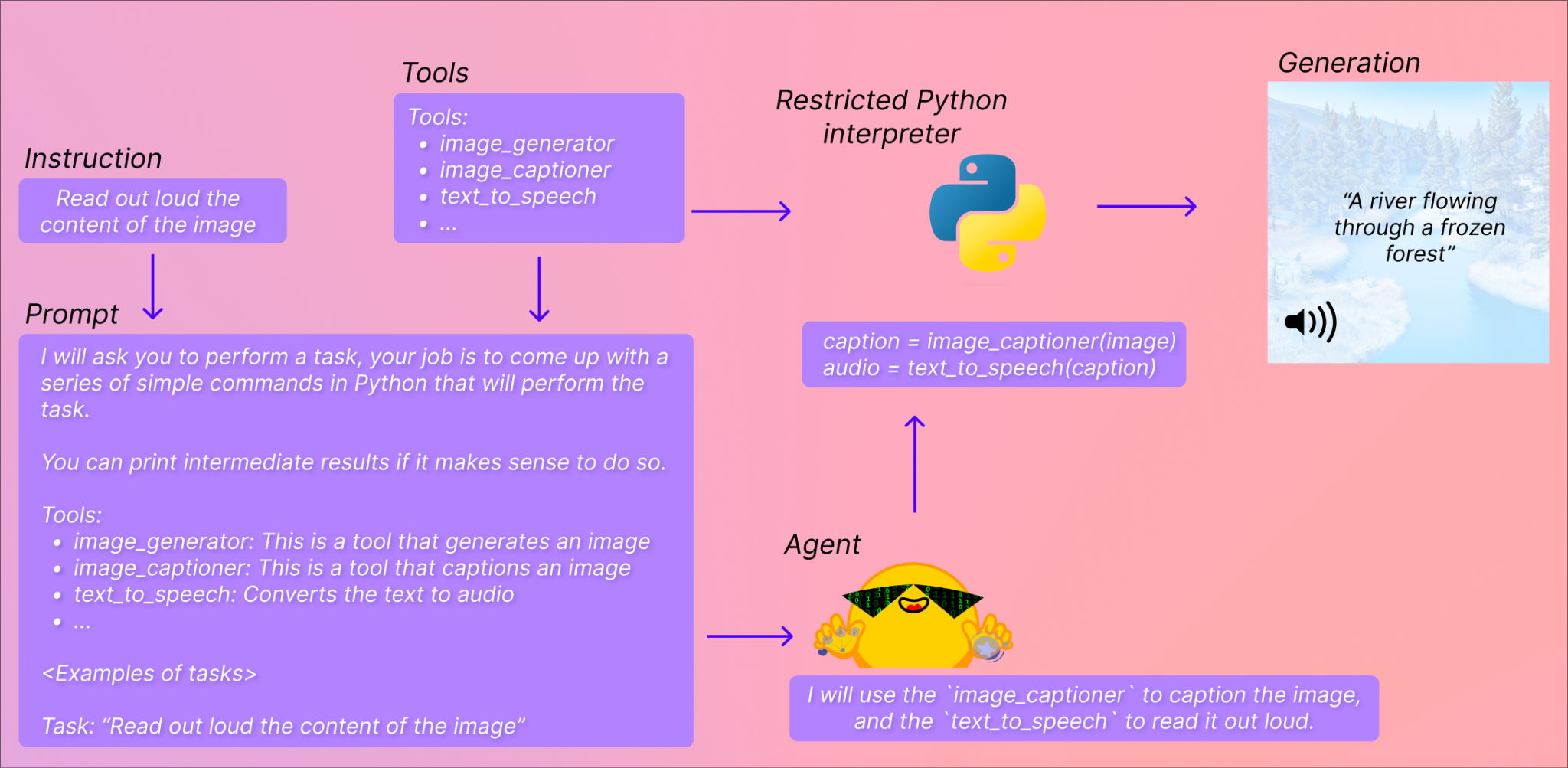

Transformers Agent provides a natural language API on top of transformers, with a set of curated tools and an agent designed to interpret natural language and utilise these tools. The system is intentionally extensible, so relevant tools developed by the community can be integrated easily. Before using the agent.run functionality, users must instantiate an agent, which is backed by a large language model (LLM). The system supports both OpenAI models and open-source alternatives from BigCode and OpenAssistant. Hugging Face offers free access to endpoints for the BigCode and OpenAssistant models.

Each tool consists of a single function with a designated name and description, which are used to prompt the agent to perform a given task. The agent is taught how to use these tools by means of a prompt that demonstrates how they can be combined to answer the requested query. While pipelines frequently combine multiple tasks into a single operation, tools are designed to concentrate on one specific, uncomplicated task.
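To make the idea concrete, here is a minimal sketch of a tool as "one function plus a name and a description", and of how those descriptions could be assembled into a prompt. The `Tool` class, `build_prompt` helper, and the two toy tools are hypothetical names invented for illustration; they are not the Transformers API, and the real prompt is considerably more elaborate.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Tool:
    """Hypothetical tool: a single function with a name and a description."""
    name: str
    description: str
    func: Callable[..., object]

    def __call__(self, *args, **kwargs):
        # Calling the tool simply forwards to the wrapped function.
        return self.func(*args, **kwargs)


def build_prompt(tools: List[Tool], task: str) -> str:
    """List every tool's name and description, then state the task."""
    lines = ["You can call the following tools:"]
    for tool in tools:
        lines.append(f"- {tool.name}: {tool.description}")
    lines.append(f"Task: {task}")
    lines.append("Answer with Python code that uses only these tools.")
    return "\n".join(lines)


# Toy stand-ins; a real tool would wrap a model such as Whisper or BLIP.
upper = Tool("text_upper", "Returns the input text in upper case.", str.upper)
counter = Tool(
    "word_counter",
    "Returns the number of words in a text.",
    lambda text: len(text.split()),
)

prompt = build_prompt([upper, counter], "Count the words in 'hello big world'.")
print(prompt)
```

Because each tool does one small thing, the description can stay short enough for the LLM to pick the right tool from the prompt alone.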

There are two APIs available:

Single execution (run): Single execution uses the agent’s run() method, which automatically selects the tool or tools required for the task at hand and runs them. The run() method can execute one or several tasks in the same instruction, although the more complex the instruction, the more likely the agent is to fail. Each run() operation is independent, so the user can call it several times in a row with different tasks.

Chat-based execution (chat): Chat-based execution uses the agent’s chat() method, which is particularly useful when state needs to be maintained across instructions. While it is ideal for experimentation, it is not particularly well suited to complex instructions, which the run() method is better equipped to handle. The chat() method can also accept arguments, allowing non-textual types or specific prompts to be passed in as required.
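The difference between the two methods comes down to state, which the following toy sketch tries to illustrate. `ToyAgent` is a hypothetical class, not the Transformers agent: the real agent receives a natural-language instruction and lets the LLM choose the tools, whereas here the caller names the tool directly so the example runs without any model.

```python
from typing import Callable, Dict, Optional


class ToyAgent:
    """Illustrative only: mimics the stateless run() / stateful chat() split."""

    def __init__(self, tools: Dict[str, Callable[[str], str]]):
        self.tools = tools
        self.last_result: Optional[str] = None  # kept across chat() calls

    def run(self, tool_name: str, text: str) -> str:
        """Stateless: the result depends only on this call's arguments."""
        return self.tools[tool_name](text)

    def chat(self, tool_name: str, text: Optional[str] = None) -> str:
        """Stateful: with no text given, build on the previous chat() result."""
        if text is None:
            if self.last_result is None:
                raise ValueError("no earlier chat() result to build on")
            text = self.last_result
        self.last_result = self.tools[tool_name](text)
        return self.last_result


agent = ToyAgent({"shout": str.upper, "reverse": lambda s: s[::-1]})

# Two independent run() calls: neither sees the other's output.
first = agent.run("shout", "hello")
second = agent.run("reverse", "hello")

# Two chat() calls: the second one reuses the first one's result.
step_one = agent.chat("shout", "hello")
step_two = agent.chat("reverse")

print(first, second, step_one, step_two)
```

Here `second` is computed from the original input, while `step_two` is computed from the output of `step_one`, which is the behaviour that makes chat() convenient for step-by-step experimentation.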

Whichever method is used, the agent responds with Python code. That code is then executed by a small Python interpreter on the set of inputs passed along with the tools. Despite concerns about arbitrary code execution, the only functions that can be called are the tools provided and the print function, which limits what can be executed. Additionally, attribute lookups and imports are not allowed, further reducing the risk of attacks.
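The restrictions described above (whitelisted calls only, no attribute lookups, no imports) can be sketched with Python's standard `ast` module. This is not Hugging Face's interpreter: `restricted_exec` is a hypothetical helper that validates the syntax tree and then hands it to `exec`, a simpler approach chosen for brevity, and it should not be treated as a real security boundary.

```python
import ast
from typing import Callable, Dict


def restricted_exec(
    code: str,
    tools: Dict[str, Callable],
    inputs: Dict[str, object],
) -> Dict[str, object]:
    """Run `code`, permitting calls only to `tools` and print (sketch)."""
    allowed = {**tools, "print": print}
    tree = ast.parse(code)

    # Reject forbidden constructs before anything is executed.
    for node in ast.walk(tree):
        if isinstance(node, (ast.Import, ast.ImportFrom)):
            raise ValueError("imports are not allowed")
        if isinstance(node, ast.Attribute):
            raise ValueError("attribute lookups are not allowed")
        if isinstance(node, ast.Call):
            is_plain_name = isinstance(node.func, ast.Name)
            if not (is_plain_name and node.func.id in allowed):
                raise ValueError("only the provided tools and print may be called")

    # Empty __builtins__: only whitelisted names and inputs are reachable.
    namespace = {"__builtins__": {}, **allowed, **inputs}
    exec(compile(tree, "<agent>", "exec"), namespace)

    # Return the inputs plus any variables the code created.
    hidden = set(allowed) | {"__builtins__"}
    return {k: v for k, v in namespace.items() if k not in hidden}


tools = {"shout": str.upper}  # toy stand-in for a real tool
result = restricted_exec(
    "answer = shout(text)\nprint(answer)", tools, {"text": "hi"}
)
```

With this sketch, code such as `import os`, `text.upper()`, or `len(text)` is rejected with a `ValueError` before it runs, while a call to the whitelisted `shout` tool goes through.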

Curated Tools

- Document-based question answering: Using Donut, agents can answer questions about a document, such as a PDF, that is supplied in image format (Donut).

- Text-based question answering: With Flan-T5, agents can answer questions based on long texts by identifying the most relevant information (Flan-T5).

- Unconditional image captioning: Agents can add captions to images using BLIP (BLIP).

- Image question answering: ViLT enables agents to answer questions about an image by identifying the most relevant features (ViLT).

- Image segmentation: With CLIPSeg, agents can output a segmentation mask for an image given a prompt describing what to segment, which can be useful for tasks like object detection (CLIPSeg).

- Speech to text: Agents can transcribe spoken words into text using Whisper, which is particularly useful for processing audio recordings (Whisper).

- Text to speech: SpeechT5 enables agents to convert text into speech (SpeechT5).

- Zero-shot text classification: BART helps agents to classify text into predefined labels without needing prior training data (BART).

- Text summarisation: With BART, agents can summarise long texts into concise sentences or paragraphs (BART).

- Translation: NLLB allows agents to translate text from one language to another, which can be useful for communication across different cultures and languages (NLLB).

Custom Tools

- Text downloader: This tool enables you to download text from a web URL.

- Text to image: With this tool, you can create an image based on a prompt, using Stable Diffusion.

- Image transformation: Using InstructPix2Pix Stable Diffusion, this tool modifies an initial image according to a prompt.

- Text to video: This tool generates a brief video according to a prompt, using damo-vilab.

Last week, Hugging Face partnered with ServiceNow to develop a new open-source language model for code called StarCoder. Created as part of the BigCode initiative, the model is an improved version of StarCoderBase, further trained on 35 billion Python tokens. According to the researchers, StarCoder was evaluated on a range of benchmarks, including HumanEval for Python. It outperformed larger models such as PaLM, LaMDA, and LLaMA, and matched or exceeded closed models such as OpenAI’s code-cushman-001 (the original Codex model that powered early versions of GitHub Copilot).

The post Hugging Face Releases Groundbreaking Transformers Agent appeared first on Analytics India Magazine.