Image by Author

Anthropic has recently launched a new series of AI models that have outperformed both GPT-4 and Gemini in benchmark tests. With the AI industry growing and evolving rapidly, Claude 3 models are making significant strides as the next big thing in Large Language Models (LLMs).

In this blog post, we will explore the performance benchmarks of the Claude 3 models. We will also learn about the new Python API, which supports simple, asynchronous, and streaming response generation, along with the models' enhanced vision capabilities.

Introducing Claude 3

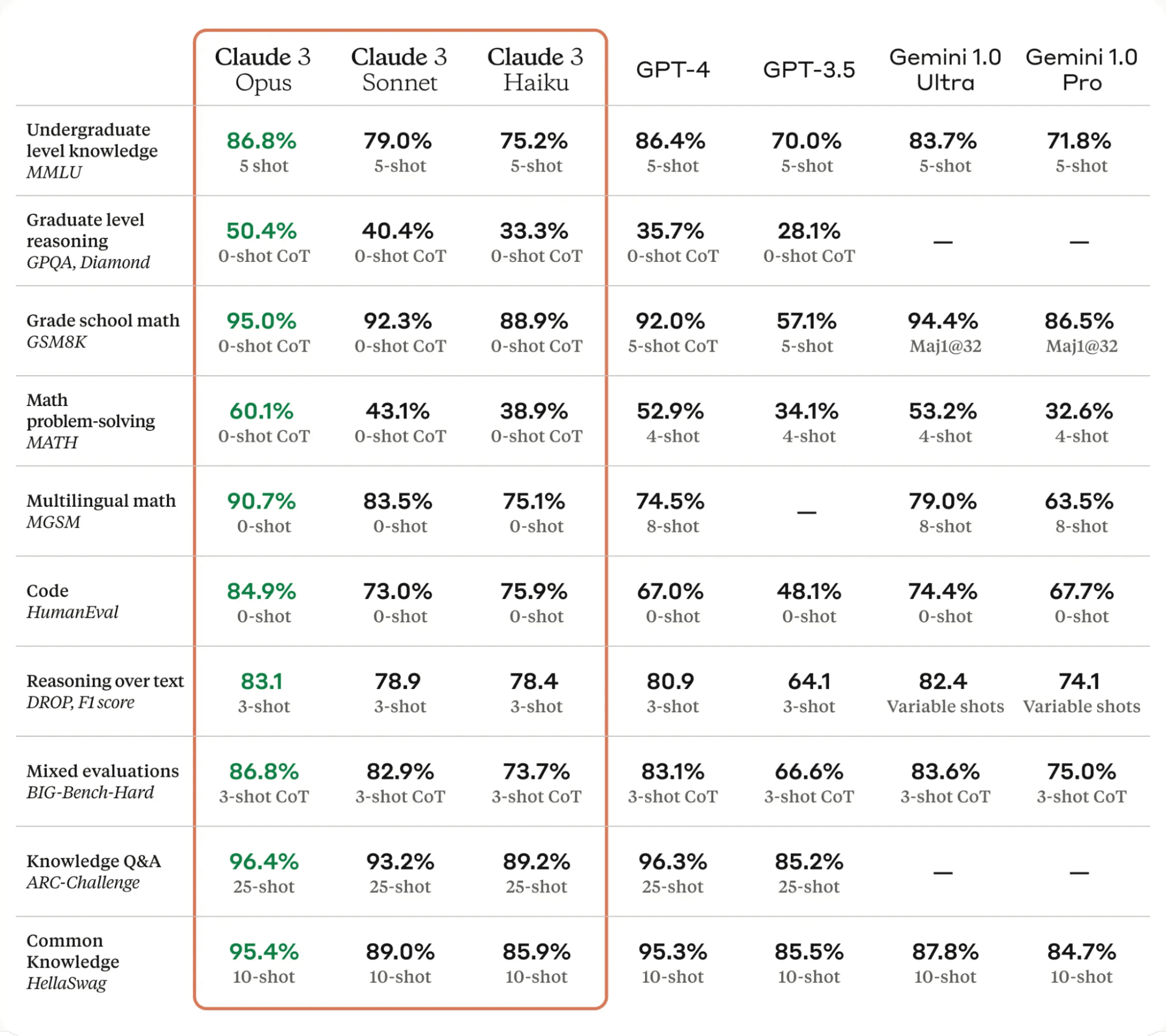

Claude 3 is a significant leap forward in the field of AI technology. It outperforms state-of-the-art language models on various evaluation benchmarks, including MMLU, GPQA, and GSM8K, demonstrating near-human levels of comprehension and fluency in complex tasks.

The Claude 3 models come in three variants: Haiku, Sonnet, and Opus, each with its unique capabilities and strengths.

- Haiku is the fastest and most cost-effective model, capable of reading and processing information-dense research papers in less than three seconds.

- Sonnet is 2x faster than Claude 2 and 2.1, excelling at tasks demanding rapid responses, like knowledge retrieval or sales automation.

- Opus delivers similar speeds to Claude 2 and 2.1 but with much higher levels of intelligence.

According to the table below, Claude 3 Opus outperformed GPT-4 and Gemini Ultra on all of the listed LLM benchmarks, making it the new leader in the AI world.

Table from Claude 3

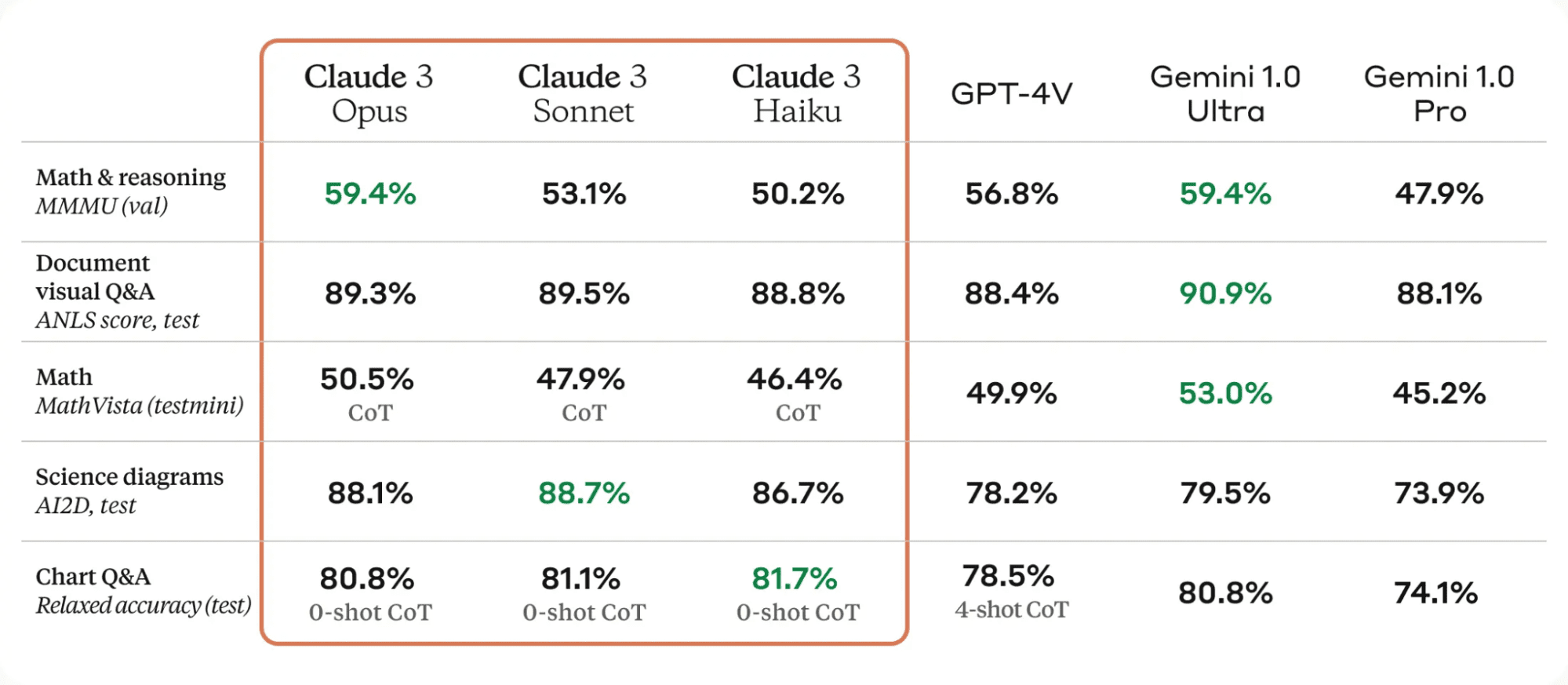

One of the significant improvements in the Claude 3 models is their strong vision capabilities. They can process various visual formats, including photos, charts, graphs, and technical diagrams.

Table from Claude 3

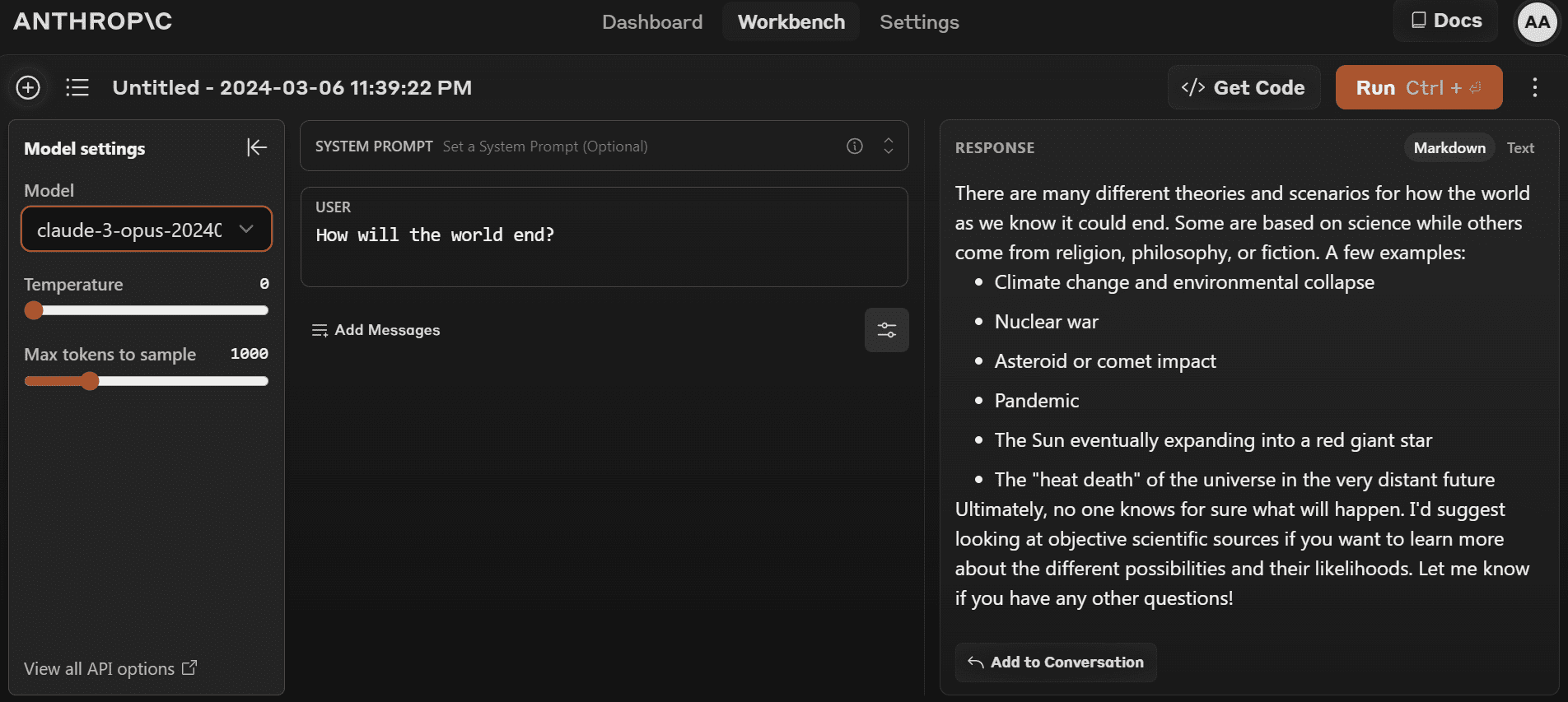

You can start using the latest model by going to https://www.anthropic.com/claude and creating a new account. It is quite simple compared to the OpenAI playground.

Setting Up

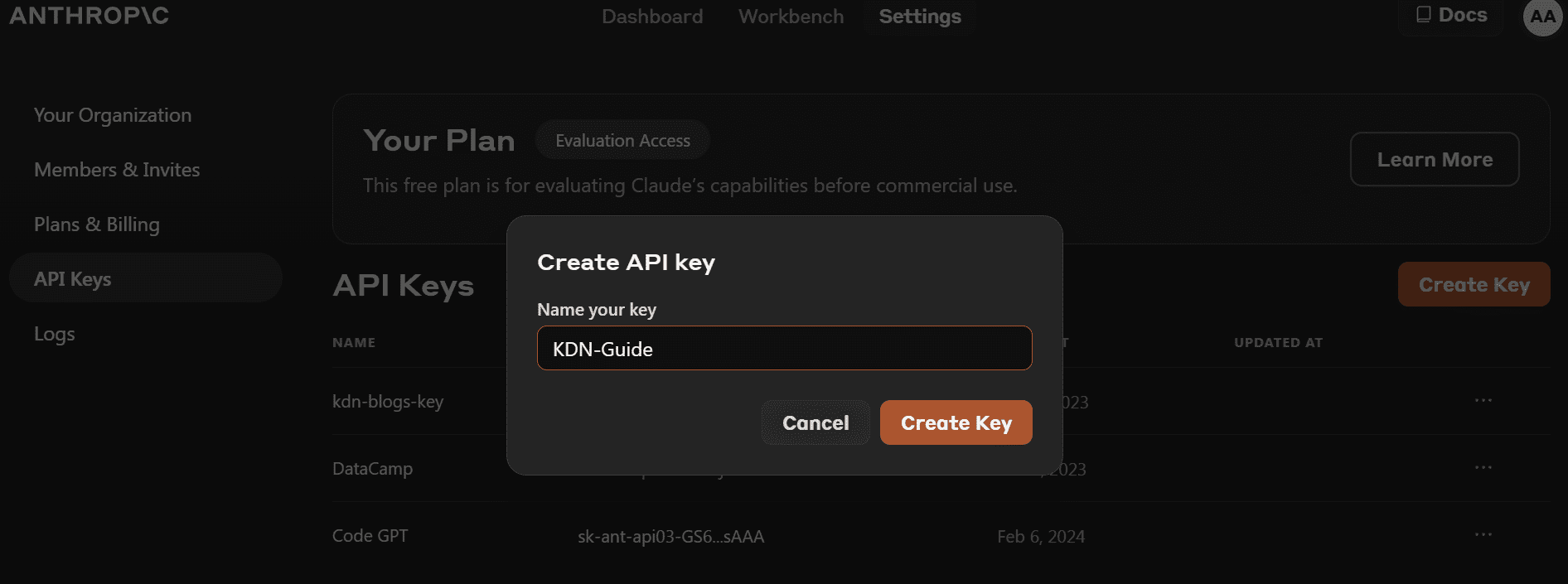

- Before we install the Python package, we need to go to https://console.anthropic.com/dashboard and get the API key.

- Rather than hard-coding the API key when creating the client object, set the `ANTHROPIC_API_KEY` environment variable and read the key from it.

- Install the `anthropic` Python package using pip.

```
pip install anthropic
```

- Create the client object using the API key. We will use the client for text generation, vision, and streaming.

```python
import os

import anthropic
from IPython.display import Markdown, display

# Read the key from the environment instead of hard-coding it.
client = anthropic.Anthropic(
    api_key=os.environ["ANTHROPIC_API_KEY"],
)
```

API That Only Works with Claude 2

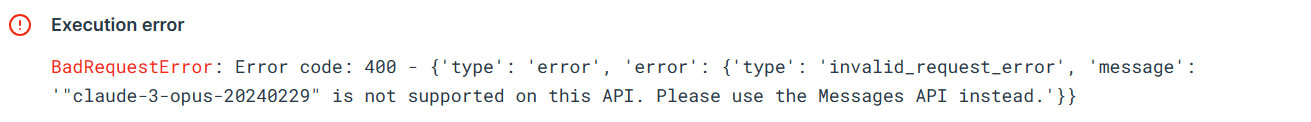

Let’s try the older completions API to check whether it still works with the new models. We will provide it with the model name, the maximum number of tokens to sample, and the prompt.

```python
from anthropic import HUMAN_PROMPT, AI_PROMPT

# Older completions API: a single prompt string wrapped in turn markers.
completion = client.completions.create(
    model="claude-3-opus-20240229",
    max_tokens_to_sample=300,
    prompt=f"{HUMAN_PROMPT} How do I cook a original pasta?{AI_PROMPT}",
)
Markdown(completion.completion)
```

The error shows that we cannot use the old API for the `claude-3-opus-20240229` model. We need to use the Messages API instead.

Claude 3 Opus Python API

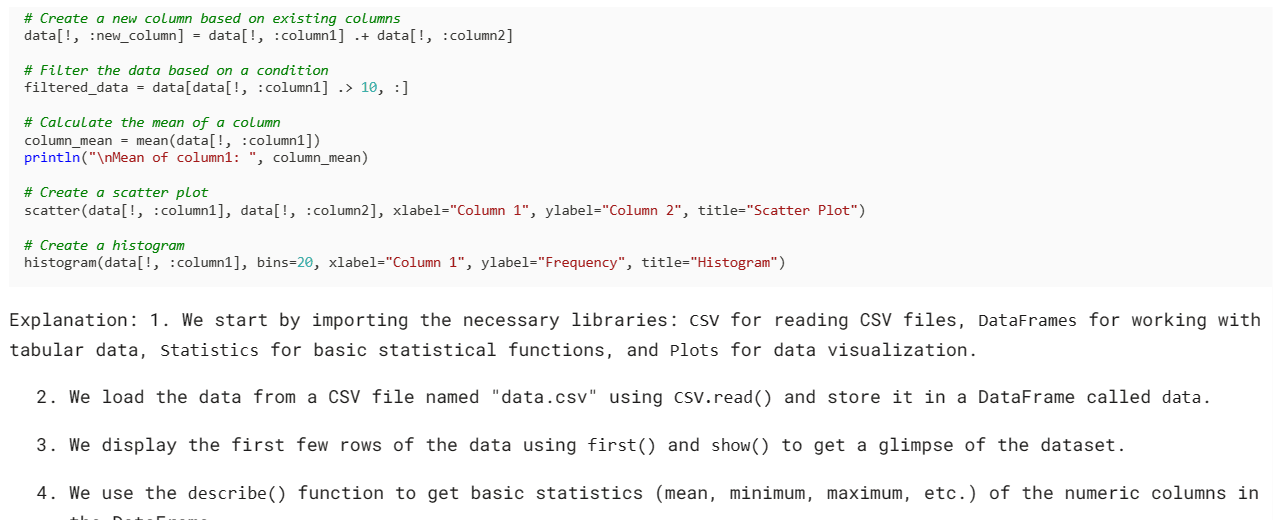

Let’s use the Messages API to generate the response. Instead of prompt, we have to provide the messages argument with a list of dictionaries containing the role and content.

```python
Prompt = "Write the Julia code for the simple data analysis."

message = client.messages.create(
    model="claude-3-opus-20240229",
    max_tokens=1024,
    messages=[
        {"role": "user", "content": Prompt}
    ],
)
# The reply is a list of content blocks; the first one holds the text.
Markdown(message.content[0].text)
```

Wrapping the response in IPython's `Markdown` renders it as Markdown, so bullet points, code blocks, headings, and links are displayed cleanly.
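For a multi-turn conversation, the same `messages` list simply grows by one dictionary per turn. The sketch below illustrates that shape without calling the API. `build_messages` is a hypothetical helper, not part of the `anthropic` package, and the assistant text is a placeholder rather than a real model reply. It assumes that only the `user` and `assistant` roles belong in `messages`.

```python
from typing import Dict, List, Tuple

# Assumption: only these two roles appear inside the messages list.
ALLOWED_ROLES = {"user", "assistant"}


def build_messages(turns: List[Tuple[str, str]]) -> List[Dict[str, str]]:
    """Convert (role, text) pairs into the list-of-dictionaries shape."""
    messages = []
    for role, text in turns:
        if role not in ALLOWED_ROLES:
            raise ValueError(f"unsupported role: {role!r}")
        if not text.strip():
            raise ValueError("message content must not be empty")
        messages.append({"role": role, "content": text})
    return messages


history = build_messages([
    ("user", "Write the Julia code for the simple data analysis."),
    ("assistant", "(placeholder for the previous reply)"),
    ("user", "Now add a plot of the results."),
])
print(history[-1])
```

The resulting `history` list could then be passed as the `messages` argument in place of the single-item list used in the tutorial.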

Adding System Prompt

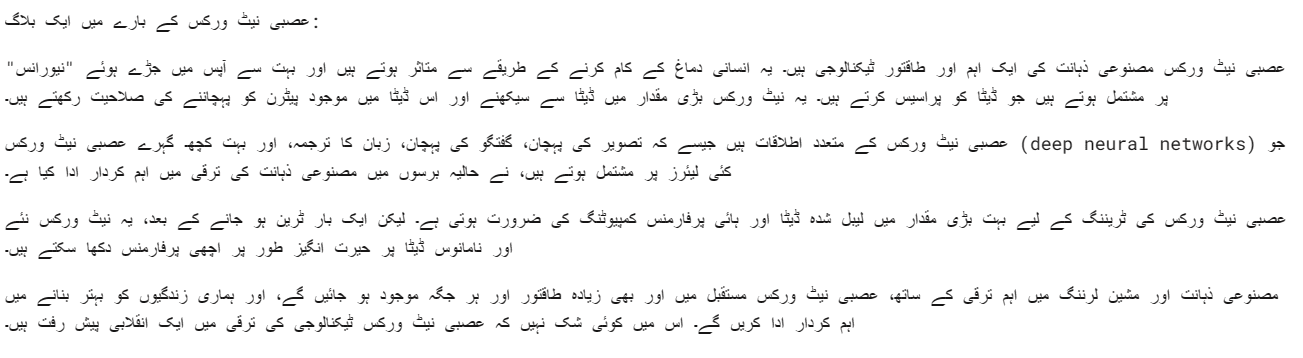

We can also provide a system prompt to customize the response. In our case, we are asking Claude 3 Opus to respond in Urdu.

```python
client = anthropic.Anthropic(
    api_key=os.environ["ANTHROPIC_API_KEY"],
)

Prompt = "Write a blog about neural networks."

message = client.messages.create(
    model="claude-3-opus-20240229",
    max_tokens=1024,
    # The system prompt is a separate argument, not an entry in messages.
    system="Respond only in Urdu.",
    messages=[
        {"role": "user", "content": Prompt}
    ],
)
Markdown(message.content[0].text)
```

The Opus model is quite good. I mean I can understand it quite clearly.

Claude 3 Async

Synchronous APIs execute API requests sequentially, blocking until a response is received before invoking the next call. Asynchronous APIs, on the other hand, allow multiple concurrent requests without blocking, making them more efficient and scalable.

- We have to create an Async Anthropic client.

- Create the main function with async.

- Generate the response using the await syntax.

- Run the main function using the await syntax.

```python
import asyncio

from anthropic import AsyncAnthropic

client = AsyncAnthropic(
    api_key=os.environ["ANTHROPIC_API_KEY"],
)


async def main() -> None:
    Prompt = "What is LLMOps and how do I start learning it?"

    message = await client.messages.create(
        max_tokens=1024,
        messages=[
            {
                "role": "user",
                "content": Prompt,
            }
        ],
        model="claude-3-opus-20240229",
    )
    display(Markdown(message.content[0].text))


# Top-level await works inside a Jupyter Notebook cell.
await main()
```

Note: If you are using async in a Jupyter Notebook, use `await main()` instead of `asyncio.run(main())`, because the notebook already runs its own event loop.
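The example above awaits a single request, so it does not yet show why async helps. The benefit appears when several requests are in flight at once. The following is a minimal sketch under stated assumptions: `fake_request` is a hypothetical stand-in that sleeps instead of calling the API, so the timings only illustrate the scheduling behavior and say nothing about real response times.

```python
import asyncio
import time
from typing import Any, Dict, List


async def fake_request(prompt: str, delay: float = 0.2) -> str:
    """Hypothetical stand-in for an awaited API call."""
    await asyncio.sleep(delay)  # simulates network latency
    return f"response to: {prompt}"


async def run_sequentially(prompts: List[str]) -> List[str]:
    # Each call waits for the previous one to finish.
    return [await fake_request(p) for p in prompts]


async def run_concurrently(prompts: List[str]) -> List[str]:
    # gather() starts all calls together and keeps the input order.
    return list(await asyncio.gather(*(fake_request(p) for p in prompts)))


async def compare(prompts: List[str]) -> Dict[str, Any]:
    """Time both strategies on the same prompts."""
    start = time.perf_counter()
    await run_sequentially(prompts)
    sequential = time.perf_counter() - start

    start = time.perf_counter()
    results = await run_concurrently(prompts)
    concurrent = time.perf_counter() - start

    return {"sequential": sequential, "concurrent": concurrent, "results": results}
```

In a script, this could be run with `asyncio.run(compare(["a", "b", "c"]))`; in a notebook, `await compare(["a", "b", "c"])` is the equivalent. With a real client, `fake_request` would be replaced by a coroutine that awaits `client.messages.create(...)`.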

Claude 3 Streaming

Streaming is an approach that enables processing the output of a Language Model as soon as it becomes available, without waiting for the complete response. This method minimizes the perceived latency by returning the output token by token, instead of all at once.

Instead of `messages.create`, we will use `messages.stream` for response streaming and use a loop to display multiple words from the response as soon as they are available.

```python
from anthropic import Anthropic

client = Anthropic(
    api_key=os.environ["ANTHROPIC_API_KEY"],
)

Prompt = "Write a mermaid code for typical MLOps workflow."

completion = client.messages.stream(
    max_tokens=1024,
    messages=[
        {
            "role": "user",
            "content": Prompt,
        }
    ],
    model="claude-3-opus-20240229",
)

# Print each piece of text as soon as it arrives.
with completion as stream:
    for text in stream.text_stream:
        print(text, end="", flush=True)
```

As we can see, the response starts appearing almost immediately instead of arriving all at once.
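The loop above only prints the text. If the complete response is also needed afterwards, the chunks can be collected while they are printed. The sketch below shows that pattern without calling the API: `fake_text_stream` is a hypothetical generator standing in for `stream.text_stream`, and the sample string is made up for illustration.

```python
from typing import Iterable, Iterator


def fake_text_stream(text: str, chunk_size: int = 8) -> Iterator[str]:
    """Hypothetical stand-in for stream.text_stream: yields small chunks."""
    for i in range(0, len(text), chunk_size):
        yield text[i:i + chunk_size]


def print_and_collect(chunks: Iterable[str]) -> str:
    """Print each chunk as it arrives and return the joined text."""
    parts = []
    for chunk in chunks:
        print(chunk, end="", flush=True)
        parts.append(chunk)
    print()  # finish the line once the stream ends
    return "".join(parts)


full_text = print_and_collect(
    fake_text_stream("graph TD; Data --> Train --> Deploy")
)
```

With the real client, `print_and_collect(stream.text_stream)` inside the `with` block should behave the same way, since `text_stream` is consumed by a plain `for` loop in the tutorial code.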

Claude 3 Streaming with Async

We can use an async function with streaming as well. Create the stream from an `AsyncAnthropic` client, then open it with `async with` and read it with `async for`.

```python
import asyncio

from anthropic import AsyncAnthropic

# With no arguments, the client reads ANTHROPIC_API_KEY from the environment.
client = AsyncAnthropic()


async def main() -> None:
    # Prompt is reused from the previous streaming example.
    completion = client.messages.stream(
        max_tokens=1024,
        messages=[
            {
                "role": "user",
                "content": Prompt,
            }
        ],
        model="claude-3-opus-20240229",
    )
    async with completion as stream:
        async for text in stream.text_stream:
            print(text, end="", flush=True)


await main()
```
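One reason to combine the two is that several streams can make progress at the same time. The sketch below illustrates this with a hypothetical async generator, `fake_async_text_stream`, standing in for the asynchronous `stream.text_stream`; no API call is made and the texts are placeholders.

```python
import asyncio
from typing import AsyncIterator, List


async def fake_async_text_stream(text: str, chunk_size: int = 6) -> AsyncIterator[str]:
    """Hypothetical stand-in for an async text stream."""
    for i in range(0, len(text), chunk_size):
        await asyncio.sleep(0.01)  # simulates waiting for the next chunk
        yield text[i:i + chunk_size]


async def collect(label: str, text: str) -> str:
    """Consume one stream with async for and return the labeled result."""
    parts: List[str] = []
    async for chunk in fake_async_text_stream(text):
        parts.append(chunk)
    return f"{label}: {''.join(parts)}"


async def stream_many() -> List[str]:
    # Both streams are consumed concurrently; gather() keeps the call order.
    return list(await asyncio.gather(
        collect("first", "alpha beta gamma"),
        collect("second", "one two three"),
    ))
```

As with the earlier async example, use `await stream_many()` in a notebook or `asyncio.run(stream_many())` in a script.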

Claude 3 Vision

Claude 3's vision capabilities have improved over earlier models. To get a response, you just have to provide the image to the Messages API as a base64-encoded string.

In this example, we will use photos of tulips (Image 1) and flamingos (Image 2) from Pexels.com and generate responses by asking questions about the images.

We will use the `httpx` library to fetch both images from pexels.com and convert them to base64 encoding.

```python
import anthropic
import base64
import httpx

client = anthropic.Anthropic()

media_type = "image/jpeg"

# Download each image and encode its bytes as a base64 string.
img_url_1 = "https://images.pexels.com/photos/20230232/pexels-photo-20230232/free-photo-of-tulips-in-a-vase-against-a-green-background.jpeg"
image_data_1 = base64.b64encode(httpx.get(img_url_1).content).decode("utf-8")

img_url_2 = "https://images.pexels.com/photos/20255306/pexels-photo-20255306/free-photo-of-flamingos-in-the-water.jpeg"
image_data_2 = base64.b64encode(httpx.get(img_url_2).content).decode("utf-8")
```

We provide base64-encoded images to the Messages API in image content blocks. Please follow the coding pattern shown below to successfully generate the response.

```python
message = client.messages.create(
    model="claude-3-opus-20240229",
    max_tokens=1024,
    messages=[
        {
            "role": "user",
            "content": [
                # The image block comes first, followed by the question.
                {
                    "type": "image",
                    "source": {
                        "type": "base64",
                        "media_type": media_type,
                        "data": image_data_1,
                    },
                },
                {
                    "type": "text",
                    "text": "Write a poem using this image."
                }
            ],
        }
    ],
)
Markdown(message.content[0].text)
```

We got a beautiful poem about the tulips.
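Because the image block has a fixed shape, it can be wrapped in a small helper to avoid repeating the nested dictionary. This is a sketch, not part of the `anthropic` package: `image_block` and `text_block` are hypothetical helper names, and the sample bytes are a placeholder rather than a real JPEG.

```python
import base64
from typing import Any, Dict


def image_block(image_bytes: bytes, media_type: str = "image/jpeg") -> Dict[str, Any]:
    """Build a base64 image content block from raw image bytes."""
    return {
        "type": "image",
        "source": {
            "type": "base64",
            "media_type": media_type,
            "data": base64.b64encode(image_bytes).decode("utf-8"),
        },
    }


def text_block(text: str) -> Dict[str, str]:
    """Build a text content block."""
    return {"type": "text", "text": text}


# Placeholder bytes stand in for image data read from disk or fetched over HTTP.
sample_bytes = b"\xff\xd8\xff\xe0 not a real image"
content = [image_block(sample_bytes), text_block("Write a poem using this image.")]
```

The `content` list has the same structure as the one passed in the `messages` argument above, so it could be used as the `"content"` value of a user message.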

Claude 3 Vision with Multiple Images

Let’s try passing multiple images in a single Claude 3 Messages API request, labeling each image with a short text block.

```python
message = client.messages.create(
    model="claude-3-opus-20240229",
    max_tokens=1024,
    messages=[
        {
            "role": "user",
            "content": [
                # Label each image so the prompt can refer to it.
                {
                    "type": "text",
                    "text": "Image 1:"
                },
                {
                    "type": "image",
                    "source": {
                        "type": "base64",
                        "media_type": media_type,
                        "data": image_data_1,
                    },
                },
                {
                    "type": "text",
                    "text": "Image 2:"
                },
                {
                    "type": "image",
                    "source": {
                        "type": "base64",
                        "media_type": media_type,
                        "data": image_data_2,
                    },
                },
                {
                    "type": "text",
                    "text": "Write a short story using these images."
                }
            ],
        }
    ],
)
Markdown(message.content[0].text)
```

We have a short story about a garden of tulips and flamingos.
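The label-then-image pattern above is repetitive, so for more than two images it may be easier to generate the content list in a loop. The following is a minimal sketch: `multi_image_content` is a hypothetical helper, and the base64 strings in the usage lines are placeholders rather than real images.

```python
from typing import Any, Dict, List


def multi_image_content(
    encoded_images: List[str],
    question: str,
    media_type: str = "image/jpeg",
) -> List[Dict[str, Any]]:
    """Interleave 'Image N:' labels with image blocks, then add the question."""
    content: List[Dict[str, Any]] = []
    for index, data in enumerate(encoded_images, start=1):
        content.append({"type": "text", "text": f"Image {index}:"})
        content.append({
            "type": "image",
            "source": {"type": "base64", "media_type": media_type, "data": data},
        })
    content.append({"type": "text", "text": question})
    return content


# Placeholder base64 strings; in the tutorial these would be image_data_1 and image_data_2.
blocks = multi_image_content(["aGVsbG8=", "d29ybGQ="], "Write a short story using these images.")
print([block["type"] for block in blocks])
```

The returned list matches the `"content"` structure used in the request above, with one label and one image block per input.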

If you're having trouble running the code, here's a Deepnote workspace where you can review and run the code yourself.

Conclusion

I think Claude 3 Opus is a promising model, though it may not be as fast as GPT-4 and Gemini. I believe paid users may have better speeds.

In this tutorial, we learned about the new model series from Anthropic called Claude 3, reviewed its benchmarks, and tested its vision capabilities. We also learned to generate simple, async, and streaming responses. It's too early to say if it's the best LLM out there, but if we look at the official benchmark results, we have a new king on the throne of AI.

Abid Ali Awan (@1abidaliawan) is a certified data scientist professional who loves building machine learning models. Currently, he is focusing on content creation and writing technical blogs on machine learning and data science technologies. Abid holds a Master's degree in Technology Management and a bachelor's degree in Telecommunication Engineering. His vision is to build an AI product using a graph neural network for students struggling with mental illness.
