Image by Freepik

AI development is moving faster than ever, especially in generative AI. Generating conversational text and producing images from text prompts are both possible now.

That progress has reached music generation as well, with Google introducing a music generation model called MusicLM. The model was released in January 2023, and people have been trying out its capabilities since then. So, what is MusicLM, and how can you try it out? Let’s discuss both.

Google MusicLM

MusicLM was first introduced in the paper by Agostinelli et al. (2023), where the research group describes it as a model that generates high-fidelity music from text descriptions. The model builds on AudioLM, and the experiments showed that it can produce several minutes of high-quality music at 24 kHz while still adhering to the text description.
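To get a feel for what "several minutes at 24 kHz" means in terms of raw data, here is a minimal arithmetic sketch. The mono, 16-bit PCM format is an assumption made for illustration only; the article does not state how MusicLM output is encoded, and the function names are hypothetical.

```python
SAMPLE_RATE_HZ = 24_000  # sampling rate mentioned for MusicLM output


def num_samples(duration_s: float, sample_rate_hz: int = SAMPLE_RATE_HZ) -> int:
    """Number of audio samples per channel for a clip of the given length."""
    return int(round(duration_s * sample_rate_hz))


def pcm16_size_bytes(duration_s: float,
                     sample_rate_hz: int = SAMPLE_RATE_HZ,
                     channels: int = 1) -> int:
    """Uncompressed size, ASSUMING 16-bit PCM (2 bytes per sample)."""
    return num_samples(duration_s, sample_rate_hz) * channels * 2


five_minutes_s = 5 * 60
print(num_samples(five_minutes_s))       # samples in a 5-minute mono clip
print(pcm16_size_bytes(five_minutes_s))  # bytes under the 16-bit assumption
```

Under these assumptions, a five-minute clip is 7.2 million samples, or roughly 14.4 MB uncompressed.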

Additionally, the research produced a public text-to-music dataset, MusicCaps, for anyone who wishes to develop a similar model or extend the research. The data is manually curated and hand-picked by professional musicians.
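If you obtain a copy of the dataset as a CSV file, browsing captions by keyword could look like the sketch below. The column names `ytid` and `caption` are assumptions about the file layout, the helper `find_captions` is hypothetical, and the two rows are made up for illustration rather than taken from MusicCaps.

```python
import csv
import io


def find_captions(rows, keyword, caption_field="caption"):
    """Return the rows whose caption contains the keyword (case-insensitive)."""
    keyword = keyword.lower()
    return [row for row in rows if keyword in row.get(caption_field, "").lower()]


# Made-up rows for illustration only; NOT real MusicCaps entries.
SAMPLE_CSV = """ytid,caption
example_id_1,A calm piano melody with soft strings
example_id_2,Fast techno beat with a heavy bass line
"""

rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))
matches = find_captions(rows, "piano")
for row in matches:
    print(row["ytid"], "->", row["caption"])
```

With a real file, you would replace `io.StringIO(SAMPLE_CSV)` with an opened file handle and adjust the column names to match.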

MusicLM was also developed following responsible model development practices, which matters to people who fear that music generation could lead to the misappropriation of creative content. Extending the work of Carlini et al. (2022), the researchers found that the tokens generated by MusicLM differ significantly from the training data.
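The general idea of comparing generated tokens against training tokens can be illustrated with a toy sketch. This is not the procedure used in the paper; it only assumes that both the generated continuation and the training continuation are available as sequences of integer tokens, and all function names are hypothetical.

```python
from typing import Iterable, Sequence, Tuple


def exact_match_fraction(pairs: Iterable[Tuple[Sequence[int], Sequence[int]]]) -> float:
    """Fraction of (generated, training) pairs that are token-for-token identical."""
    pairs = list(pairs)
    if not pairs:
        return 0.0
    matches = sum(list(gen) == list(ref) for gen, ref in pairs)
    return matches / len(pairs)


def token_overlap(generated: Sequence[int], reference: Sequence[int]) -> float:
    """Share of aligned positions where both sequences hold the same token."""
    n = min(len(generated), len(reference))
    if n == 0:
        return 0.0
    return sum(g == r for g, r in zip(generated, reference)) / n


# Toy token sequences, made up for illustration.
pairs = [
    ([1, 2, 3], [1, 2, 3]),  # identical: would count as memorized
    ([1, 2, 3], [1, 2, 4]),  # differs in the last token
]
print(exact_match_fraction(pairs))
print(token_overlap([1, 2, 3], [1, 2, 4]))
```

A low exact-match fraction on such a comparison is the kind of evidence that would support the claim that outputs are not copies of training examples.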

Trying Out MusicLM

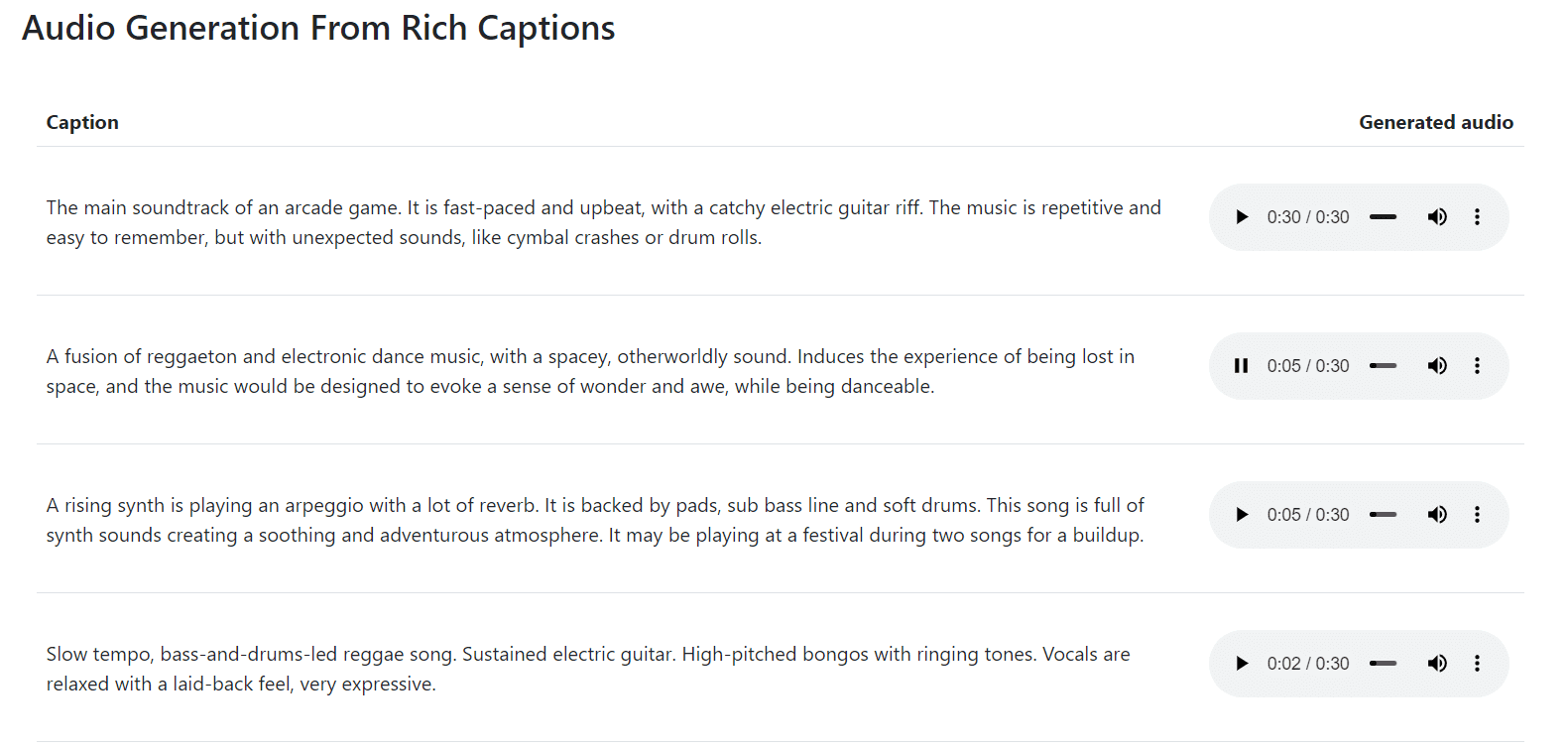

If you want to explore sample MusicLM results, the Google research group provides a simple website that shows what MusicLM can do. For example, you can listen to audio samples generated from text captions on the website.

Image by Author (Adapted from google-research.github.io)

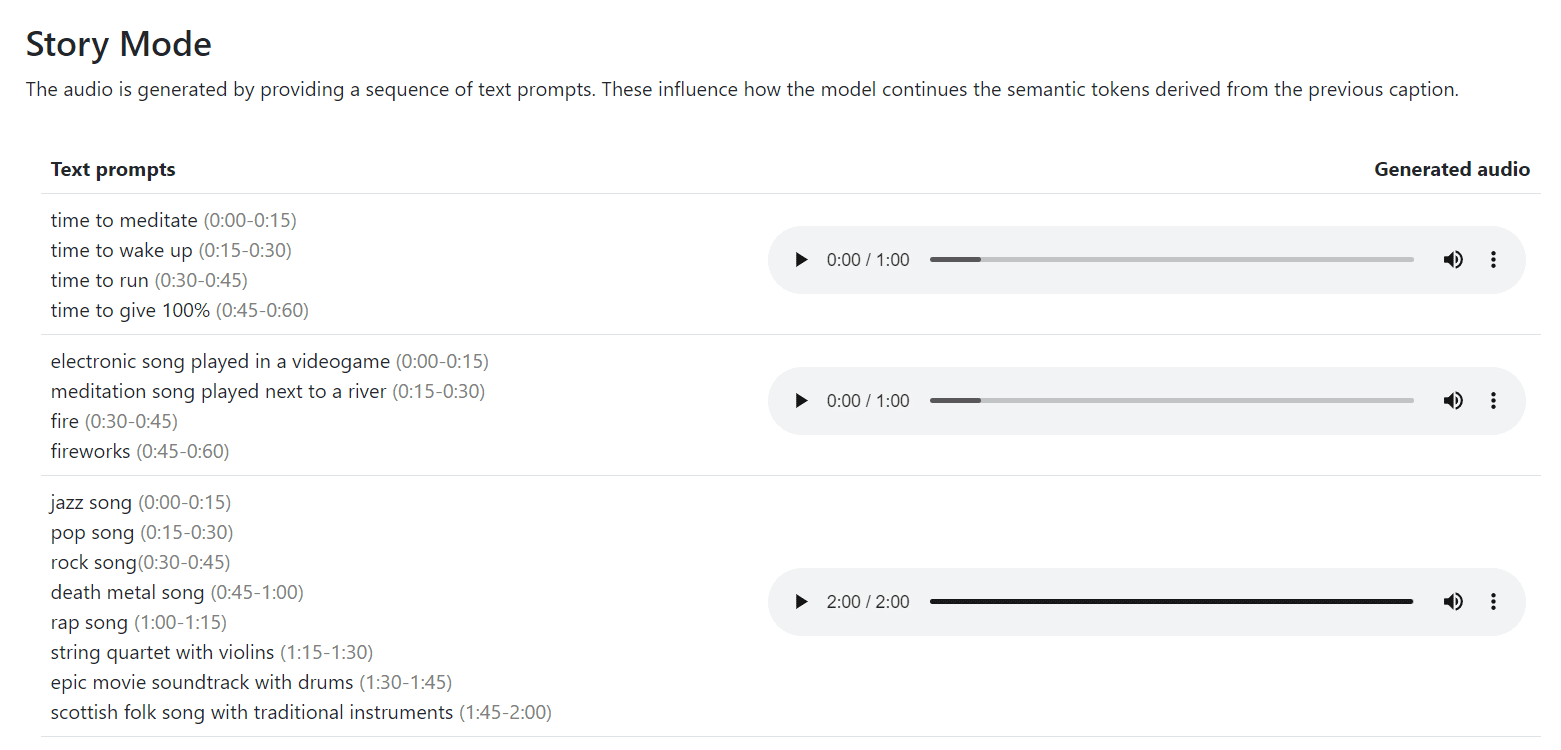

Another example, and my favorite, is the Story Mode music generation, where several text prompts are used to blend different styles of music into one piece.

Image by Author (Adapted from google-research.github.io)

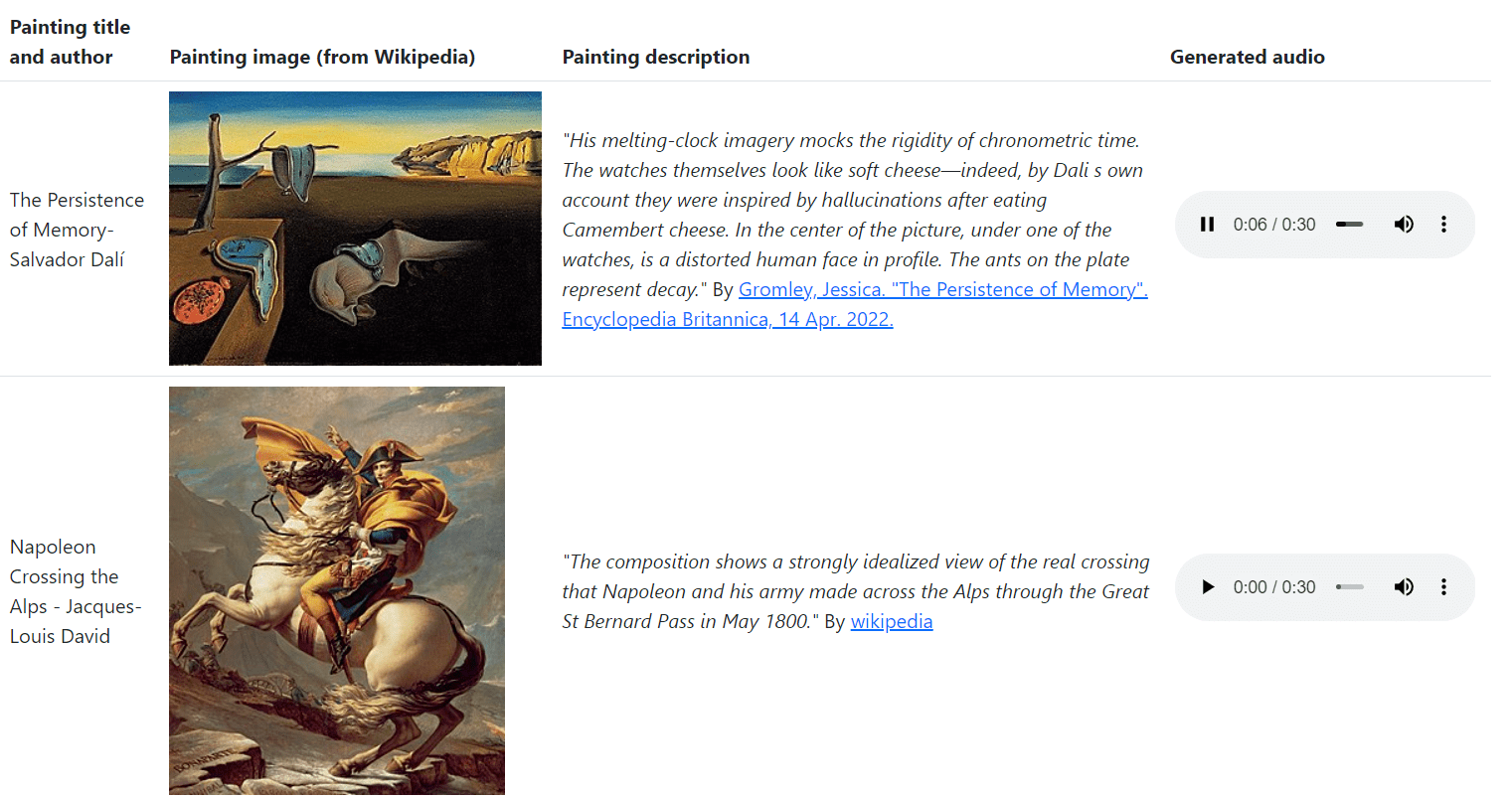

It’s also possible to generate music from a painting’s caption, which may capture the mood of the image.

Image by Author (Adapted from google-research.github.io)

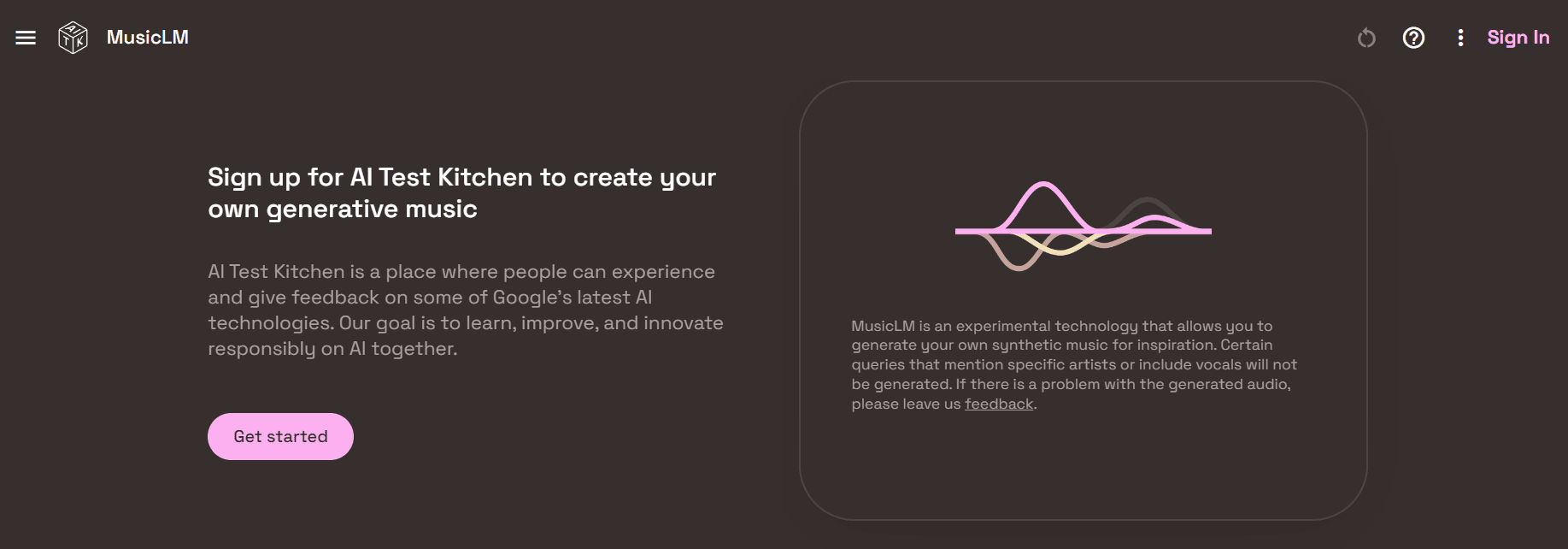

The results sound amazing, but how can we try the model? Luckily, Google has been accepting registrations to test MusicLM in the AI Test Kitchen since May 2023. Go to the website and sign up with your Google account.

Image by Author (Adapted from aitestkitchen)

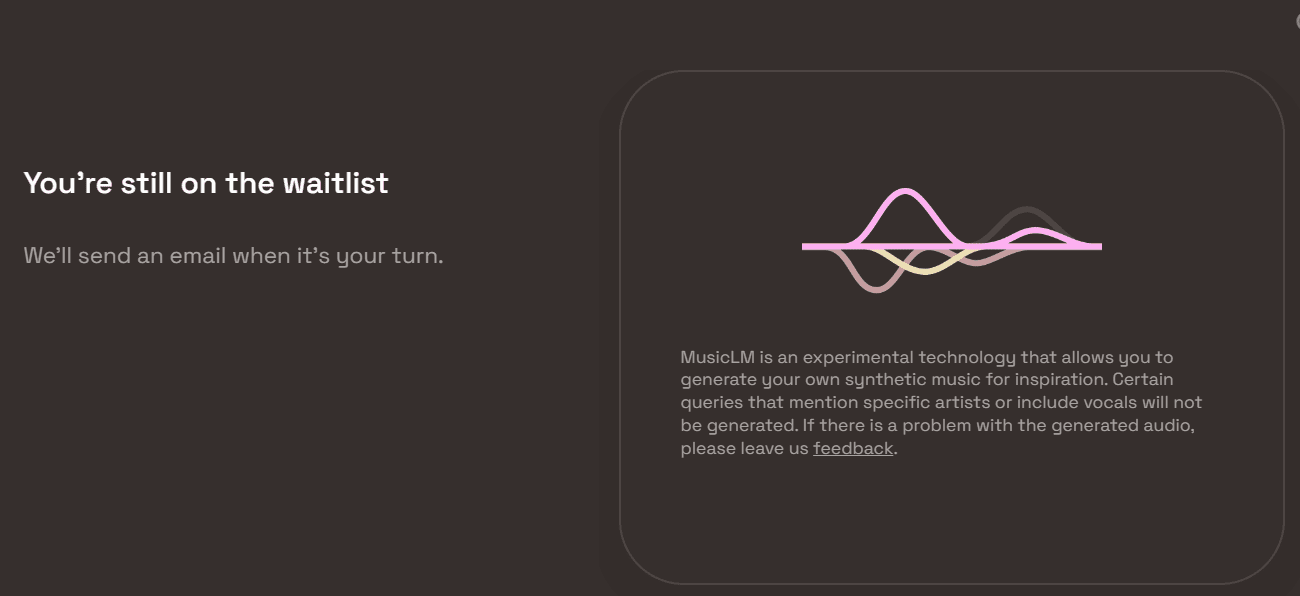

After registering, we need to wait for our turn to try out MusicLM. So, keep an eye on your email.

Image by Author (Adapted from aitestkitchen)

That is all for now; I hope you can get your turn soon to try out the exciting MusicLM.

Conclusion

MusicLM is a model by the Google Research group that generates music from text. The model can produce several minutes of high-quality music while following the text instructions. We can try out MusicLM by signing up for the AI Test Kitchen, or visit the Google Research website if we are only interested in the sample results.

Cornellius Yudha Wijaya is a data science assistant manager and data writer. While working full-time at Allianz Indonesia, he loves to share Python and data tips via social media and his writing.
