2024 ended with a bang for the HPC-AI market. SC24 had record attendance, the Top500 list had a formidable new number one with El Capitan at Lawrence Livermore National Laboratory, and the market for AI boomed, with hyperscale companies spending more than double their already lofty investments in 2023.

So why does it all feel so unstable? As we kick off 2025, the HPC-AI industry is at a tipping point. The ballooning AI market is dominating the conversation, with some people concerned it will take the air out of HPC, and others (or perhaps the same people) waiting for the AI bubble to pop. Meanwhile, political change is threatening the status quo, potentially altering the market dynamics of HPC-AI.

Intersect360 Research is forming its research calendar for the year, fueled by inputs from the HPC-AI Leadership Organization (HALO). As we lay out the surveys that will help us craft a new five-year forecast, here are five big questions facing the HPC-AI market for the back half of the decade.

1. How big can the AI market get?

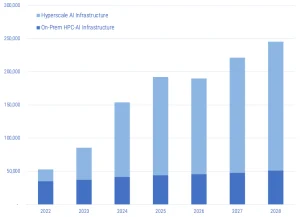

In our pre-SC24 webinar, Intersect360 Research made a dramatic adjustment to its 2024 HPC-AI market forecast, saying that we expected a second straight year of triple-digit growth in hyperscale AI, with more years of high growth rates ahead. We also increased the outlook for the blended, on-premises (non-hyperscale) HPC-AI market, but this relatively modest bump was dwarfed by the mammoth gains posted by hyperscale.

Revised HPC-AI Market Forecast ($M), Intersect360 Research, November 2024. (Source: Intersect360 Research)

AI has already dominated the conversation in data center infrastructure. At Hot Chips 2024, for example, the few presentations that didn’t focus explicitly on AI still made reference to it. Vendors are racing to embrace the seemingly boundless growth in the AI market.

The hyperscale AI market is predominantly consumer in nature, and there is precedent for it. Hyperscale hit its initial growth spurt by creating cloud data center markets out of consumer markets that did not previously rely on enterprise computing. Calendars, maps, video games, music, and movies used to exist offline, and social media is a category with no prior analog. AI is building on all these phenomena, and it is creating new ones.

No market is truly boundless, but the hyperscale segment is still groping for its ceiling. To take one example, Meta announced in its April 2024 earnings call that it was increasing its capital expenditures to $35 to $40 billion per year to accommodate its accelerated investment in AI infrastructure. Even after taking out a share of capex potentially not related to AI, that still leaves about $10 per user across Meta’s roughly 3.2 billion users worldwide on its various platforms (Facebook, Instagram, WhatsApp, and so on).

In that context, it makes sense that a hyperscale company might expect to earn an additional ten dollars of profit per user per year through the use of AI. To go higher, a company would need either more users or more expected value per user. Not many companies count more than a third of the world population as users. Can a single user’s personal data be worth twenty dollars more because of AI? A hundred?
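The per-user arithmetic above is simple enough to sketch. The snippet below spreads Meta’s stated $35 to $40 billion capex range across roughly 3.2 billion users. The article does not say how much capex is unrelated to AI, so the `non_ai_fraction` values are purely hypothetical, as are the function names.

```python
USERS = 3.2e9  # Meta's roughly 3.2 billion users, per the article


def capex_per_user(capex_usd: float, non_ai_fraction: float, users: float) -> float:
    """AI-related capex per user per year.

    non_ai_fraction is a hypothetical share of capex assumed unrelated to AI.
    """
    if not 0.0 <= non_ai_fraction < 1.0:
        raise ValueError("non_ai_fraction must be in [0, 1)")
    return capex_usd * (1.0 - non_ai_fraction) / users


def implied_annual_value(per_user_usd: float, users: float) -> float:
    """Total yearly value required if every user yields per_user_usd more."""
    return per_user_usd * users


for capex in (35e9, 40e9):
    for share in (0.0, 0.1, 0.2):  # hypothetical non-AI shares of capex
        print(f"capex ${capex / 1e9:.0f}B, non-AI {share:.0%}: "
              f"${capex_per_user(capex, share, USERS):.2f} per user")

for per_user in (10, 20, 100):
    total = implied_annual_value(per_user, USERS)
    print(f"${per_user} per user per year -> ${total / 1e9:.0f}B per year")
```

With no deduction, the range works out to roughly $11 to $12.50 per user; a hypothetical 20% non-AI share brings it to roughly $9 to $10. At 3.2 billion users, every extra dollar of value per user is worth $3.2 billion a year, so $20 per user implies $64 billion and $100 implies $320 billion.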

Beyond economics, the most cited limiting factor in hyperscale AI data center production is power consumption. AI data centers are being built hundreds of megawatts, even gigawatts, at a time. Companies are pursuing innovative solutions to find power to fuel these buildouts. Most notoriously, Microsoft signed a contract that will restart Unit 1 at Three Mile Island as the Crane Clean Energy Center; Three Mile Island is the Pennsylvania site that suffered a partial nuclear meltdown in 1979. (Unit 1 is independent from Unit 2, which suffered the accident, and Unit 1 continued operations thereafter.)

Hyperscale AI is therefore entwined with the notion of sustainability, and with whether it is globally responsible to consume so much power. But if spending tens of billions of dollars per year hasn’t been an impediment, then finding the power hasn’t been either, and hyperscale companies haven’t yet found the limit to how much power they can access and consume.

Perhaps the most amazing fact about all this growth in hyperscale AI is that it hasn’t been the focus of most data center conversations. Instead, we’re chasing the notion of “enterprise AI,” the promise of AI to revolutionize enterprise computing.

This revolution will undoubtedly happen. Just as personal computers, the internet, and the world wide web all revolutionized the enterprise, so will AI. The market opportunity for enterprise AI is bounded by the expected business result. For AI to be a worthwhile initiative, there are two paths: it can reduce costs, or it can bring in more revenue.

So far, most of the emphasis seems to be on cost optimization, such as through streamlined operations or (let’s face it) reduction of headcount. This investment is limited by a simple riddle: How much money will you spend to save one dollar? Even annualizing the payoff (dollars per year) gives a practical limit to how much it’s worth spending. Furthermore, there are diminishing returns to this path. If a company can spend two million dollars to save one million dollars per year, it’s unlikely it can repeat the trick, at the same level and with the same benefit, with the next two million.
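To make the diminishing-returns point concrete, here is a minimal sketch of simple (undiscounted) payback periods. It assumes, hypothetically, that each additional $2 million tranche saves half as much per year as the one before; the decay factor and function names are illustrative assumptions, not Intersect360 data.

```python
def payback_years(investment: float, annual_savings: float) -> float:
    """Simple payback period: years until cumulative savings cover the spend."""
    if annual_savings <= 0:
        return float("inf")
    return investment / annual_savings


def tranche_paybacks(investment: float, first_savings: float,
                     decay: float, tranches: int) -> list[float]:
    """Payback of each successive, equal-sized investment tranche.

    decay is a hypothetical factor: each tranche saves `decay` times
    what the previous tranche saved.
    """
    paybacks = []
    savings = first_savings
    for _ in range(tranches):
        paybacks.append(payback_years(investment, savings))
        savings *= decay
    return paybacks


# Spend $2M to save $1M per year, then assume each new $2M saves half as much.
print(tranche_paybacks(2e6, 1e6, decay=0.5, tranches=4))  # [2.0, 4.0, 8.0, 16.0]
```

The first tranche pays back in two years, matching the example in the text; under this assumed decay, the fourth needs 16 years, which is roughly where most budget holders would stop.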

As to increased revenue, there are two kinds: primary (creating more revenue overall) and secondary (taking share from a competitor). Let’s look at an airline as an example. By implementing AI, will the airline get more people to take flights? Will passengers, on average, spend more money per flight, specifically because of the airline’s AI? (Bonus question: If so, how does this affect consumer spending in other markets? Or do people just have more money?)

More likely, we’re looking at a competitive market share argument: More customers will choose Airline A over Airline B because of Airline A’s AI investment. Making a timely pivot in this case can be important. Amazon got its start as a bookseller. If Borders or Barnes and Noble had made an earlier investment in web commerce, Amazon might never have had its chance.

But this is a zero-sum game. If both Airline A and Airline B make the same investment in AI, and their respective revenues are unchanged, they have each spent the money for no gain. (This is a classic “prisoner’s dilemma” from game theory in microeconomics. In this simplified example, both airlines are better off if neither invests, but each is individually better off making the investment, regardless of what the other does.)
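The airline example can be written as a two-player game. In this sketch the payoffs are hypothetical units of profit: investing in AI costs 1, and an airline that invests while its rival holds back shifts 3 units of revenue from the rival to itself. Any numbers in which the share shift exceeds the cost produce the same structure.

```python
from itertools import product

INVEST, HOLD = "invest", "hold"


def payoff(me: str, rival: str, ai_cost: float = 1.0, share_shift: float = 3.0) -> float:
    """Hypothetical change in one airline's profit, given both airlines' choices."""
    result = 0.0
    if me == INVEST:
        result -= ai_cost          # every investor pays for its AI
        if rival == HOLD:
            result += share_shift  # customers defect from the rival
    elif rival == INVEST:
        result -= share_shift      # customers defect to the rival
    return result


def best_response(rival: str) -> str:
    """The choice that maximizes this airline's payoff for a fixed rival choice."""
    return max((INVEST, HOLD), key=lambda me: payoff(me, rival))


for a, b in product((INVEST, HOLD), repeat=2):
    print(f"A {a:>6}, B {b:>6}: A {payoff(a, b):+.0f}, B {payoff(b, a):+.0f}")

print(best_response(HOLD), best_response(INVEST))  # invest invest
```

Investing is the best response whatever the rival does, so both airlines invest and each ends at -1, worse than the 0 each would have had if neither had invested.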

Ultimately, those who build hardware, models, and services for AI are banking on a major enterprise migration. If AI goes the way of the web, then in ten years, a robust AI investment will simply be considered the cost of doing business, even if profitability hasn’t soared as a result. In this way, AI becomes a dominant part of the IT budget, but the budget itself is probably not much different quantitatively from IT budgets as they already existed.

2. Will hyperscale completely take over enterprise computing?

In the pursuit of enterprise AI, some hardware companies may be ambivalent as to whether the systems for AI wind up on-premises or in the cloud, but for the hyperscale community, everything (including AI) as-a-Service is the vision of the future. We’ve already seen the cloudification of consumer markets. With the extreme concentration of data at hyperscale, AI could be the lever that does the same for the enterprise.

Intersect360 Research has been forecasting an asymptote in cloud penetration of the HPC-AI market, at roughly one-quarter of total HPC budgets. The primary limiting factor is not any cloud barrier, but rather simple cost; for anyone who can hit a high enough utilization mark, it’s cheaper to buy than to rent. Furthermore, data gravity and sovereignty issues are pushing more organizations to lean on-premises. As one example, representatives from GEICO presented the company’s move away from cloud for its full range of applications, including HPC and AI, at the OCP Global Summit in September.
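The cost argument reduces to a break-even utilization. A minimal sketch, assuming a flat amortized annual cost to own a resource and a flat hourly cloud rate; both prices below are hypothetical placeholders, not market data. Above the break-even point owning is cheaper, and below it renting is.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760; ignores leap years


def breakeven_utilization(own_cost_per_year: float, cloud_rate_per_hour: float) -> float:
    """Fraction of the year a resource must be busy before owning beats renting.

    own_cost_per_year: amortized purchase price plus power, cooling, and staff.
    cloud_rate_per_hour: on-demand price, paid only for hours actually used.
    """
    return own_cost_per_year / (cloud_rate_per_hour * HOURS_PER_YEAR)


def cheaper_option(utilization: float, own_cost_per_year: float,
                   cloud_rate_per_hour: float) -> str:
    """Compare a year of ownership against renting for the hours actually used."""
    rent_cost = utilization * HOURS_PER_YEAR * cloud_rate_per_hour
    return "buy" if own_cost_per_year < rent_cost else "rent"


# Hypothetical figures: $20,000 per GPU-year to own versus $4 per GPU-hour to rent.
u = breakeven_utilization(20_000, 4.0)
print(f"break-even utilization: {u:.0%}")  # 57%
for util in (0.3, 0.6, 0.9):
    print(util, cheaper_option(util, 20_000, 4.0))
```

The model leaves out discounting, data-egress charges, and the data gravity and sovereignty factors noted above, any of which can move a real break-even point considerably.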

But what if cloud becomes the only choice? Over three-quarters of HPC-AI infrastructure, and of all data center infrastructure overall, is now consumed by the hyperscale market. The top hyperscale companies spend tens of billions of dollars per year; each of them is a market unto itself. Component and system manufacturers understandably prioritize them when it comes to product design and availability.

Those seeking solutions for HPC-AI may find that the latest technologies simply aren’t available, as hyperscalers are capable of consuming the entire supply of a particular product. Nvidia GPUs, the definitive magic gems that enable AI, are priced high and come with long wait times, when they are available at all. HPC-focused storage companies are similarly engaged in hyperscale AI deployments.

AI is capable of tipping the scales further in favor of cloud. If it does, it would push the on-premises market for HPC-AI technologies into decline. Traditional OEM enterprise product and solutions companies, such as HPE, Dell, Atos/Eviden, Fujitsu, Cisco, EMC, and NetApp, would compete for a smaller market. (Others, including Lenovo, Supermicro, and Penguin Solutions, have already embraced hybrid ODM-OEM business models in order to sell effectively to the high-growth hyperscale market.)

Fueled by AI, hyperscale companies have already grown well beyond levels forecast by Intersect360 Research. Historically, this level of market concentration has been unstable. Five years ago, in forecasting the hyperscale market, Intersect360 Research wrote, “Such market power is not unprecedented in the world’s economic history, but it has not previously been seen at this level in the information technology era.”

Hyperscale has grown much more since then. The global data center market is concentrated into a small number of buyers. If the trend continues, it will fundamentally disrupt how enterprise computing is bought and used, and the vision of everything-as-a-service may become a reality, whether buyers want it or not.

3. What effect will the new U.S. administration have on HPC-AI?

National sovereignty concerns around HPC-AI capabilities have been on the rise for years. The international HALO Advisory Committee recently cited “HPC nationalism” as a key issue impeding industry progress. There are independent initiatives for HPC-AI sovereignty, based on local technologies, in the U.S., China, the EU, the UK, Japan, and India. The New York Times reported [subscription required] that King Jigme Khesar Namgyel Wangchuck of Bhutan recently traveled to Nvidia’s headquarters in California to discuss the building of AI data centers.

President Trump is already accelerating this trend toward national independence. His political platform is one of American exceptionalism, and his first days in office signaled an intention to promote American greatness. Notably, Trump amplified the announcement of the Stargate Project, “a new company which intends to invest $500 billion over the next four years building new AI infrastructure for OpenAI in the United States.” Trump called Stargate “a new American company … that will create over 100,000 American jobs almost immediately.”

The Stargate Project can hardly be called a Trump achievement, as it was evidently already in the works before Trump took office. Furthermore, the investment does not come from the U.S. government. Two of the primary funders, SoftBank (Japan) and MGX (UAE), are non-American companies; MGX was established only recently by the Emirati government. But Trump may get credit for creating an environment that kept the data centers and the associated jobs in the U.S.

Trump seized on the announcement and tied it to his intended policies. “It will ensure the future of technology. What we want to do is we want to keep it in this country. China is a competitor, and others are competitors. We want it to be in this country, and we’re making it available,” Trump noted.

As to construction and power generation for Stargate, Trump vowed to make things easy. “I’m going to help a lot through emergency declarations, because we have an emergency. We have to get this stuff built,” he said. “They have to produce a lot of electricity, and we’ll make it possible for them to get that production done very easily, at their own plants, if they want.”

Coupled with other actions, such as his immediate withdrawal from the Paris Climate Accords, Trump sends a message that he wants American investment to race ahead, with urgency, regardless of outside factors, such as other countries’ sentiments or concern for the environment. He promises to clear hurdles for businesses, primarily through deregulation, and he will promote American energy production. All these actions should translate to a net increase in spending on HPC-AI technologies, not only by hyperscale companies, but also in key HPC commercial vertical markets, such as oil and gas exploration, manufacturing, and financial services.

Public sector spending is more doubtful. The new Department of Government Efficiency (DOGE), an unofficial advisory body operating outside the establishment and headed by Elon Musk, is specifically tasked with slashing government spending. Some supercomputing strongholds, such as the Office of Science within the U.S. Department of Energy, have traditionally held solid bipartisan support. Other government agencies, such as NASA, NSF, or NIH, may come under the microscope, or worse, the axe.

Consider the National Oceanic and Atmospheric Administration (NOAA), which operates under the U.S. Department of Commerce. Google announced last month that its GenCast ensemble AI model can provide “better forecasts of both day-to-day weather and extreme events than the top operational system, the European Centre for Medium-Range Weather Forecasts’ (ECMWF) ENS, up to 15 days in advance.” Within the next four years, would DOGE recommend downsizing (or eliminating) NOAA in favor of a private-sector AI contract?

What happens in the U.S. will naturally have effects abroad. The European Commission had already been concerned with establishing HPC-AI strategies that don’t rely on American or Chinese technologies. Anders Jensen, the executive director of the EuroHPC Joint Undertaking, said in an interview with Intersect360 Research senior analyst Steve Conway, “Sovereignty remains a key tenet in our procurements, as our newly acquired systems will increasingly rely on European technologies.” With the threat of U.S. tariffs and export restrictions rising, these efforts can only escalate.

China had already been working toward HPC-AI technology independence, and Chinese organizations have stopped submitting system benchmarks to the semiannual Top500 list. The coming years will likely see a U.S.-China “AI race” akin to the U.S.-USSR space race of the previous century. Other countries with smaller but still notable HPC-AI presences, such as Australia, Canada, Japan, Saudi Arabia, South Korea, or the UK, will be challenged to devise strategies to keep pace.

Returning to the U.S., it’s worth considering what “American leadership” means in this context. While the EU focuses on public sector investment and China has a unique model of state-controlled capitalism, American companies are neither owned nor controlled by the U.S. government. The world’s largest hyperscale organizations are headquartered in the U.S., but they are global companies that rely on foreign customers. Similarly, key technology suppliers, such as Nvidia, Intel, and AMD, are American companies that also sell their products abroad. Restricting the distribution of those products hurts the companies in question.

In a blog post, Ned Finkle, VP of Government Affairs at Nvidia, blasted the Biden administration’s “AI Diffusion” rule, issued in President Biden’s final days in office, as “unprecedented and misguided,” calling it a “regulatory morass” that “threatens to squander America’s hard-won technological advantage.” Considering these views, the Trump administration has a difficult needle to thread: promoting the use of world-leading American technologies for HPC-AI, such as Nvidia GPUs, while keeping control over other countries, particularly China, which the U.S. government considers to be competition.

4. Can anyone challenge Nvidia?

The opinion of Nvidia’s executive leadership matters, because when it comes to AI, Nvidia controls the critical technology, the GPU. GPUs were once confined to graphics, until Nvidia executed a masterful, decade-long campaign to establish the CUDA programming model that would bring GPUs to HPC. When it turned out that the GPU was a perfect fit for the neural network computing that would power machine learning, Nvidia was truly off to the races.

Nvidia now thoroughly dominates the AI market for GPUs and their associated software. Furthermore, Nvidia has pioneered a proprietary interconnect, NVLink, for networking GPUs and their memory into larger, high-speed systems. Through its 2020 acquisition of Mellanox, Nvidia assumed control of InfiniBand, the leading high-speed system-level interconnect for HPC-AI. And Nvidia has vertically integrated into full system architecture, with its Grace Hopper and Grace Blackwell “superchip” nodes and DGX SuperPOD infrastructure.

Most importantly, Nvidia is seeking to eliminate its reliance on external technology through the release of its own CPU, Grace. Nvidia Grace is an Arm-architecture CPU that complements Nvidia GPUs in Grace Hopper and Grace Blackwell deployments. While Nvidia has a commanding lead in GPUs, it is coming from behind in CPUs, which remain at the heart of the server.

The two most natural competitors to Nvidia, therefore, are the leading U.S.-based CPU vendors, Intel and AMD. Intel’s big advantage is in the CPU. Intel’s Xeon CPUs are still the leading choice for enterprise servers, and decades’ worth of legacy software is optimized for them. In mixed-workload environments serving both traditional scientific and engineering HPC codes and emerging AI workloads, the compatibility and performance of those CPUs is important.

This entrenched advantage gave Intel an inside lane for defending against the incursion of the GPU. To Intel’s credit, the company foresaw the threat and attempted to head it off. In the early days of CUDA, Intel announced its own computational GPU, codenamed Larrabee. The project was canceled less than two years after it was conceived, having never come to market.

Since that time, Intel has tried and failed at one accelerator project after another, including the Many Integrated Core (MIC) architecture, which became Intel Xeon Phi, a commercial flop both as an accelerator and as an integrated CPU. Intel’s latest GPU accelerator, codenamed Ponte Vecchio, suffered a series of delays and fell short of performance expectations in the Aurora supercomputer at Argonne National Laboratory.

Intel has now scrapped both Ponte Vecchio and a previously planned follow-on codenamed Rialto Bridge, so those looking for an Intel GPU are left waiting for a product called Falcon Shores and its successor, Jaguar Shores, though the future of all things Intel is cloudy in the wake of CEO Pat Gelsinger’s sudden retirement. Intel does currently offer a non-GPU AI accelerator, Intel Gaudi, which has not made significant inroads against Nvidia’s dominance.

Intel has retreated from other tentative forays outside its core CPU business. Intel developed Omni-Path Architecture to compete with InfiniBand as a high-end system interconnect for HPC. After only modest success, Intel backed away; Omni-Path was picked up off Intel’s scrap heap by Cornelis Networks, which now carries it forward. Intel, AMD, Cornelis Networks, and others are now part of the Ultra Ethernet Consortium, which seeks to enable high-performance Ethernet-based solutions capable of competing with Nvidia InfiniBand.

Conversely, AMD has had significant successes with both its AMD EPYC CPUs and AMD Instinct GPUs. Among the three major vendors, AMD was the first to market with both CPU and GPU connected in an integrated system. AMD continues to gain share in HPC-AI, drafting off its two most notable wins, the El Capitan supercomputer at Lawrence Livermore National Laboratory and the Frontier supercomputer at Oak Ridge National Laboratory, both led by HPE.

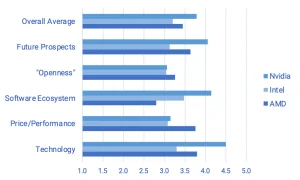

AMD’s weakness is the software ecosystem. In a 2023 Intersect360 Research survey, HPC-AI users rated AMD ahead of both Nvidia and Intel for the price/performance of GPUs. But Nvidia crushed Intel and especially AMD in perceptions of software ecosystem. (See chart.) Nvidia also led all user ratings in “technology” and “future prospects.”

HPC-AI User Ratings of Leading GPU Vendors

User perceptions on a five-point scale: 1 = “horrible”; 5 = “excellent”.

(Source: Intersect360 Research HPC-AI Technology Survey, 2023)

Naturally, buyers aren’t limited to Nvidia, Intel, and AMD as choices. Companies such as Cerebras, Groq, and SambaNova have all had notable wins with their accelerators for AI systems. But none of these is large enough to be a competitive market threat to Nvidia’s dominance. What could be a factor is if one of these companies, or one of their cohort, were acquired by a hyperscale company.

Nvidia is so far ahead in the AI game that the bigger threat to Nvidia (and possibly the only real threat) is a complete paradigm shift. Hyperscale companies have been Nvidia’s biggest customers. These companies are fully aware of their reliance on Nvidia GPUs, which are in fierce demand globally, and therefore expensive and often in short supply. Amazon, Google, and Microsoft are all designing their own CPUs or AI accelerators internally, either offering them to others through their cloud services or keeping them for their own exclusive use.

At the same time, Nvidia has invested in enabling a new breed of GPU-focused clouds. CoreWeave, Denvr DataWorks, Lambda Labs, and Nebius are only a few examples of cloud services that offer GPUs. Some of these are newcomers; others are converted bitcoin miners that now see greener pastures in AI.

This puts Nvidia in competition with its own customers on two fronts. First, Nvidia is designing full HPC-AI systems, in competition with server OEM companies like HPE, Dell, Lenovo, Supermicro, and Atos/Eviden, which bring Nvidia GPUs to market in their own configurations. Second, Nvidia is funding or otherwise enabling GPU clouds, in competition with its own hyperscale cloud customers, who themselves are designing processing elements, potentially reducing their future reliance on Nvidia.

If AI continues to grow, hyperscale continues to dominate, and constraints are removed from the U.S. market, then we are potentially left with a new competitive paradigm. By the end of the decade, the question may not be whether Intel or AMD can catch up to Nvidia, but rather, how Nvidia competes with Google, Microsoft, and Amazon.

Through this lens, the competitive field is wide open. For Project Stargate, which purports to have $500 billion to spend in the next four years, OpenAI allied with Oracle and Microsoft, with Nvidia as a primary technology partner. Last year, xAI, led by the aforementioned DOGE czar Elon Musk, moved into the top tier of hyperscale AI spending with the deployment of the Colossus AI supercomputer. Musk could make things even more interesting if he were to add to his stable of technologies by acquiring a company with a specialty AI inference processor.

5. What about good old HPC?

As the competitive dynamics continue to shift, the old-guard HPC crowd has understandably looked to the host of ways AI can be integrated with HPC, including notions such as AI-augmented HPC. Beyond simple tasks like code migration, AI can be deployed for HPC pre-processing (e.g., target reduction), post-processing (e.g., image recognition), optimization (e.g., dynamic mesh refinement), and even integration (e.g., computational steering). As AI thrives, we embrace a rosy view of a merged HPC-AI market.

This is a dream from which HPC needs a wake-up call. While AI does present these benefits to HPC, and more, it has also created a crisis.

At SC24, we rightfully celebrated El Capitan, our third exascale supercomputer and the most powerful in the world. And yet, we all knew we were kidding ourselves. Glenn Lockwood, formerly a high-performance storage expert at NERSC and now an AI architect for Microsoft Azure, showed in his traditional post-SC blog that Microsoft is “building out AI infrastructure at a pace of 5x Eagles (70,000 GPUs!) per month,” referring to the Microsoft Eagle supercomputer, currently number four on the Top500 list, behind the three DOE exascale systems. Microsoft, or another hyperscale company, could clearly post a bigger score if desired.

We are accustomed to thinking of these national lab supercomputers as world leaders that set the direction of development for the broader HPC and enterprise computing markets. That is no longer true. A $500 million, 30-megawatt supercomputer isn’t world-leading anymore. It’s not even an especially big order. A DOE supercomputer may still be critical for science, but going forward, the direction of the enterprise data center industry will be set by AI, not traditional supercomputing.

If that doesn’t sound important, it is. For as much as the HPC crowd has discussed the convergence of HPC and AI, we are now heading in the opposite direction, as the technologies and configurations that serve AI drift farther away from what scientific computing needs.

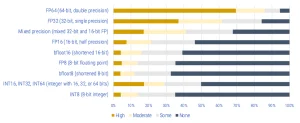

This is most evident in the discussion of precision. While HPC has relied on 64-bit, double-precision floating-point calculations, we have seen AI, particularly for inferencing, gravitate down through 32-bit single precision, mixed precision, and 16-bit half precision, and now into various combinations of “bfloats” and either floating point or integer at 8-bit, 6-bit, and even 4-bit precision. With some regularity, companies now advertise how many “AI flops” their processors or systems are capable of, with no definition in sight of what an “AI flop” represents. (This is as silly as having a contest to see who can eat the most cookies, with no boundaries or standards as to how small an individual cookie can be.)
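The gap between these formats is easy to see in a few lines of Python. This sketch uses the standard `struct` module, whose `'e'`, `'f'`, and `'d'` codes pack IEEE 754 half, single, and double precision, to round a running sum after every addition. It is an emulation rather than real low-precision hardware arithmetic, the helper names are hypothetical, and `struct` has no codes for bfloat16, FP8, FP6, or FP4, so those formats are not shown.

```python
import struct


def round_to(fmt: str, x: float) -> float:
    """Round a Python float (IEEE binary64) to another IEEE format and back.

    fmt: 'e' = binary16 (half), 'f' = binary32 (single), 'd' = binary64 (double).
    """
    return struct.unpack(fmt, struct.pack(fmt, x))[0]


def machine_epsilon(fmt: str) -> float:
    """Smallest power of two eps such that 1 + eps is distinguishable from 1."""
    eps = 1.0
    while round_to(fmt, 1.0 + eps / 2) != 1.0:
        eps /= 2
    return eps


def accumulate(fmt: str, value: float, n: int) -> float:
    """Add `value` n times, rounding the running sum to `fmt` after every step."""
    step = round_to(fmt, value)
    total = 0.0
    for _ in range(n):
        total = round_to(fmt, total + step)
    return total


for name, fmt in (("FP16", "e"), ("FP32", "f"), ("FP64", "d")):
    print(f"{name}: epsilon={machine_epsilon(fmt):.3e}, "
          f"sum of 0.1 x 10,000 = {accumulate(fmt, 0.1, 10_000)!r}")
```

Adding 0.1 ten thousand times should give 1,000. In FP16 the running sum stops growing at 256.0: once the spacing between representable numbers reaches 0.25, an increment of 0.1 rounds away to nothing. FP32 lands close to, but not exactly on, 1,000, and FP64 is off only in the far decimal places, which is the kind of headroom many scientific codes depend on.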

Some of this discussion of precision can benefit HPC. There are cases in which very expensive, high-precision calculations may be thrown at models that aren’t very precise to begin with. But in a 2024 Intersect360 Research survey of HPC-AI software, users clearly identified FP64 as the most important precision for their applications in the future. (See chart.)

Future Importance of Precision Levels for HPC-AI Applications

Weighted averages across 11 application domains

(Source: Intersect360 Research HPC-AI Software Survey, 2024)

If processor vendors are driven by AI, we may see FP64 slowly (or quickly) disappear from product roadmaps, or at the very least get less attention than the AI-driven lower-precision formats. Application domains with more reliance on high-precision calculations, such as chemistry, physics, and weather simulation, will have the biggest hurdles to overcome.

The balance of CPUs and GPUs is also different between traditional HPC and newer AI applications. Despite all Nvidia’s work with CUDA and software, most HPC applications don’t run well on more than two GPUs per node, and many applications still run best in CPU-only environments. Conversely, AI is often best run with high densities of GPUs, with eight or more per node. Furthermore, these AI nodes may be well served by CPUs with relatively low power consumption and high memory bandwidth, strengths of the Arm architecture embodied in Nvidia Grace CPUs.

The blended HPC-AI market is now full of server nodes with four GPUs per node, the most common configuration currently installed. This can work well in some scenarios, but in others, it can be the compromise that both sides hate equally: too many GPUs to be used effectively by HPC applications, not enough for AI workloads. For its newest supercomputer, MareNostrum 5, Barcelona Supercomputing Center (BSC) chose to specialize its nodes in different partitions, some with more GPUs per node, some with fewer. Composability technologies may also help in the future, allowing one node to use the GPUs from another. GigaIO and Liqid are two HPC-oriented companies pursuing system-level composability, but adoption to date is limited.

High-performance storage is getting hijacked as well. Companies we associate with HPC data management, such as DDN, VAST Data, VDURA (formerly Panasas), and Weka, are now growing at astounding rates, thanks to the applicability of their solutions to AI. Fortunately for HPC, this has not yet led to a major change in how high-performance storage is architected.

Ultimately, if the solutions that drive enterprise computing are changing, then HPC may have to change with them. If this sounds extreme, take comfort. It has happened before.

HPC has been at the tail end of a larger enterprise computing market for decades. Market forces drove the migration from vector processors to scalar, from Unix to Linux, and from RISC to x86. The last two came at the same time, thanks to the biggest transition of all, from symmetric multiprocessing (SMP) to clusters.

Clusters arrived in force in the late 1990s through the Beowulf project, which promoted the idea that large, high-performance systems could be built from industry-standard x86-Linux servers. These commodity systems were being lifted into prominence by a trend that had about as much hype and promise then as AI has today: the dawn of the world wide web.

Many a dyed-in-the-wool HPC nerd wrung his hands over clustering, claiming it wasn’t “real” HPC. It was capacity, not capability, people said. (The IDC HPC analyst team even built “Capacity HPC” and “Capability HPC” into its market methodology; this nomenclature persisted for years.) People complained that clustering wouldn’t suit bandwidth-constrained applications, that it would lead to low system utilization, and that it wouldn’t be worth the porting effort. These arguments are similar to those made about GPUs and lower precisions today.

Clusters won out, of course, though the transition took a decade, give or take. Clusters were industry-standard and cost-efficient. And once applications were taken through the (often painful) process of porting to MPI, they could be migrated easily between different vendors’ hardware. Like it or not, low-precision GPUs may just become the present-day analog. The job of the HPC engineer becomes not to design enterprise technologies, but rather to take advantage of the technologies at hand.

Some segments of HPC will face a bigger threat, or opportunity, depending on your perspective. If AI really does become as good at predicting outcomes as traditional simulations, there will be areas in which the AI approach truly does displace deterministic computing.

Take a classic HPC case like finite element analysis, used for crash simulation. Virtual crash simulations are faster and cheaper than physical tests. A car company can test more scenarios in less time, guiding development to optimal solutions. What if AI learned to do it as well, or better? Would we still run the deterministic application? After all, the virtual model was never a perfect representation of the physical car.

There are (hopefully) limits to how far this displacement can go. HPC is (or should be) a long-term market because we never reach the end of science, and as long as there is science to be done and problems to be solved, there is a role for HPC in solving them. AI is still a black box that doesn’t show its work. Science is a peer-reviewed process that relies on creative thinking. And at some point, scientists need to do the math. But across the full range of HPC applications, it’s worth considering where we must rely on precise calculations and where a good guess is good enough.

There are still promising HPC-focused technologies on the horizon. NextSilicon, for example, is bucking the trend by focusing on 64-bit computing for HPC applications. Driven by the need for non-American CPUs, both the EU and China are investing in the development of high-performance solutions based on the RISC-V architecture. And perhaps most exciting, there have been major recent developments in quantum computing from multiple vendors across the industry.

In many ways, 2025 is shaping up to be an inflection point that will set the direction for HPC-AI, not only for the rest of this decade, but for the decade beyond. At Intersect360 Research, our research calendar for the year will be tailored to bring clarity to these critical industry dynamics. HPC-AI users globally can help steer the conversation by joining HALO. We’re listening. We’ve got some big questions to answer.

This article first appeared on sister site HPCwire.