Image by Editor

New large language models (LLMs) seem to be released every week, with more and more chatbots for us to use. However, it can be hard to figure out which one is the best, how each is progressing, and which one is most useful.

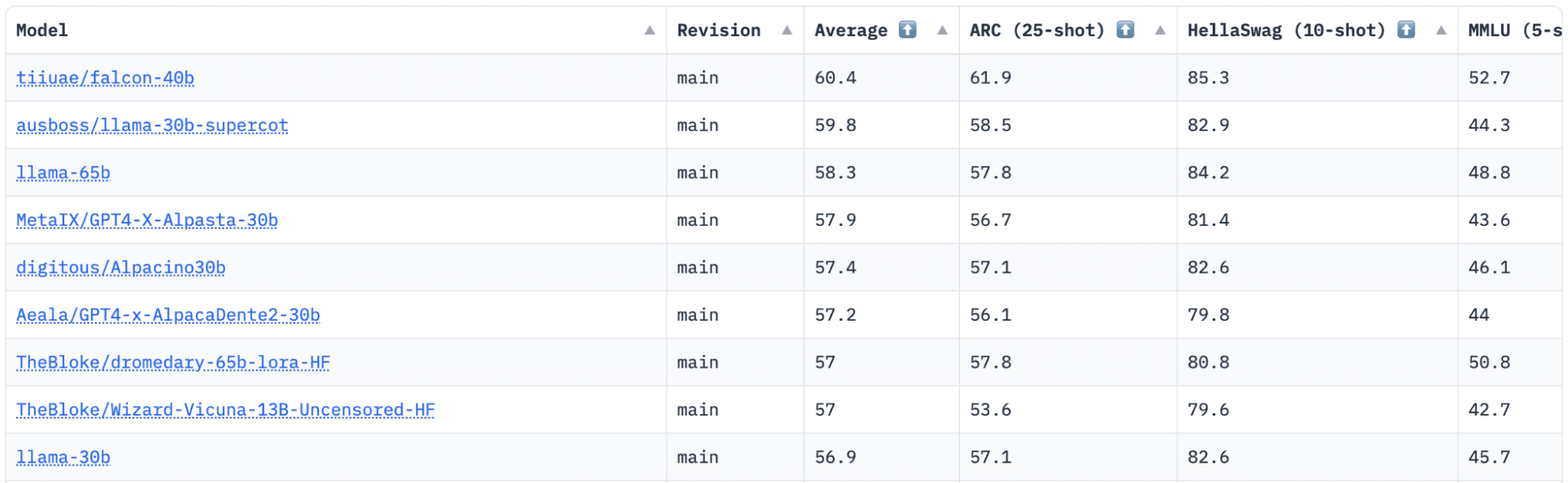

HuggingFace has an Open LLM Leaderboard which tracks, evaluates and ranks LLMs as they are released. It uses a single framework to test generative language models on a range of evaluation tasks.

Until recently, LLaMA (Large Language Model Meta AI) was at the top of the leaderboard. It has now been dethroned by a new pre-trained LLM: Falcon 40B.

Image by HuggingFace Open LLM Leaderboard

About Technology Innovation Institute

Falcon LLM was built by the Technology Innovation Institute (TII), a research institute that is part of the Abu Dhabi Government’s Advanced Technology Research Council. The government oversees technology research across the United Arab Emirates, and TII’s team of scientists, researchers and engineers focuses on delivering transformative technologies and discoveries in science.

What is Falcon 40B?

Falcon-40B is a foundational LLM with 40B parameters, trained on one trillion tokens. It is an autoregressive decoder-only model, which means that it is trained to predict the next token in a sequence given the previous tokens. The GPT family of models is a good example of this.
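To make next-token prediction concrete, here is a minimal sketch in Python. It uses a toy lookup table in place of a neural network, so the table `NEXT_TOKEN_PROBS` and the function `generate` are illustrative assumptions and not part of Falcon. Only the loop structure (predict one token from what came before, append it, repeat) reflects how an autoregressive model produces text. A real model conditions on the entire prefix, whereas this toy looks only at the last token.

```python
# Toy "model": for each previous token, a probability distribution over the next token.
# These values are made up for illustration; a real LLM computes them with a neural
# network that conditions on the whole prefix, not just the last token.
NEXT_TOKEN_PROBS = {
    "<bos>": {"the": 0.9, "a": 0.1},
    "the": {"falcon": 0.7, "giraffe": 0.3},
    "a": {"falcon": 0.5, "giraffe": 0.5},
    "falcon": {"flies": 0.8, "<eos>": 0.2},
    "giraffe": {"eats": 0.6, "<eos>": 0.4},
    "flies": {"<eos>": 1.0},
    "eats": {"<eos>": 1.0},
}


def generate(max_new_tokens=10):
    """Greedy autoregressive decoding: repeatedly append the most likely next token."""
    tokens = ["<bos>"]
    for _ in range(max_new_tokens):
        probs = NEXT_TOKEN_PROBS[tokens[-1]]
        next_token = max(probs, key=probs.get)  # greedy choice
        if next_token == "<eos>":  # the model signals the end of the sequence
            break
        tokens.append(next_token)
    return tokens[1:]  # drop the start-of-sequence marker


print(" ".join(generate()))  # the falcon flies
```

Sampling from the distribution instead of always taking the most likely token (as `do_sample=True` does in the Transformers library) would give different outputs on different runs.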

According to TII, Falcon significantly outperforms GPT-3 while using only 75% of the training compute budget, and it requires only one-fifth of the compute at inference time.
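A rough back-of-the-envelope check of the training-compute claim can be sketched with the common rule of thumb that training a dense transformer costs about 6 × parameters × tokens floating-point operations. The rule of thumb and the GPT-3 figures (175B parameters, roughly 300B training tokens) are assumptions brought in for illustration; TII may have computed its comparison differently.

```python
def training_flops(n_params, n_tokens):
    """Approximate training compute with the rule of thumb C ≈ 6 * N * D."""
    return 6 * n_params * n_tokens


# Falcon-40B figures come from the article; GPT-3 figures are assumptions
# (175B parameters, ~300B training tokens).
falcon_flops = training_flops(40e9, 1e12)
gpt3_flops = training_flops(175e9, 300e9)

ratio = falcon_flops / gpt3_flops
print(f"Falcon-40B: {falcon_flops:.2e} FLOPs")
print(f"GPT-3:      {gpt3_flops:.2e} FLOPs")
print(f"Ratio:      {ratio:.0%}")
```

Under these assumptions the ratio comes out at roughly 76%, close to the 75% figure quoted above. By the same kind of estimate, per-token inference cost scales with parameter count, and 40B / 175B is about 23%.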

Data quality at scale was an important focus of the team at the Technology Innovation Institute, as we know that LLMs are highly sensitive to the quality of training data. The team built a data pipeline which scaled to tens of thousands of CPU cores for fast processing and was able to extract high-quality content from the web using extensive filtering and deduplication.

There is also a smaller version, Falcon-7B, which has 7B parameters and was trained on 1,500B tokens. Falcon-40B-Instruct and Falcon-7B-Instruct models are also available if you are looking for a ready-to-use chat model.

What can Falcon 40B do?

Similar to other LLMs, Falcon 40B can:

- Generate creative content

- Solve complex problems

- Support customer service operations

- Power virtual assistants

- Translate between languages

- Perform sentiment analysis

- Reduce and automate “repetitive” work

- Help Emirati companies become more efficient

How was Falcon 40B trained?

Falcon 40B was trained on 1 trillion (1,000B) tokens of RefinedWeb, a massive English web dataset built by TII. Training used 384 GPUs on AWS over a period of two months.
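To get a feel for the scale, the figures above can be turned into rough totals. The 60-day duration is an assumption standing in for "two months", and the calculation ignores restarts, evaluation runs and idle time, so treat the results as order-of-magnitude estimates only.

```python
NUM_GPUS = 384               # from the article
TRAINING_DAYS = 60           # assumption: "two months" taken as 60 days
TOKENS = 1_000_000_000_000   # 1 trillion tokens, from the article

gpu_hours = NUM_GPUS * TRAINING_DAYS * 24
seconds = TRAINING_DAYS * 24 * 60 * 60
tokens_per_second = TOKENS / seconds
tokens_per_gpu_second = tokens_per_second / NUM_GPUS

print(f"GPU-hours:               {gpu_hours:,}")
print(f"Tokens/second (cluster): {tokens_per_second:,.0f}")
print(f"Tokens/second (per GPU): {tokens_per_gpu_second:,.0f}")
```

With these assumptions the run amounts to roughly 550,000 GPU-hours, or about 500 tokens processed per GPU per second.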

The pretraining data was collected from public data on the web, using CommonCrawl. The team went through a thorough filtering phase to remove machine-generated text and adult content, followed by deduplication, to assemble a pretraining dataset of nearly five trillion tokens.

Built on top of CommonCrawl, RefinedWeb has been shown to produce models that perform better than models trained on curated datasets. RefinedWeb is also multimodal-friendly.
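The two steps described above, filtering followed by deduplication, can be sketched on a small in-memory list of documents. This is not TII's pipeline: the `looks_low_quality` heuristic, its thresholds, and the exact-match hashing are simplifying assumptions chosen to keep the example short. Production pipelines typically use much richer filters and also fuzzy deduplication.

```python
import hashlib


def looks_low_quality(text, min_words=5, max_repeat_ratio=0.5):
    """Hypothetical quality heuristic: reject very short or highly repetitive text."""
    words = text.lower().split()
    if len(words) < min_words:
        return True
    # Share of words that repeat an earlier word in the same document.
    repeat_ratio = 1 - len(set(words)) / len(words)
    return repeat_ratio > max_repeat_ratio


def normalize(text):
    """Collapse whitespace and case so trivially different copies hash the same."""
    return " ".join(text.lower().split())


def filter_and_deduplicate(documents):
    """Keep the first copy of each document that passes the quality filter."""
    seen_hashes = set()
    kept = []
    for doc in documents:
        if looks_low_quality(doc):
            continue
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest in seen_hashes:
            continue  # exact duplicate (after normalization) of an earlier document
        seen_hashes.add(digest)
        kept.append(doc)
    return kept


docs = [
    "Falcon is a large language model trained on web data.",
    "falcon is a large   language model trained on web data.",  # duplicate after normalization
    "buy buy buy buy buy buy now",                              # highly repetitive
    "Too short.",                                               # too few words
    "Deduplication removes repeated documents from the corpus.",
]
for doc in filter_and_deduplicate(docs):
    print(doc)
```

Only the first and last documents survive: the second is a normalized duplicate of the first, and the third and fourth fail the quality heuristic.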

Once it was ready, Falcon was validated against open-source benchmarks such as EAI Harness, HELM, and BigBench.

Falcon LLM is Open-Source

TII has open-sourced Falcon LLM, making Falcon 40B and 7B more accessible to researchers and developers. The models are released under the Apache License Version 2.0.

The LLM, whose earlier license placed conditions on commercial use, is now open-source to cater to the global demand for inclusive access to AI. It is free of royalties and commercial use restrictions, as the UAE is committed to pushing the boundaries of AI and shaping the significant role it will play in the future.

TII aims to cultivate an ecosystem of collaboration, innovation, and knowledge sharing in the world of AI, and the Apache 2.0 license provides a secure and safe basis for open-source software.

How to Use Falcon-7B Instruct LLM

If you want to try out a smaller version of Falcon-40B that is better suited to generic instructions in the style of a chatbot, use Falcon-7B-Instruct.
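One practical reason to start with the 7B model is memory. As a rough sketch, the memory needed just to hold the weights is the parameter count times the bytes per parameter. The 2 bytes per parameter below assume 16-bit weights such as bfloat16, and the estimate leaves out activations and other runtime overhead, so actual usage will be higher.

```python
def weight_memory_gb(n_params, bytes_per_param=2):
    """Approximate memory for the model weights alone, in gigabytes (10^9 bytes)."""
    return n_params * bytes_per_param / 1e9


# Nominal parameter counts taken from the model names.
for name, n_params in [("Falcon-7B", 7e9), ("Falcon-40B", 40e9)]:
    print(f"{name}: ~{weight_memory_gb(n_params):.0f} GB of weights in 16-bit precision")
```

On this estimate the 7B model needs about 14 GB for its weights, while the 40B model needs about 80 GB, which usually means more than one GPU.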

So let’s get started…

If you haven’t already, install the following packages:

```
!pip install transformers
!pip install einops
!pip install accelerate
!pip install xformers
```

Once you have installed these packages, you can move on to running the code provided for Falcon-7B-Instruct:

```python
from transformers import AutoTokenizer, AutoModelForCausalLM
import transformers
import torch

model = "tiiuae/falcon-7b-instruct"

# Load the tokenizer that matches the model checkpoint.
tokenizer = AutoTokenizer.from_pretrained(model)

# Build a text-generation pipeline; device_map="auto" places the weights
# on the available devices.
pipeline = transformers.pipeline(
    "text-generation",
    model=model,
    tokenizer=tokenizer,
    torch_dtype=torch.bfloat16,
    trust_remote_code=True,
    device_map="auto",
)

# Sample one continuation of the prompt, up to 200 tokens in total.
sequences = pipeline(
    "Girafatron is obsessed with giraffes, the most glorious animal on the face of this Earth. Giraftron believes all other animals are irrelevant when compared to the glorious majesty of the giraffe.\nDaniel: Hello, Girafatron!\nGirafatron:",
    max_length=200,
    do_sample=True,
    top_k=10,
    num_return_sequences=1,
    eos_token_id=tokenizer.eos_token_id,
)

for seq in sequences:
    print(f"Result: {seq['generated_text']}")
```

Wrapping it up

At the time of writing, Falcon stands as the best open-source model on the leaderboard and has taken LLaMA’s crown. People are impressed by its strongly optimized architecture and its open-source license, and it is available in two sizes: 40B and 7B parameters.

Have you tried it? If you have, let us know in the comments what you think.

Nisha Arya is a Data Scientist, Freelance Technical Writer and Community Manager at KDnuggets. She is particularly interested in providing Data Science career advice or tutorials and theory based knowledge around Data Science. She also wishes to explore the different ways Artificial Intelligence is/can benefit the longevity of human life. A keen learner, seeking to broaden her tech knowledge and writing skills, whilst helping guide others.
