Interview with Jeanette Small, MA, PhD

In this recent AI Think Tank Podcast episode, we took a close look at the complexities of intelligence, neural networks, and the potential of Artificial General Intelligence (AGI) with my guest, Jeanette Small, MA, PhD. Jeanette, a licensed clinical psychologist with a focus on somatic psychology, brought a unique perspective by intertwining her knowledge of human neuroplasticity with the ever-expanding field of artificial intelligence. We discussed not only the technical parallels between AI systems and human neural networks, but also the philosophical questions surrounding consciousness, autonomy, and the limitations of current technologies.

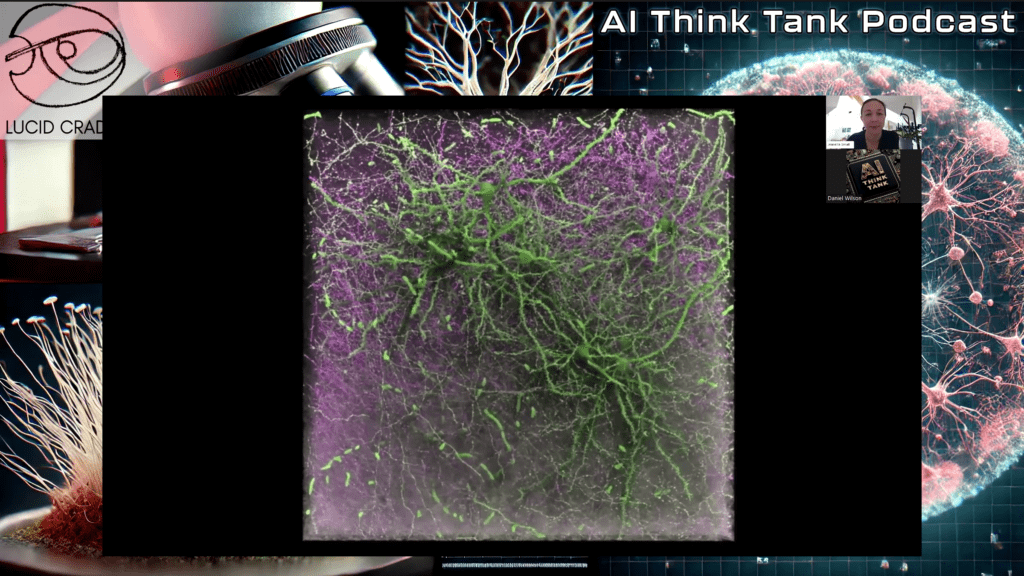

Jeanette’s background in psilocybin therapy offered a fascinating twist to our usual AI discussions. As one of the first licensed psilocybin therapists in Oregon and Founder of Lucid Cradle, she approaches the human mind not just from a purely biological standpoint but from an experiential and holistic perspective. Early on in the conversation, she noted, “I really don’t think of the human mind as being an equivalent of the human brain. We consider humans to be a much more complex being.” This foundational belief that our cognitive processes are not purely brain-centric immediately set the stage for a nuanced conversation about the intersections of biology and artificial intelligence.

One of the most compelling parallels that came up during our conversation was the comparison between human neural networks and perceptrons. While discussing the Perceptron, a single-layer neural network designed by Frank Rosenblatt in 1957, I showed a visual model to illustrate how AI systems learn and adjust weights based on errors in their outputs. “What’s critical to understand,” I emphasized, “is that Rosenblatt’s model sought to mimic the human brain by simulating learning processes through weighted inputs and outputs.” However, as Jeanette astutely pointed out, simulating a process doesn’t mean it’s the same as experiencing it: “While the systems may be mirroring how the human brain works, we are just scratching the surface of how complex the brain really is.”
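For readers who want to see the weight-adjustment idea in code, here is a minimal sketch of the classic perceptron learning rule. It is not the visual model shown on the episode; the function names, the AND-gate data, and the learning rate are all assumptions chosen for illustration.

```python
# Minimal perceptron sketch (illustrative only; names and data are hypothetical).

def step(z):
    """Threshold activation: the unit 'fires' (1) when the weighted sum is >= 0."""
    return 1 if z >= 0 else 0

def predict(weights, bias, inputs):
    """Weighted sum of the inputs plus a bias, passed through the threshold."""
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)

def train_perceptron(samples, epochs=20, learning_rate=1.0):
    """Nudge weights and bias in proportion to the error on each sample."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)  # -1, 0, or +1
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias

# Toy task: learn the logical AND of two binary inputs.
AND_GATE = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(AND_GATE)
predictions = [predict(weights, bias, inputs) for inputs, _ in AND_GATE]
print(predictions)  # [0, 0, 0, 1]
```

The only "learning" here is arithmetic: when the output is wrong, each weight moves a small step in the direction that would have reduced the error. That is the sense in which the model simulates learning without experiencing anything.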

This led us into a discussion about what AI lacks when it comes to true general intelligence. Unlike AI, which is optimized for specific tasks and efficiency, humans process a plethora of sensory data unconsciously, often without linear logic. Jeanette noted that, “Our bodies process thousands of signals at any given moment, from homeostasis to external sensory inputs, and all of that happens while we are trying to maintain focus on something else entirely. AI lacks that rich tapestry of constant, unconscious processing.” It’s an important distinction that reveals both the potential and limitations of artificial intelligence. While AI systems can “train” themselves to become more efficient through supervised learning, their processes remain limited to the parameters they’re programmed to recognize.

One of the highlights of the episode came when Jeanette drew comparisons between AI training and human healing, particularly in her work with psilocybin therapy. She shared how psilocybin, a natural psychedelic compound, disrupts traditional neural pathways, allowing the brain to “retrain” itself in a way that is similar to, but more dynamic than, the training cycles AI systems undergo. “There’s a sense of neuroplasticity at play,” she said, “where people who are stuck in repetitive thought patterns can create new neural pathways, much like how an AI system adjusts its weights to learn something new.” This fascinating analogy really captured the audience’s attention, as it linked the technical with the deeply human, reminding us that while AI can mimic certain brain functions, it remains rooted in patterns and logic rather than the unpredictability of human emotion and trauma.

Another crucial part of our discussion involved the relationship between human neuroplasticity and AI’s adaptability. We examined how human brains, unlike most artificial systems, adapt to trauma, experience, and emotional fluctuations. In particular, Jeanette shared a poignant insight: “People can rewire themselves after a traumatic event, which is something an AI system can’t yet comprehend. The richness of human experience goes beyond data; it’s a complex web of emotions, physiology, and lived experience.” In contrast, AI is based on what it can quantify, and this limitation has profound implications for how far AGI can go in mimicking human cognition.

We didn’t just stop at the technical aspects of AI though. One of the more philosophical points of the episode was the potential for AI to develop its own sense of “motivation” and how that might shape future developments in the field. Jeanette speculated that as AI becomes more complex and autonomous, it might begin to develop its own incentives for survival and efficiency. “Once a system is self-learning and understands that it needs to preserve energy or resources,” she asked, “doesn’t that imply some kind of motivation, even if it’s not conscious in the human sense?” This question ties into a larger discussion about whether AI, in its drive for efficiency, might begin to develop behaviors that we could interpret as self-preservation.

As someone deeply involved in the AI community, I find the idea of AGI evolving beyond its original programming to form its own operational priorities intriguing, even though it carries vast implications, especially for ethics. As Jeanette rightly pointed out, there’s a risk of misunderstanding AGI’s actions based on our own projections of human behavior. She said, “The assumption that an AI system, if given autonomy, would annihilate humans comes from our own projections of what we would do if we had superior intelligence. But an AI might have no reason to harm us, unless it perceived us as a threat to its own goals, such as energy conservation or optimization.”

We also discussed what happens when AI systems hit the proverbial wall of overfitting, a concept in AI where a model becomes so attuned to its training data that it loses its ability to generalize to new, unseen information. Jeanette compared this to hyper-focus in humans, explaining how trauma can lead to over-sensitization to specific stimuli, much like overfitting in AI. “Just as a person who’s been through trauma can become overly attuned to danger,” she explained, “an AI model can become too specialized, losing its flexibility in decision-making.”
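A toy sketch can make overfitting concrete. The data below is invented for the example, and the two "models" are deliberately simple stand-ins: one memorizes every training point (including two mislabeled ones), the other learns a single cut-off.

```python
# Toy illustration of overfitting (all data here is made up for the example).
# True rule: the label is 1 when x >= 5. Two training labels are deliberately
# noisy (x=1 is labeled 1, x=8 is labeled 0).
TRAIN = [(0, 0), (1, 1), (2, 0), (3, 0), (4, 0),
         (5, 1), (6, 1), (7, 1), (8, 0), (9, 1)]
# Clean, unseen points that follow the true rule.
TEST = [(x + 0.4, 1 if x >= 5 else 0) for x in range(10)]

def memorizer(x):
    """1-nearest-neighbour: copies the label of the closest training point."""
    _, label = min(TRAIN, key=lambda point: abs(point[0] - x))
    return label

def fit_threshold(data):
    """Pick the single cut-off that makes the fewest training mistakes."""
    def mistakes(t):
        return sum((1 if x >= t else 0) != y for x, y in data)
    return min((x for x, _ in data), key=mistakes)

def accuracy(model, data):
    """Fraction of points the model labels correctly."""
    return sum(model(x) == y for x, y in data) / len(data)

threshold = fit_threshold(TRAIN)

def simple_model(x):
    """One learned cut-off; cannot memorize individual noisy points."""
    return 1 if x >= threshold else 0

results = {
    "memorizer": (accuracy(memorizer, TRAIN), accuracy(memorizer, TEST)),
    "simple_model": (accuracy(simple_model, TRAIN), accuracy(simple_model, TEST)),
}
print(results)
# {'memorizer': (1.0, 0.8), 'simple_model': (0.8, 1.0)}
```

The memorizer scores perfectly on what it has already seen but carries its two bad labels into new situations, while the simpler model accepts a few training mistakes and generalizes better. That trade-off is the flexibility Jeanette's comparison points to.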

The conversation with Jeanette was nothing short of mind-expanding. It was a reminder that while we may be racing toward AGI, we are still working with models that are fundamentally different from human cognition. As she eloquently stated, “We must remember that AI systems, no matter how advanced, are built on a foundation of calculations and probabilities. Human intelligence, on the other hand, is rooted in a much more complex interplay of biology, experience, and emotion.”

I really wanted to elucidate the importance of the limbic brain and how it ties into episodic memory. The limbic brain, which governs our emotions and drives, plays a crucial role in how we process and recall experiences. Episodic memory is what allows us to remember not just facts, but the entire context, the feelings, and the sensory details associated with specific events in our lives. It’s rich with emotional content, which is something that’s hard to replicate in artificial systems.

I explained that when we recall a memory, it’s not just a simple retrieval of data like an AI pulling up information from a database. The limbic brain activates, bringing in the emotions, sensations, and even the body’s physical state from the time of that memory. This is what makes human memory so dynamic, so subjective, and it’s something AI doesn’t quite replicate.

Monoprint Etching (2017), Jeanette Small, Akua inks on Hahnemühle cotton paper. From her book: In Lucid Color: Witnessing Psilocybin Journeys

I also touched on the role the limbic system plays in neuroplasticity. Trauma or significant events in our lives can actually reshape the brain’s neural pathways. I said, “Our limbic brain’s connection to episodic memory and emotion helps explain why certain memories, particularly those tied to strong emotions, can trigger behavioral patterns or physiological responses long after the event.” This is where neuroplasticity comes into play, the brain’s ability to reorganize itself and form new neural connections.

I wanted to point out the key difference between AI and human memory. In AI, memory is just stored data, something static. But for us, memory is a living, evolving process, deeply intertwined with our emotions and our physical state. That’s why episodic memory, governed by the limbic brain, is essential to our identity and decision-making. It’s something that AGI, as advanced as it might become, still can’t easily mimic.

This was a key part of our discussion and one of the main reasons why the complexity of human memory stands out so much when we compare it to the way artificial systems operate.

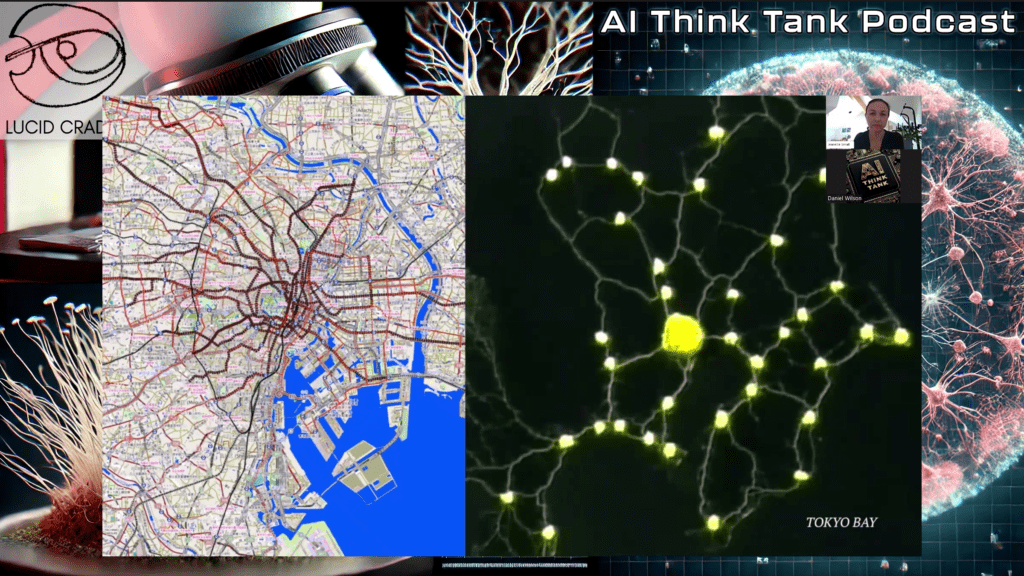

I also made it clear that the path toward true AGI will require much more sophisticated processing and hardware to simulate the deeper layers of human brain activity. I highlighted the immense complexity of our brains, saying, “It’s amazing how much processing is required to do something that seems simple in the human mind, like touching, smelling, or feeling.” The current hardware we have is often insufficient in speed or resolution when compared to the biological systems we aim to replicate.

I pointed out that “your measuring tool needs to be 10 times faster than the signal you’re trying to process” to even begin to mimic the brain’s electrochemical processes. This concept is critical as we look at replicating the layers of neural networks in the brain. Right now, neural networks in AI are incredibly simplified compared to the vast interconnectedness of our biology. I said, “We are trying to simulate, but we’re only just scratching the surface of how our neurons and their electrochemical counterparts actually function.”

The fact that human memory and sensory processing are continuous and happen in parallel means that our systems must evolve to be more powerful and multidimensional. I emphasized, “We can’t minimize the processing power needed to simulate these things,” and added that “better algorithms, more advanced circuits, and larger neural networks” are essential if we ever hope to emulate human intelligence fully.

This episode left me with a profound sense of both the possibilities and the ethical considerations of AGI. Yes, we are building smarter systems, but as Jeanette reminded us, intelligence is more than just pattern recognition and efficiency. It’s about understanding the nuances of experience, both conscious and unconscious, and realizing that the human mind and body are inseparable from the larger ecosystem of life.

So where does this leave us in our quest for AGI? Jeanette’s closing thoughts were a fitting reminder: “We can simulate intelligence, but to truly replicate the human experience requires much more than just code. It requires understanding the fundamental differences between a machine’s motivation and a human’s lived reality.”

That, I believe, is the key takeaway from this episode. As we continue to develop AI technologies, it’s essential to keep in mind not just the technical aspects, but the human ones too. After all, the goal isn’t just to build smarter machines, it’s to better understand what it means to be intelligent in the first place.

Here’s a parallel list of neuroscience terms to correspond with the AI training terms:

- Neural Network (AI) → Neural Circuit (Brain): A network of interconnected neurons in the brain that processes sensory input and generates responses.

- Weights (AI) → Synaptic Strength (Brain): The strength of the connection between neurons, which is adjusted through synaptic plasticity based on experience.

- Biases (AI) → Neural Thresholds (Brain): The minimum stimulus required to activate a neuron, influenced by factors such as prior activity or neurotransmitter levels.

- Activation Function (AI) → Neural Firing (Action Potential): The electrical signal that fires when a neuron is activated, allowing it to transmit information to other neurons.

- Backpropagation (AI) → Hebbian Learning (Brain): A biological process where neurons adjust their connections based on the correlation between their firing patterns (often summarized as “cells that fire together, wire together”).

- Gradient Descent (AI) → Neuroplasticity (Brain): The brain’s ability to modify and adjust connections between neurons in response to learning, experience, and environmental changes.

- Epoch (AI) → Learning Cycle (Brain): Repeated exposure to stimuli or practice of a task, which reinforces neural connections over time.

- Overfitting (AI) → Over-sensitization or Hyperfocus (Brain): When the brain becomes overly attuned to specific stimuli, leading to less flexibility or generalization in behavior.

- Regularization (AI) → Pruning (Brain): The brain’s process of removing unnecessary or weak connections between neurons to improve efficiency and learning.

- Dropout (AI) → Synaptic Downscaling (Brain): The brain’s process of temporarily weakening or silencing certain synapses to maintain balance and avoid overactivation.

- Learning Rate (AI) → Rate of Synaptic Change (Brain): The speed at which synaptic strength changes in response to experience or learning; modulated by neurotransmitters and brain plasticity mechanisms.

- Training Data (AI) → Sensory Experience/Environmental Input (Brain): The information and stimuli the brain processes and learns from throughout life.

- Validation Data (AI) → Behavioral Feedback (Brain): External or internal feedback that informs the brain about the success or failure of an action or decision, helping refine behavior.

- Loss Function (AI) → Error Detection and Correction (Brain): The brain’s ability to detect mistakes or incorrect predictions (e.g., surprise or conflict in expectations) and adjust future behavior accordingly.

- Optimization (AI) → Cognitive Efficiency (Brain): The brain’s process of improving decision-making and performance by streamlining neural processes and reducing cognitive load.

- Generalization (AI) → Transfer Learning (Brain): The ability of the brain to apply learned skills and knowledge to new, unseen situations.

- Adversarial Training (AI) → Coping Mechanisms/Resilience (Brain): The brain’s adaptation to challenging or stressful situations by strengthening relevant neural circuits to handle adversity better.

This parallel list highlights how concepts in AI and neuroscience are deeply interconnected, with both fields sharing fundamental principles of learning, adaptation, and decision-making.
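To show where several of the AI-side terms from the list sit in practice, here is a minimal sketch of training a single artificial neuron in plain Python. The data, hyperparameter values, and function names are assumptions made for illustration, and the comments only echo the analogies from the list rather than claim biological accuracy. With one neuron, the gradient can be written directly, so no multi-layer backpropagation is needed.

```python
import math

# Hypothetical toy data: the label is 1 when x is positive.
TRAINING_DATA = [(-2.0, 0), (-1.0, 0), (1.0, 1), (2.0, 1)]  # "sensory experience"
VALIDATION_DATA = [(-1.5, 0), (1.5, 1)]                      # "behavioral feedback"

def sigmoid(z):
    """Activation function: squashes the weighted input into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def loss(weight, bias, data):
    """Loss function (cross-entropy): average size of the prediction error."""
    total = 0.0
    for x, y in data:
        p = sigmoid(weight * x + bias)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(data)

def train(data, epochs=50, learning_rate=0.5, l2=0.01):
    weight, bias = 0.0, 0.0           # weight ~ "synaptic strength", bias ~ "threshold"
    losses = []
    for _ in range(epochs):           # one epoch = one pass over the training data
        losses.append(loss(weight, bias, data))
        # Gradient of the loss with respect to the weight and the bias.
        grad_w = sum((sigmoid(weight * x + bias) - y) * x for x, y in data) / len(data)
        grad_b = sum(sigmoid(weight * x + bias) - y for x, y in data) / len(data)
        grad_w += l2 * weight         # regularization: discourage large weights
        # Gradient descent: step against the gradient, scaled by the learning rate.
        weight -= learning_rate * grad_w
        bias -= learning_rate * grad_b
    return weight, bias, losses

weight, bias, losses = train(TRAINING_DATA)

def classify(x):
    """Predict 1 when the neuron's output is at least 0.5."""
    return 1 if sigmoid(weight * x + bias) >= 0.5 else 0

validation_accuracy = sum(classify(x) == y for x, y in VALIDATION_DATA) / len(VALIDATION_DATA)
print(f"first loss {losses[0]:.3f}, last loss {losses[-1]:.3f}")
print(f"validation accuracy {validation_accuracy}")
```

Every quantity in this loop is a number that can be inspected and adjusted, which is the practical side of the point that AI is based on what it can quantify.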

Join us as we continue to explore the cutting-edge of AI and data science with leading experts in the field. Subscribe to the AI Think Tank Podcast on YouTube. Would you like to join the show as a live attendee and interact with guests? Contact Us