
With the rising interest in natural language processing, more and more practitioners are hitting the wall not because they can’t build or fine-tune LLMs, but because their data is messy!

We will show simple yet very effective coding procedures for fixing noisy labels in text data. We will deal with two common scenarios in real-world text data:

- Having a category that contains mixed examples from a few other categories. I love to call this kind of category a meta category.

- Having 2 or more categories that should be merged into 1 category because texts belonging to them refer to the same topic.

We will use the ITSM (IT Service Management) dataset created for this tutorial (CC0 license). It’s available on Kaggle at the link below:

https://www.kaggle.com/datasets/nikolagreb/small-itsm-dataset

It’s time to start with the import of all libraries needed and basic data examination. Brace yourself, code is coming!

Import and Data Examination

```python
import pandas as pd
import numpy as np
import string
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.naive_bayes import ComplementNB
from sklearn.pipeline import make_pipeline
from sklearn.model_selection import train_test_split
from sklearn import metrics

df = pd.read_excel("ITSM_data.xlsx")
df.info()
```

```
RangeIndex: 118 entries, 0 to 117
Data columns (total 7 columns):
 #   Column                 Non-Null Count  Dtype
---  ------                 --------------  -----
 0   ID_request             118 non-null    int64
 1   Text                   117 non-null    object
 2   Category               115 non-null    object
 3   Solution               115 non-null    object
 4   Date_request_recieved  118 non-null    datetime64[ns]
 5   Date_request_solved    118 non-null    datetime64[ns]
 6   ID_agent               118 non-null    int64
dtypes: datetime64[ns](2), int64(2), object(3)
memory usage: 6.6+ KB
```

Each row represents one entry in the ITSM database. We will try to predict a ticket's category from the text the user wrote. Let’s take a closer look at the fields that matter most for this business use case.

```python
# Sample whole rows so each text stays paired with its own category
for _, row in df.sample(3, random_state=2).iterrows():
    print("TEXT:")
    print(row.Text)
    print("CATEGORY:")
    print(row.Category)
    print("-" * 100)
```

```
TEXT:
I just want to talk to an agent, there are too many problems on my pc to be explained in one ticket. Please call me when you see this, whoever you are. (talk to agent)
CATEGORY:
Asana
----------------------------------------------------------------------------------------------------
TEXT:
Asana funktionierte nicht mehr, nachdem ich meinen Laptop neu gestartet hatte. Bitte helfen Sie.
CATEGORY:
Help Needed
----------------------------------------------------------------------------------------------------
TEXT:
My mail stopped to work after I updated Windows.
CATEGORY:
Outlook
----------------------------------------------------------------------------------------------------
```

The first two tickets already show inconsistent labels. The second ticket, written in German (“Asana stopped working after I restarted my laptop. Please help.”), is clearly about Asana but is labeled Help Needed, while the first one, a generic request to talk to an agent, is labeled Asana. This is the starting distribution of our categories:

```python
df.Category.value_counts(normalize=True, dropna=False).mul(100).round(1).astype(str) + "%"
```

```
Outlook             19.1%
Discord             13.9%
CRM                 12.2%
Internet Browser    10.4%
Mail                 9.6%
Keyboard             9.6%
Asana                8.7%
Mouse                8.7%
Help Needed          7.8%
Name: Category, dtype: object
```

The Help Needed category looks suspicious: it may contain tickets that belong to several other categories. Also, the Outlook and Mail categories sound similar, so maybe they should be merged into one. Before diving deeper into these categories, we will drop rows with missing values in the columns we care about.

```python
important_columns = ["Text", "Category"]
# Drop rows where either column of interest is missing, then renumber the index
df = df.dropna(subset=important_columns).reset_index(drop=True)
```

Assigning Tickets to the Proper Category

There is no substitute for examining data with your own eyes. A handy pandas function for this is .sample(), so we will use it once more, now for the suspicious category:

```python
meta = df[df.Category == "Help Needed"]
for text in meta.Text.sample(5, random_state=2):
    print(text)
    print("-" * 100)
```

```
Discord emojis aren't available to me, I would like to have this option enabled like other team members have.
----------------------------------------------------------------------------------------------------
Bitte reparieren Sie mein Hubspot CRM. Seit gestern funktioniert es nicht mehr
----------------------------------------------------------------------------------------------------
My headphones aren't working. I would like to order new.
----------------------------------------------------------------------------------------------------
Bundled problems with Office since restart:

Messages not sent

Outlook does not connect, mails do not arrive

Error 0x8004deb0 appears when Connection attempt, see attachment

The company account is affected: AB123

Access via Office.com seems to be possible.
----------------------------------------------------------------------------------------------------
Asana funktionierte nicht mehr, nachdem ich meinen Laptop neu gestartet hatte. Bitte helfen Sie.
----------------------------------------------------------------------------------------------------
```

Clearly, several of these tickets are about Discord, CRM, Outlook, and Asana (the German tickets read “Please fix my Hubspot CRM. It has not worked since yesterday” and “Asana stopped working after I restarted my laptop. Please help.”). These tickets should be moved from “Help Needed” to the existing, more specific categories. As the first step of the reassignment process, we will create a new column, “Keywords”, that lists the category names found in the ticket’s “Text” column.

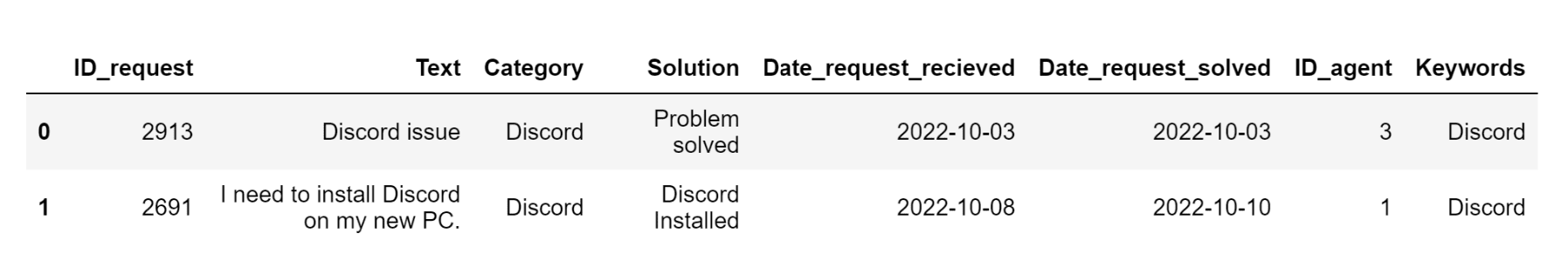

```python
# Lowercased category names, e.g. "asana", "crm", "internet browser"
words_categories = np.unique([word.strip().lower() for word in df.Category])

def keywords(row):
    """Return the words of a ticket text that match a (single-word) category name."""
    list_w = []
    # Strip punctuation, lowercase, and split the text into words
    for word in row.translate(str.maketrans("", "", string.punctuation)).lower().split():
        if word in words_categories:
            list_w.append(word)
    return list_w

df["Keywords"] = df.Text.apply(keywords)

# Since our output is a list, join it into a readable, capitalized string,
# e.g. ["outlook", "mail"] -> "Outlook, Mail"
def clean_row(row):
    return string.capwords(", ".join(row))

df["Keywords"] = df.Keywords.apply(clean_row)
```

Also, note that using "if word in str(words_categories)" instead of "if word in words_categories" would catch words from categories with more than one word (Internet Browser in our case), but it would also require more data preprocessing. To keep things simple and straight to the point, we will stick with code that handles single-word categories. This is how our dataset looks now:

```python
df.head(2)
```
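As noted above, matching single words misses multi-word categories such as Internet Browser. A minimal sketch of one way around this, assuming plain regular expressions are acceptable: compile one word-boundary pattern per category name and search each ticket text. Unlike substring matching against str(words_categories), the word boundaries also stop "mail" from matching inside "Hotmail". The helper names build_patterns and find_category_mentions and the category list are ours, for illustration only.

```python
import re

def build_patterns(categories):
    """Compile one case-insensitive, word-boundary regex per category name.

    Spaces inside a multi-word name ("Internet Browser") match one or more
    whitespace characters, so extra spaces or line breaks still match.
    """
    patterns = {}
    for cat in categories:
        words = [re.escape(w) for w in cat.split()]
        patterns[cat] = re.compile(r"\b" + r"\s+".join(words) + r"\b", re.IGNORECASE)
    return patterns

def find_category_mentions(text, patterns):
    """Return the category names mentioned in text, in the order of patterns."""
    return [cat for cat, pat in patterns.items() if pat.search(text)]

# Hypothetical category list mirroring the tutorial's labels
categories = ["Outlook", "Discord", "CRM", "Internet Browser", "Mail",
              "Keyboard", "Asana", "Mouse", "Help Needed"]
patterns = build_patterns(categories)

print(find_category_mentions("My internet  browser crashes when I open Asana.", patterns))
# ['Internet Browser', 'Asana']
print(find_category_mentions("Hotmail works, but my mouse does not.", patterns))
# ['Mouse']
```

On the DataFrame, something like df.Text.apply(lambda t: find_category_mentions(t, patterns)) would produce a list column analogous to Keywords.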

After extracting the Keywords column, we will estimate the quality of the tickets. Our hypotheses:

- A ticket with just one keyword in the Text field, which matches the category to which the ticket belongs, should be easy to classify.

- A ticket with multiple keywords in the Text field, at least one of which matches the ticket's category, should be easy to classify in most cases.

- A ticket that has keywords, none of which matches its category, is probably a noisy-label case.

- Other tickets are neutral based on keywords.

```python
cl_list = []
for category, kws in zip(df.Category, df.Keywords):
    if category.lower() == kws.lower() and kws != "":
        # a single keyword, equal to the ticket's category
        cl_list.append("easy_classification")
    elif category.lower() in kws.lower():
        # to deal with multiple keywords in the ticket
        cl_list.append("probably_easy_classification")
    elif category.lower() != kws.lower() and kws != "":
        # keywords found, but none matches the category
        cl_list.append("potential_problem")
    else:
        cl_list.append("neutral")

df["Ease_classification"] = cl_list
df.Ease_classification.value_counts(normalize=True, dropna=False).mul(100).round(1).astype(str) + "%"
```

```
neutral                         45.6%
easy_classification             37.7%
potential_problem                9.6%
probably_easy_classification     7.0%
Name: Ease_classification, dtype: object
```

With this new distribution in hand, it is time to examine the tickets flagged as potential problems. In practice, this step would require much more sampling and eyeballing of larger chunks of data, but the rationale would be the same: find problematic tickets and decide whether you can improve their quality or should drop them from the dataset. When you are facing a large dataset, stay calm, and don't forget that data examination and data preparation usually take much more time than building ML algorithms!
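The rules above compare the category with a comma-separated keyword string, which works here but relies on substring checks. A minimal sketch of the same four buckets computed on sets instead, assuming the keywords are kept as lists of lowercased words (that is, before clean_row joins them); the function name ease_of_classification is ours, not the tutorial's.

```python
def ease_of_classification(category, keywords):
    """Assign one of the four buckets from a ticket's label and keyword list.

    category: the ticket's label, e.g. "Outlook"
    keywords: lowercased category names found in the text, e.g. ["outlook", "mail"]
    """
    found = set(keywords)
    label = category.lower()
    if not found:
        return "neutral"                       # no category name in the text
    if found == {label}:
        return "easy_classification"           # the only keyword is the label
    if label in found:
        return "probably_easy_classification"  # several keywords, one is the label
    return "potential_problem"                 # keywords found, none is the label

tickets = [
    ("Outlook", ["outlook"]),
    ("Mail", ["outlook", "mail"]),
    ("Mail", ["outlook"]),
    ("Keyboard", []),
]
for category, kws in tickets:
    print(category, kws, "->", ease_of_classification(category, kws))
```

Because it works on sets, a ticket that mentions its own category twice counts as easy rather than probably easy; that is a deliberate difference from the string-based loop.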

```python
pp = df[df.Ease_classification == "potential_problem"]
# Sample whole rows so each text stays paired with its own category
for _, row in pp.sample(3, random_state=2).iterrows():
    print("TEXT:")
    print(row.Text)
    print("CATEGORY:")
    print(row.Category)
    print("-" * 100)
```

```
TEXT:
outlook issue , I did an update Windows and I have no more outlook on my notebook ? Please help !

Outlook
CATEGORY:
Mail
----------------------------------------------------------------------------------------------------
TEXT:
Please relase blocked attachements from the mail I got from name.surname@company.com. These are data needed for social media marketing campaing.
CATEGORY:
Outlook
----------------------------------------------------------------------------------------------------
TEXT:
Asana funktionierte nicht mehr, nachdem ich meinen Laptop neu gestartet hatte. Bitte helfen Sie.
CATEGORY:
Help Needed
----------------------------------------------------------------------------------------------------
```

The tickets in the Outlook and Mail categories describe the same kind of problem, so we will merge these two categories, which should improve the results of our future ML classifier.
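One way to back up a merge decision with numbers, rather than eyeballing alone, is to count how often each label co-occurs with each keyword. A minimal sketch in plain Python, assuming each ticket is reduced to its label and its list of extracted keywords; the helper names and the toy tickets are made up for illustration and are not taken from the dataset.

```python
from collections import Counter

def label_keyword_counts(tickets):
    """Count (label, keyword) pairs across tickets.

    tickets: iterable of (label, keywords) pairs, where keywords is a list of
    lowercased category names found in the ticket text.
    """
    counts = Counter()
    for label, keywords in tickets:
        for kw in set(keywords):  # count each keyword at most once per ticket
            counts[(label, kw)] += 1
    return counts

def cross_mentions(counts, label_a, label_b):
    """Return (tickets labeled A that mention B, tickets labeled B that mention A)."""
    return counts[(label_a, label_b.lower())], counts[(label_b, label_a.lower())]

# Hypothetical toy tickets, not from the ITSM dataset
tickets = [
    ("Mail", ["outlook"]),
    ("Mail", ["outlook", "mail"]),
    ("Outlook", ["mail"]),
    ("Outlook", ["outlook"]),
    ("Discord", ["discord"]),
]
counts = label_keyword_counts(tickets)
print(cross_mentions(counts, "Mail", "Outlook"))     # (2, 1)
print(cross_mentions(counts, "Discord", "Outlook"))  # (0, 0)
```

High counts in both directions suggest that two labels cover the same topic and are merge candidates. On the real DataFrame, pd.crosstab(df.Category, df.Keywords) gives a similar table view.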

Merging into the Cluster

```python
mail_categories_to_merge = ["Outlook", "Mail"]

# Count the tickets we expect to end up in the merged category
sum_mail_cluster = df["Category"].isin(mail_categories_to_merge).sum()
print("Number of categories to be merged into new cluster: ", len(mail_categories_to_merge))
print("Expected number of tickets in the new cluster: ", sum_mail_cluster)

def rename_to_mail_cluster(category):
    """Map every category in mail_categories_to_merge to the label "Mail_CLUSTER"."""
    if category in mail_categories_to_merge:
        return "Mail_CLUSTER"
    return category

df["Category"] = df["Category"].apply(rename_to_mail_cluster)
df.Category.value_counts()
```

```
Number of categories to be merged into new cluster:  2
Expected number of tickets in the new cluster:  33
Mail_CLUSTER        33
Discord             15
CRM                 14
Internet Browser    12
Keyboard            11
Asana               10
Mouse               10
Help Needed          9
Name: Category, dtype: int64
```

Last but not least, we want to relabel some tickets from the meta category “Help Needed” to the proper category.

```python
# Flag tickets whose text contains at least one category name
mentions_category = (
    df["Text"].str.lower()
    .str.replace(r"[^\w\s]", "", regex=True)  # strip punctuation
    .str.split()
    .apply(lambda words: bool(set(words).intersection(words_categories)))
)
df.loc[(df["Category"] == "Help Needed") & mentions_category, "Category"] = "Change"

def cat_name_change(cat, keywords):
    """Replace the temporary "Change" label with the keywords found in the text."""
    if cat == "Change":
        return keywords
    return cat

df["Category"] = df.apply(lambda x: cat_name_change(x.Category, x.Keywords), axis=1)
# Keywords were capitalized with string.capwords, so restore the original spelling
df["Category"] = df["Category"].replace({"Crm": "CRM"})
df.Category.value_counts(dropna=False)
```

```
Mail_CLUSTER        33
Discord             16
CRM                 15
Internet Browser    12
Asana               11
Keyboard            11
Mouse               10
Help Needed          6
Name: Category, dtype: int64
```

We have relabeled and cleaned our data, but we shouldn't call ourselves data scientists if we don't run at least one experiment to test the impact of our work on the final classification. We will do so with the Complement Naive Bayes classifier from scikit-learn. Feel free to try other, more complex algorithms. Also, be aware that further data cleaning could be done; for example, we could drop all tickets left in the "Help Needed" category.
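The relabeling above copies the Keywords string into Category, so a few details need care. Keywords are capitalized by string.capwords (hence the "Crm" fix). Keywords were computed before the merge, so a Help Needed ticket mentioning Outlook or Mail would get a label that no longer exists. A ticket with several keywords would get a combined label such as "Asana, Discord". A minimal sketch of a stricter variant follows, assuming we relabel a ticket only when its keywords point to exactly one target label, and we map keywords to post-merge labels through an explicit dictionary. KEYWORD_TO_LABEL and relabel_meta are hypothetical names. The sketch also shows the optional step of dropping whatever is left in Help Needed.

```python
# Hypothetical mapping from extracted keywords to the labels used after the merge
KEYWORD_TO_LABEL = {
    "outlook": "Mail_CLUSTER",
    "mail": "Mail_CLUSTER",
    "discord": "Discord",
    "crm": "CRM",
    "asana": "Asana",
    "keyboard": "Keyboard",
    "mouse": "Mouse",
}

def relabel_meta(label, keywords, meta_label="Help Needed"):
    """Move a meta-category ticket to a real category when that is unambiguous.

    Returns the new label, or the original one if the ticket is not in the
    meta category or its keywords do not point to exactly one target label.
    """
    if label != meta_label:
        return label
    targets = {KEYWORD_TO_LABEL[kw] for kw in keywords if kw in KEYWORD_TO_LABEL}
    return targets.pop() if len(targets) == 1 else label

tickets = [
    ("Help Needed", ["asana"]),
    ("Help Needed", ["outlook", "mail"]),   # both map to Mail_CLUSTER
    ("Help Needed", ["asana", "discord"]),  # ambiguous, left as is
    ("Help Needed", []),
    ("Keyboard", ["mouse"]),                # not in the meta category
]
relabeled = [(relabel_meta(label, kws), kws) for label, kws in tickets]
print([label for label, _ in relabeled])
# ['Asana', 'Mail_CLUSTER', 'Help Needed', 'Help Needed', 'Keyboard']

# Optional further cleaning: drop tickets still left in the meta category
cleaned = [(label, kws) for label, kws in relabeled if label != "Help Needed"]
print(len(cleaned))  # 3
```

Requiring a single target label trades coverage for precision: ambiguous tickets stay in Help Needed, where they can be reviewed by hand or dropped.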

Testing the Impact of Data Munging

```python
model = make_pipeline(TfidfVectorizer(), ComplementNB())

# Reload the original data and only drop rows with missing values
df_o = pd.read_excel("ITSM_data.xlsx")
important_categories = ["Text", "Category"]
df_o = df_o.dropna(subset=important_categories)

datasets = {
    "dataset just without missing": df_o,
    "dataset after deeper cleaning": df,
}

for name, dataframe in datasets.items():
    # Split dataset into training set and test set
    X_train, X_test, y_train, y_test = train_test_split(
        dataframe.Text, dataframe.Category, test_size=0.2, random_state=1
    )
    # Train the model on the training data
    model.fit(X_train, y_train)
    # Predict the categories of the test set
    y_pred = model.predict(X_test)
    print(f"Accuracy of Complement Naive Bayes classifier model on {name} is: "
          f"{round(metrics.accuracy_score(y_test, y_pred), 2)}")
```

```
Accuracy of Complement Naive Bayes classifier model on dataset just without missing is: 0.48
Accuracy of Complement Naive Bayes classifier model on dataset after deeper cleaning is: 0.65
```

Pretty impressive, right? The dataset we used is small (on purpose, so you can easily see what happens in each step), so different random seeds might produce different results, but in the vast majority of cases the model will perform significantly better on the cleaned dataset than on the original one. We did a good job!
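Because a single 80/20 split of roughly a hundred tickets is noisy, a fairer comparison repeats the split with several seeds and looks at the spread. A minimal sketch using scikit-learn's train_test_split with the same TfidfVectorizer and ComplementNB pipeline; the helper name accuracy_over_seeds and the toy texts are hypothetical. Keep in mind that merging Outlook and Mail also reduces the number of classes, so part of any gain comes from an easier task, not only from cleaner labels.

```python
from statistics import mean, stdev

from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import ComplementNB
from sklearn.pipeline import make_pipeline

def accuracy_over_seeds(texts, labels, seeds=range(10), test_size=0.2):
    """Fit a fresh TF-IDF + ComplementNB pipeline per seed; return all test accuracies."""
    scores = []
    for seed in seeds:
        X_train, X_test, y_train, y_test = train_test_split(
            texts, labels, test_size=test_size, random_state=seed
        )
        model = make_pipeline(TfidfVectorizer(), ComplementNB())
        model.fit(X_train, y_train)
        scores.append(accuracy_score(y_test, model.predict(X_test)))
    return scores

# Hypothetical toy data, only to show the call
texts = ["outlook does not sync", "mail not arriving", "discord emojis missing",
         "discord voice broken", "asana task lost", "asana board empty"] * 5
labels = ["Mail", "Mail", "Discord", "Discord", "Asana", "Asana"] * 5

scores = accuracy_over_seeds(texts, labels)
print(f"accuracy: mean={mean(scores):.2f}, std={stdev(scores):.2f}, n={len(scores)}")
```

On the tutorial data, calling accuracy_over_seeds(dataframe.Text, dataframe.Category) once per dataset gives a mean and a spread for each dataset instead of a single number.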

Nikola Greb has been coding for more than four years, and for the past two years he has specialized in NLP. Before turning to data science, he was successful in sales, HR, writing, and chess.
