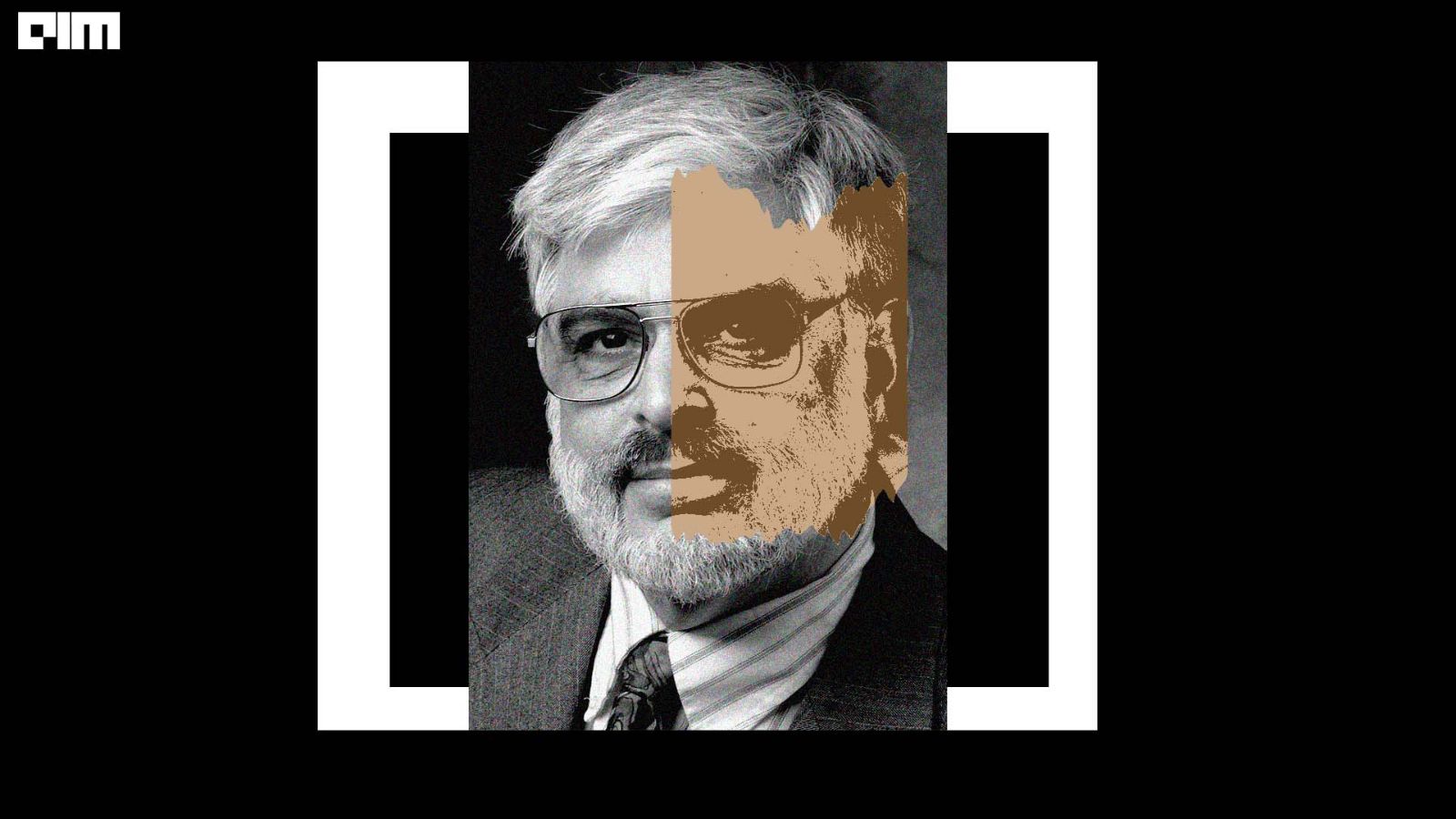

“Looking back, it’s amazing how easy things were for researchers when I was a young man, in comparison to just how competitive the field has become,” said the 80-year-old American computer scientist Jeffrey Ullman in an exclusive interview with AIM.

Recalling the state of research in the 1960s, he said that everywhere you looked, there was something new. Today plenty of new directions still come up, but much of current research amounts to squeezing a little more performance out of an existing idea for a small improvement.

On research culture more broadly, he said, “Part of the problem is that too many people are led to believe that success in life depends upon them doing research. What has happened is that people who could be excellent teachers are basically forced to do second- and third-grade research because that’s how they get promoted.”

He added, “If there’s money to be made, in that case, just make your money. Go do something else.”

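Ullman shared the 2020 ACM A.M. Turing Award with his longtime friend and collaborator Alfred Aho for fundamental algorithms and theory underlying programming language implementation, work the pair began in the late 1960s. Their research and their influential textbooks shaped the design of compilers, the programs that translate source code into instructions a computer can execute.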

Bias In, Bias Out

Ullman’s contributions to computer science have had a direct impact on the foundations of data science. His research underpins many key concepts and techniques used to store, analyse, and interpret data, making him an influential figure in the field.

Emphasising the relevance of data, he said, “The thing that makes machine learning powerful is that you can feed so much data to these interesting models. For example, multi-layer neural nets only make sense if you look at typically millions or billions of training examples.” He also noted that “for years people have been trying to exploit data to solve problems”.

Clarifying the bias problem in language models, he said, “Often people think the machine learning algorithms introduce bias. Fifty years ago, everybody knew ‘garbage in, garbage out’. In this particular case, it is ‘bias in, bias out’.”

He cited a canonical example: a company that decided to write software to sift through the résumés of job applicants. “If the data shows women have not been promoted in proportion to their ability, then the AI is going to learn that being female is a negative indication of success, and they’re gonna throw out females. If it’s a good algorithm, it’s going to learn and pick that up,” he said.

The fault, Ullman believes, lies not with the AI but with the company’s policy and the data that policy has generated. “If they had built an LLM in the time of Galileo, the chatbot would have said that the sun revolves around the Earth, because everybody believed that,” he said with a chuckle.
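The short Python sketch below makes the “bias in, bias out” point concrete. Everything in it is hypothetical:

- the records, the promotion thresholds, and the function names are invented for illustration;
- the frequency-counting “model” is a deliberately simple stand-in, not the résumé-screening system Ullman described.

By construction, ability is distributed identically across both groups. Only the historical promotion policy differs.

```python
from collections import defaultdict

def make_history():
    """Hypothetical historical records: (gender, ability, promoted)."""
    records = []
    for gender in ("male", "female"):
        # Identical ability range (0-9) for both groups, by construction.
        for ability in range(10):
            # Hypothetical biased policy: women face a higher promotion bar.
            bar = 5 if gender == "male" else 8
            records.append((gender, ability, ability >= bar))
    return records

def fit_promotion_rates(records):
    """Deliberately naive 'model': estimate P(promoted | gender) from labels."""
    counts = defaultdict(lambda: [0, 0])  # gender -> [promoted, total]
    for gender, _ability, promoted in records:
        counts[gender][0] += int(promoted)
        counts[gender][1] += 1
    return {gender: hits / total for gender, (hits, total) in counts.items()}

rates = fit_promotion_rates(make_history())
print(rates)  # {'male': 0.5, 'female': 0.2}
```

The learner only summarises the labels it is given, so it reproduces the biased policy faithfully. Women score 0.2 against 0.5 for men despite identical ability. A more capable model would pick up the same signal, which is Ullman’s point about a “good algorithm”.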

ChatGPT and Eric Schmidt

“I am surprised you didn’t ask if these language models are becoming sentient,” he said jokingly. “The deceptive thing is that the people who created the text on which these models are built are sentient. The LLM is regurgitating the consensus of what sentient beings say, so it is going to sound sentient,” he added.

A couple of days earlier, Ullman had been discussing with Eric Schmidt what happens when you ask ChatGPT for the best way to kill a million people.

The former Google CEO told Ullman that ChatGPT would not answer that question; it knows not to. But that does not mean you can’t rephrase the question in a way that gets some really dangerous information out of it.

“It’s a very hard problem. If you understand that there is something these generative models shouldn’t be saying, you can stop them from saying it. But how do you understand everything that could cause trouble?” Ullman pondered.

Drawing a parallel, he pointed to social networks’ efforts to rid their platforms of hate speech. Tech giants have worked on the problem for years, “but how to write an algorithm that disallows anything that we would all agree is hate speech and allows anything that we would all agree is not hate speech” remains unsolved.

Current work

Ullman is currently trying to estimate how much text is available to train these models. “I’ve heard that the newest model, GPT-4, essentially uses everything,” he said. “At some point, they will run out of new data. You’ve got 8 billion people. Let’s say half of the people type around 100,000 words a year. You’ve got a couple of hundred trillion words of data, and that might support a model with significantly more than a trillion parameters.”
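The estimate in the quote is a single multiplication. The minimal Python sketch below uses only the figures Ullman gives, and the variable names are ours. It shows where “a couple of hundred trillion” comes from. Note that the result is a per-year figure counted in words, not in the tokens models are actually trained on.

```python
# Inputs taken from Ullman's back-of-envelope estimate.
population = 8_000_000_000           # "You've got 8 billion people"
typists = population // 2            # "half of the people"
words_per_typist_per_year = 100_000  # "around 100,000 words a year"

words_per_year = typists * words_per_typist_per_year
print(f"{words_per_year:,} words per year")     # 400,000,000,000,000 words per year
print(f"{words_per_year / 1e12:.0f} trillion")  # 400 trillion
```

Roughly 400 trillion words a year falls within the range Ullman describes as “a couple of hundred trillion”.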

Ullman believes there are many occupations in which LLMs could be used as a tool, but he rejects the idea that lawyers and physicians will be completely replaced by them. “I do not think it’s going to happen,” he said.

“In fact, what I suspect will happen is exactly what happened when tools like Microsoft Word became available. You’ll spend just as much time writing or doing your job, but the output will be better quality. The jobs won’t really go away, the results may be better if these models are used,” he concluded.

The post Jeffrey Ullman’s Unsettling Ultimatum appeared first on Analytics India Magazine.