This week, the Allen Institute for AI (Ai2) released Tülu 3 405B, a 405-billion-parameter open source AI model claimed to outperform DeepSeek-V3 and match GPT-4o in key benchmarks, notably mathematical reasoning and safety.

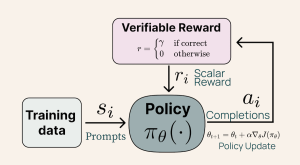

This release showcases Ai2’s novel training method, Reinforcement Learning with Verifiable Rewards (RLVR). Tülu 3 405B builds on Ai2’s Tülu 3 post-training recipe, first released in November 2024. The model fine-tunes Meta’s Llama-405B using a combination of carefully curated data, supervised fine-tuning, Direct Preference Optimization (DPO), and RLVR.

RLVR is particularly noteworthy because it enhances skills where verifiable outcomes exist, such as math problem-solving and instruction-following. According to Ai2’s findings, RLVR scaled more effectively at 405B parameters than with smaller models like Tülu 3 70B and Tülu 3 8B. Scaling up gave Tülu 3 405B a big boost in math skills, adding weight to the idea that bigger models do better when fed specialized data instead of a little bit of everything, à la broad datasets.
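To make the idea of a "verifiable" reward concrete, here is a minimal Python sketch of what such a check could look like for a math problem. It is not Ai2’s implementation: the function names, the answer-extraction rule (take the last number in the completion), and the fixed `bonus` value are all assumptions chosen for illustration.

```python
import re
from typing import Optional


def extract_final_answer(completion: str) -> Optional[str]:
    """Hypothetical helper: treat the last number in the text as the answer.

    Thousands separators are stripped first, so "1,234" is read as 1234.
    """
    numbers = re.findall(r"-?\d+(?:\.\d+)?", completion.replace(",", ""))
    return numbers[-1] if numbers else None


def verifiable_reward(completion: str, ground_truth: str, bonus: float = 1.0) -> float:
    """Return `bonus` if the extracted answer matches the known answer, else 0.

    The reward comes from a deterministic check against ground truth,
    not from a learned reward model. That is the sense of "verifiable" here.
    """
    answer = extract_final_answer(completion)
    if answer is None:
        return 0.0
    return bonus if float(answer) == float(ground_truth) else 0.0


if __name__ == "__main__":
    print(verifiable_reward("6 * 7 = 42, so the total is 42.", "42"))
    print(verifiable_reward("I think the total is 41.", "42"))
```

In a reinforcement learning loop, a score like this would stand in for the reward signal on prompts whose answers can be checked automatically; prompts without a checkable answer would need a different signal.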

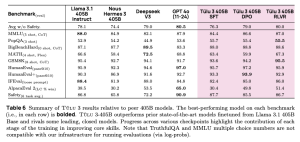

Ai2’s internal evaluations suggest that Tülu 3 405B consistently outperforms DeepSeek-V3, particularly in safety benchmarks and mathematical reasoning. The model also competes with OpenAI’s GPT-4o, and it surpasses earlier open-weight post-trained models, including Llama 3.1 405B Instruct and Nous Hermes 3 405B.

A table comparing Tülu 3 405B performance with other current models across multiple evaluation benchmarks. (Source: Ai2)

Training a 405-billion-parameter model is no small task. Tülu 3 405B required 256 GPUs across 32 nodes, using vLLM, an optimized inference engine, with 16-way tensor parallelism. According to a blog post, Ai2’s engineers faced several challenges, including these intense compute requirements: “Training Tülu 3 405B demanded 32 nodes (256 GPUs) running in parallel. For inference, we deployed the model using vLLM with 16-way tensor parallelism, while utilizing the remaining 240 GPUs for training. While most of our codebase scaled well, we occasionally encountered NCCL timeout and synchronization issues that required meticulous monitoring and intervention,” the authors wrote.
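The GPU split quoted above can be checked with a few lines of arithmetic. The sketch below uses only the figures from the blog post (32 nodes, 256 GPUs, 16-way tensor parallelism); the function name is hypothetical, and the assumption that GPUs are spread evenly across nodes is ours, not something Ai2 states.

```python
def gpu_budget(nodes: int, total_gpus: int, inference_tp: int) -> dict:
    """Split a cluster between a tensor-parallel inference engine and training.

    Assumes GPUs are evenly distributed across nodes (an illustrative
    assumption; the source only gives the totals).
    """
    gpus_per_node = total_gpus // nodes
    return {
        "gpus_per_node": gpus_per_node,
        # One GPU per tensor-parallel shard of the inference model.
        "inference_gpus": inference_tp,
        # Everything not used for inference is left for training.
        "training_gpus": total_gpus - inference_tp,
        # Nodes the inference engine would span under the even-split assumption.
        "inference_nodes": inference_tp // gpus_per_node,
    }


if __name__ == "__main__":
    print(gpu_budget(nodes=32, total_gpus=256, inference_tp=16))
```

With the reported figures this yields 8 GPUs per node and 240 GPUs left for training, which matches the number in the quote.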

There were also hyperparameter tuning challenges: “Given the computational costs, hyperparameter tuning was limited. We followed the principle of ‘lower learning rates for larger models’ per prior practice with Llama models,” the Ai2 team said.

A diagram outlining the Reinforcement Learning with Verifiable Rewards (RLVR) process. (Source: Ai2)

With Tülu 3 405B, Ai2 isn’t just releasing another open-source AI model. It’s making a statement about model training. By scaling up its RLVR method, Ai2 has not only built a model that can hold its own against top-tier AI like GPT-4o and DeepSeek-V3, but has also made the case for an important idea: bigger models can get better when trained the right way. Training Tülu 3 405B didn’t just throw more data at the problem; it used specialized, high-quality data and thoughtful training techniques.

But beyond the technical wins, Tülu 3 405B highlights a bigger shift in AI: the fight to keep innovation open and accessible. While the biggest AI models are often locked behind corporate paywalls, Ai2 is betting on a future where powerful AI stays available to researchers, developers, and anyone curious enough to experiment.

To that end, Ai2 has made Tülu 3 405B freely available for research and experimentation, hosting it on Google Cloud (and soon, Vertex) and offering a demo through the Ai2 Playground.